OpenCVのPhaseCorrelate関数を改造してPOCステレオをやってみる(その2)

(編集メモ)

高速化を検討します。

やり散らかし感のあるままのソースコードですが、興味がほかに移ってしまったので、あげておきます。FFTの順変換を先に全て計算してしまい大量のメモリに持たせています。

Core i7-4770Sでtsukubaの処理におよそ1.5~3.0秒。リアルタイムまであと40倍。。。

#include "opencv2/opencv.hpp"

#include <immintrin.h>//AVX: -mavx

#include <omp.h>

using namespace cv;

#if _DEBUG

#pragma comment(lib, "opencv_core248d.lib")

#pragma comment(lib, "opencv_highgui248d.lib")

#pragma comment(lib, "opencv_imgproc248d.lib")

#pragma comment(lib, "opencv_contrib248d.lib")

#else

#pragma comment(lib, "opencv_core248.lib")

#pragma comment(lib, "opencv_highgui248.lib")

#pragma comment(lib, "opencv_imgproc248.lib")

#pragma comment(lib, "opencv_contrib248.lib")

#endif

// インクルードパス、ライブラリパスをプロジェクトに登録してください。

void vec_mul(const size_t n, double *z, const double *x, const double *y);

static void mulSpectrums1D( Mat& srcA, Mat& srcB, Mat& dst, int flags, bool conjB );

static void magSpectrums1D( Mat &src, Mat &dst);

static void divSpectrums1D( Mat &srcA, Mat &srcB, Mat &dst, int flags, bool conjB);

static void fftShift1D( Mat &out);

void vec_mul(const size_t n, double *z, const double *x, const double *y){

static const size_t double_size = 4; //単精度は8つずつ計算

const size_t end = n / double_size;

//AVX 専用の型にデータをロードする

__m256d *vz = (__m256d *)z;

__m256d *vx = (__m256d *)x;

__m256d *vy = (__m256d *)y;

for(size_t i=0; i<end; ++i)

vz[i] = _mm256_mul_pd(vx[i], vy[i]); //AVX を用いる SIMD 演算

}

cv::Point2d phaseCorrelate1DWithoutDFT(vector<Mat> &fft1, vector<Mat> &fft2, int pu, int pv, int qu, int qv, int rows, int cols, int ww, int wh, int k=65535, int flag_pixel = false)

{

int M=wh;

int N=ww;

vector<Mat> C;

C.resize(M);

#pragma omp parallel for

for(int i=0; i<M; i++){

Mat P, Pm;

mulSpectrums1D(fft1[(pv+i)*cols+pu], fft2[(qv+i)*cols+qu], P, 0, true);

magSpectrums1D(P, Pm);

divSpectrums1D(P, Pm, C[i], 0, false); // FF* / |FF*| (phase correlation equation completed here...)

if(0<k&&k<C[i].cols){

Rect lpf_roi(k,0,C[i].cols-k,1);

Mat zero = C[i](lpf_roi);

zero = Mat::zeros(zero.size(), zero.type());

}

}

Mat Rall;

if(M<11){

#pragma omp parallel for

for(int i=0; i<M; i++){

Rall = Rall + C[i] / M;

}

}

else{

vector<double> hann;

hann.resize(M);

double sum_hann = 0;

for(int i=0; i<M; i++){

hann[i] = 0.5 - 0.5*cos(2.0 * CV_PI * static_cast<double>(i) / M); // ww-1

sum_hann += hann[i];

}

#pragma omp parallel for

for(int i=0; i<M; i++){

Rall = Rall + C[i] * hann[i] / sum_hann;

}

}

idft(Rall, Rall); // gives us the nice peak shift location...

fftShift1D(Rall); // shift the energy to the center of the frame.

// locate the highest peak

Point peakLoc;

minMaxLoc(Rall, NULL, NULL, NULL, &peakLoc);

Point2d t(0,0);

if(!flag_pixel&&(peakLoc.x>=1)&&(peakLoc.x<Rall.cols-1)){

t.x = (Rall.at<double>(0, peakLoc.x-1)-Rall.at<double>(0, peakLoc.x+1))/(2.0*Rall.at<double>(0,peakLoc.x-1)-4.0*Rall.at<double>(0,peakLoc.x)+2.0*Rall.at<double>(0,peakLoc.x+1));

}

// adjust shift relative to image center...

Point2d center( (double)ww/ 2.0, 0.0);

C.clear();

t.x = t.x + peakLoc.x;

return (center - t);

}

int main(int argc, char* argv[])

{

// Parameters

Mat tempI = imread("tsukuba_r.png", 0);

Mat tempJ = imread("tsukuba_l.png", 0);

if(tempI.empty()||tempJ.empty()) return -1;

double mult_scale = 2.0; // 1階層ごとの画像サイズの縮尺

int thresh = tempI.cols/4; // 階層の深さ決定の目安

int ww = getOptimalDFTSize(32); // 窓の幅 半値幅が有効幅

int wh = 17; // 窓の高さ

// Decision of the Image Hierarchical Level

int lmax=0;

for(;;){

int value = (int)(pow(mult_scale,-(double)(lmax++))*tempI.cols);

if(thresh>value) break;

}

vector<int> width, height;

width.resize(lmax);

height.resize(lmax);

for(int i=0; i<lmax; i++){

width[i] = (int)(pow(mult_scale,-(i))*tempI.cols);

height[i] = tempI.rows;

}

Mat hann = Mat(1,ww,CV_64F);

for(int i=0; i<ww; i++){

hann.at<double>(i) = 0.5 - 0.5*cos(2.0 * CV_PI * static_cast<double>(i) / (ww)); // ww-1

}

Mat disparity = Mat::zeros( Size(tempI.cols, tempI.rows), CV_64F);

vector<vector<Mat> > dfti(lmax, vector<Mat>(tempI.cols*(tempI.rows+ww+1)));

vector<vector<Mat> > dftj(lmax, vector<Mat>(tempJ.cols*(tempJ.rows+ww+1)));

// Step1: Image Pyramid

vector<Mat> I, J, LI, LJ;

LI.resize(lmax);

LJ.resize(lmax);

I.resize(lmax);

J.resize(lmax);

#pragma omp parallel for

for(int l=0; l<lmax; l++){

resize(tempI, I[l], Size(width[l], height[l]), 0, 0, 1);

resize(tempJ, J[l], Size(width[l], height[l]), 0, 0, 1);

copyMakeBorder(I[l], LI[l], wh/2, wh/2+1, ww/2, ww/2, BORDER_CONSTANT, Scalar::all(0));

copyMakeBorder(J[l], LJ[l], wh/2, wh/2+1, ww/2, ww/2, BORDER_CONSTANT, Scalar::all(0));

}

for(int l=lmax-1; l>=0; l--){

for(int v= 0; v<(int)LI[l].rows; v++){

#pragma omp parallel for

for(int u=0; u<(int)I[l].cols; u++){

Rect roi(u,v,ww,1);

Mat Iroi = LI[l](roi);

Mat I64f, J64f;

Iroi.convertTo(I64f, CV_64F);

multiply(hann, I64f, I64f);

dft(I64f, dfti[l][v*I[l].cols+u], DFT_REAL_OUTPUT);

Mat Jroi = LJ[l](roi);

Jroi.convertTo(J64f, CV_64F);

multiply(hann, J64f, J64f);

dft(J64f, dftj[l][v*J[l].cols+u], DFT_REAL_OUTPUT);

}

}

}

for(int v= 0; v<(int)I[0].rows; v++){

#pragma omp parallel for

for(int u=0; u<(int)I[0].cols; u++){

int error = 0;

vector<Point2d> P, Q, Qd;

P.resize(lmax+1);

Q.resize(lmax+1);

Qd.resize(lmax+1);

// Step2:

P[lmax] = Point2d(pow(mult_scale,-lmax)*u,v);

Q[lmax] = Point2d(pow(mult_scale,-lmax)*u,v);

for(int l=lmax-1; l>=0; l--){

// Step3:

P[l] = Point2d(pow(mult_scale,-l)*u,v);

Qd[l] = Point2d(mult_scale*Q[l+1].x,Q[l+1].y);

// Step4:

if( (Qd[l].x<0)||(LJ[l].cols<Qd[l].x+ww)){

Q[l] = P[l];

continue;

}

Point2d shift = phaseCorrelate1DWithoutDFT(dfti[l], dftj[l], P[l].x, P[l].y, Qd[l].x, Qd[l].y, I[l].rows, I[l].cols, ww, wh, ww/2, true);

if( P[l].x < (shift.x+Qd[l].x)){

Q[l] = Qd[l]+Point2d(shift.x, 0);

}

else {

Q[l] = Qd[l];

}

} // Step5

{ // Step6

Rect i_roi( (int)P[0].x,(int)P[0].y,ww,wh);

if( (Q[0].x<0)||(LJ[0].cols<Q[0].x+ww)){

error = 1;

goto end;

}

Rect j_roi = Rect( (int)Q[0].x,(int)Q[0].y,ww,wh);

Point2d shift = phaseCorrelate1DWithoutDFT(dfti[0], dftj[0], P[0].x, P[0].y, Q[0].x, Q[0].y, I[0].rows, I[0].cols, ww, wh, ww/2, false);

if( P[0].x < (shift.x+Q[0].x)){

Q[0] = Q[0]+Point2d(shift.x, 0);

}

#if _DEBUG

Mat matchedImg = Mat::zeros(Size(I[0].cols*2,0), CV_8UC1);

vconcat(I[0],J[0],matchedImg);

cvtColor( matchedImg, matchedImg, COLOR_GRAY2RGB);

line(matchedImg,Point(P[0].x,P[0].y),Point(Q[0].x,matchedImg.rows/2+Q[0].y),Scalar(0,0,255));

imshow("matched", matchedImg);

waitKey(1);

#endif

}

end:

double d = Q[0].x-P[0].x;

double view_band = 1.0; // エラー閾値、表示調整用

if(view_band*ww<d||error) d=0;

disparity.at<double>(v, u) = d;

}

}

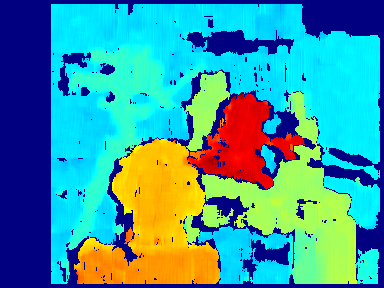

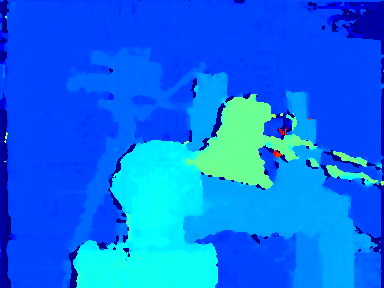

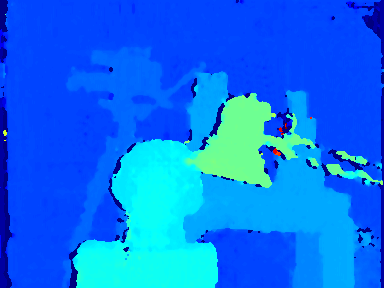

// http://stackoverflow.com/questions/13840013/opencv-how-to-visualize-a-depth-image

double min, max;

cv::minMaxIdx(disparity, &min, &max);

Mat adjMap;

double scale = 255/(max-min);

disparity.convertTo(adjMap, CV_8UC1, scale, -min*scale);

cv::Mat falseColorMap;

applyColorMap(adjMap, falseColorMap, cv::COLORMAP_JET);

imshow("disparity", falseColorMap);

imwrite("disparity.bmp", falseColorMap);

waitKey();

return 0;

}

static void mulSpectrums1D( Mat& srcA, Mat& srcB,

Mat& dst, int flags, bool conjB )

{

int depth = srcA.depth(), cn = srcA.channels(), type = srcA.type();

int rows = srcA.rows, cols = srcA.cols;

int j, k;

CV_Assert( type == srcB.type() && srcA.size() == srcB.size() );

CV_Assert( type == CV_32FC1 || type == CV_32FC2 || type == CV_64FC1 || type == CV_64FC2 );

dst.create( srcA.rows, srcA.cols, type );

bool is_1d = (flags & DFT_ROWS) || (rows == 1 || (cols == 1 &&

srcA.isContinuous() && srcB.isContinuous() && dst.isContinuous()));

if( is_1d && !(flags & DFT_ROWS) )

cols = cols + rows - 1, rows = 1;

int ncols = cols*cn;

int j0 = cn == 1;

int j1 = ncols - (cols % 2 == 0 && cn == 1);

const double* dataA = (const double*)srcA.data;

const double* dataB = (const double*)srcB.data;

double* dataC = (double*)dst.data;

size_t stepA = srcA.step/sizeof(dataA[0]);

size_t stepB = srcB.step/sizeof(dataB[0]);

size_t stepC = dst.step/sizeof(dataC[0]);

dataC[0] = dataA[0]*dataB[0];

if( cols % 2 == 0 )

dataC[j1] = dataA[j1]*dataB[j1];

if( !conjB )

for( j = j0; j < j1; j += 2 )

{

double re = dataA[j]*dataB[j] - dataA[j+1]*dataB[j+1];

double im = dataA[j+1]*dataB[j] + dataA[j]*dataB[j+1];

dataC[j] = re; dataC[j+1] = im;

}

else

for( j = j0; j < j1; j += 2 )

{

double re = dataA[j]*dataB[j] + dataA[j+1]*dataB[j+1];

double im = dataA[j+1]*dataB[j] - dataA[j]*dataB[j+1];

dataC[j] = re; dataC[j+1] = im;

}

}

static void magSpectrums1D( Mat &src, Mat &dst)

{

int depth = src.depth(), cn = src.channels(), type = src.type();

int rows = src.rows, cols = src.cols;

int j, k;

CV_Assert( type == CV_32FC1 || type == CV_32FC2 || type == CV_64FC1 || type == CV_64FC2 );

dst.create( src.rows, src.cols, CV_64FC1 );

dst.setTo(0);//Mat elements are not equal to zero by default!

bool is_1d = (rows == 1 || (cols == 1 && src.isContinuous() && dst.isContinuous()));

if( is_1d )

cols = cols + rows - 1, rows = 1;

int ncols = cols*cn;

int j0 = cn == 1;

int j1 = ncols - (cols % 2 == 0 && cn == 1);

const double* dataSrc = (const double*)src.data;

double* dataDst = (double*)dst.data;

size_t stepSrc = src.step/sizeof(dataSrc[0]);

size_t stepDst = dst.step/sizeof(dataDst[0]);

dataDst[0] = dataSrc[0]*dataSrc[0];

if( cols % 2 == 0 )

dataDst[j1] = dataSrc[j1]*dataSrc[j1];

for( j = j0; j < j1; j += 2 )

{

dataDst[j] = std::sqrt(dataSrc[j]*dataSrc[j] + dataSrc[j+1]*dataSrc[j+1]);

}

}

static void divSpectrums1D( Mat &srcA, Mat &srcB, Mat &dst, int flags, bool conjB)

{

int depth = srcA.depth(), cn = srcA.channels(), type = srcA.type();

int rows = srcA.rows, cols = srcA.cols;

int j, k;

CV_Assert( type == srcB.type() && srcA.size() == srcB.size() );

CV_Assert( type == CV_32FC1 || type == CV_32FC2 || type == CV_64FC1 || type == CV_64FC2 );

dst.create( srcA.rows, srcA.cols, type );

bool is_1d = (flags & DFT_ROWS) || (rows == 1 || (cols == 1 &&

srcA.isContinuous() && srcB.isContinuous() && dst.isContinuous()));

if( is_1d && !(flags & DFT_ROWS) )

cols = cols + rows - 1, rows = 1;

int ncols = cols*cn;

int j0 = cn == 1;

int j1 = ncols - (cols % 2 == 0 && cn == 1);

const double* dataA = (const double*)srcA.data;

const double* dataB = (const double*)srcB.data;

double* dataC = (double*)dst.data;

double eps = DBL_EPSILON; // prevent div0 problems

size_t stepA = srcA.step/sizeof(dataA[0]);

size_t stepB = srcB.step/sizeof(dataB[0]);

size_t stepC = dst.step/sizeof(dataC[0]);

if( is_1d && cn == 1 )

{

dataC[0] = dataA[0] / (dataB[0] + eps);

if( cols % 2 == 0 )

dataC[j1] = dataA[j1] / (dataB[j1] + eps);

}

if( !conjB )

for( j = j0; j < j1; j += 2 )

{

double denom = dataB[j]*dataB[j] + dataB[j+1]*dataB[j+1] + eps;

double re = dataA[j]*dataB[j] + dataA[j+1]*dataB[j+1];

double im = dataA[j+1]*dataB[j] - dataA[j]*dataB[j+1];

dataC[j] = re / denom;

dataC[j+1] = im / denom;

}

else

for( j = j0; j < j1; j += 2 )

{

double denom = dataB[j]*dataB[j] + dataB[j+1]*dataB[j+1] + eps;

double re = dataA[j]*dataB[j] - dataA[j+1]*dataB[j+1];

double im = dataA[j+1]*dataB[j] + dataA[j]*dataB[j+1];

dataC[j] = re / denom;

dataC[j+1] = im / denom;

}

}

static void fftShift1D(Mat &out)

{

if(out.rows == 1 && out.cols == 1)

{

// trivially shifted.

return;

}

std::vector<Mat> planes;

split(out, planes);

int xMid = out.cols >> 1;

int yMid = out.rows >> 1;

bool is_1d = xMid == 0 || yMid == 0;

xMid = xMid + yMid;

for(size_t i = 0; i < planes.size(); i++)

{

Mat tmp;

Mat half0(planes[i], Rect(0, 0, xMid, 1));

Mat half1(planes[i], Rect(xMid, 0, xMid, 1));

half0.copyTo(tmp);

half1.copyTo(half0);

tmp.copyTo(half1);

}

merge(planes, out);

}

・OpenCVのDFTよりも大浦さんFFTの方が2倍近く早い

・順FFTは画像全画素に対して計算してしまう

・SIMD

大浦さんのFFT(rdft)の変換結果

2492.17 26.841 -1320.47 -87.0134 -7.17704 -60.3765 51.3907 -3.14701 31.0883 -101.097 54.217 111.956 -31.8355 50.0337 -39.1108 -24.2204 -6.02643 -8.15339 -1.7731

8 -34.7583 33.0092 1.28083 19.6931 22.3442 -16.5685 18.5711 -22.3404 8.28457 -13.9967 -24.1731 10.3953 -20.5076

r[0]r[N]r[1]i[1]・・・

OpenCVのDFTの変換結果

2492.17 -1320.47 87.0134 -7.17704 60.3765 51.3907 3.14701 31.0883 101.097 54.217 -111.956 -31.8355 -50.0337 -39.1108 24.2204 -6.02643 8.15339 -1.77318 34.7583 3

3.0092 -1.28083 19.6931 -22.3442 -16.5685 -18.5711 -22.3404 -8.28457 -13.9967 24.1731 10.3953 20.5076 26.841

r[0]r[1]i[1]・・・r[N]

r[1]の配置と、大浦さんFFTは虚数部の符号が共役になっていることが違う。

処理速度メモ

630.583:1118.1=大浦さんFFTの計算時間:OpenCVDFT計算時間

ハン窓適用しない場合 425.723

ハン窓適用AVX2使用 503.28

無理やりfloat 408

2次元ならFastTemplateMatchingとほぼ同じで、OpenCVのcvMatchTemplateに実装されている。うーん、やっぱり素直にテンプレートマッチングでいいか。。。

BM 6.012294ms

SGBM 32.474747ms

HH 57.067048ms

VAR 189.052939ms

[1]汎用 FFT (高速 フーリエ/コサイン/サイン 変換) パッケージ: http://www.kurims.kyoto-u.ac.jp/~ooura/fft-j.html

[2]bambooflow Note 時間計測関数: http://www10.atwiki.jp/bambooflow/pages/249.html

[3]kawa0810の日記,Intel AVX を使用して SIMD 演算を試してみる: http://d.hatena.ne.jp/kawa0810/20120303/1330797281