Intel RealSense D435のキャリブレーション

Intel RealSense D435のデプスキャリブレーションの方法を調べていきます。

資料

まず目を通すべき重要な資料はこれです。

https://www.intel.com/content/dam/support/us/en/documents/emerging-technologies/intel-realsense-technology/RealSense_D400_Dyn_Calib_Quick_Start.pdf

Intelの技術資料は、まとまっているようでまとまっていません。似たような文書が散乱していて欲しい情報を得るのに、一瞬戸惑ってしまいます。

もう少し細かい情報を調べたいときに見る資料はこちらです。

https://www.intel.com/content/dam/support/us/en/documents/emerging-technologies/intel-realsense-technology/RealSense_D400_Dyn_Calib_User_Guide.pdf

Dynamic Target Tool

Dynamic Calibratorでは、外部パラメータのみをキャリブレーションします。

- RotationLeftRight, TranslationLeftRight

- RotationLeftRGB, TranslationLeftRGB

キャリブレーションパターンを紙に印刷して板に張り付けて、キャリブレーションボードを作る従来のやり方もできますが、スマホを使ってやや現代風の方法をとることが出来ます。(この記事では、これを試してみたかっただけ、とも。通常は工場出荷時にキャリブレーションされていますので、外部パラメータのキャリブレーションを試す必要はありません。)

まず、iPhoneやAndroidのアプリとして配布されているIntel RealSense Dynamic Target Toolを、スマホにダウンロードしてインストールしてください。デバイスモデルが正しく認識されれば、適切に調整されたキャリブパターンがスマホ画面に表示されます。

https://itunes.apple.com/jp/app/dynamic-target-tool/id1291448596?mt=8

Dynamic Target Tool - Google Play の Android アプリ

DynamicCalibrator

キャリブレーションツールを起動します。ターゲット有と無の2つのキャリブレーション方法を選べるようです。

> Intel.RealSense.DynamicCalbrator

ターゲット有の場合

- Use Targetにチェックを入れて、Start Calibrationを押します。

RealSenseは三脚等に固定し、カメラの前1mぐらいの空間を物理的に空けます。

- rectification phase

少し準備の間があって画面中央に青枠が表示されます。カメラからおよそ70㎝の距離(50-100cmの間)で、なおかつ、青枠の中に映る位置にスマホを持っていきます。上手くいくと、青枠が外れて透明になりますので、全部の青枠が無くなるように青枠全体をなめるようにスマホを動かしていきます。全部、透明になると次のフェーズに勝手に移行します。

- Scale calibration phase

画面中に認識されたパターンの枠が表示されますので、奥行50-100cm、画面の右から左まで、万遍なくマーカー枠が記録されるように動かしていきます。必要なデータがそろうと、勝手に終了します。

ターゲット無の場合

画面の指示に従って、センサーを適当に動かすと終了します。

キャリブレーションデータの取得

で、センサーの中に書かれたキャリブデータをファイルに落とします。

うちの子だと、カメラパラメータは以下の通りでした。RGBカメラのキャリブレーションをしなければいけませんね。

> Intel.Realsense.CustomRW -r calib.xml

CustomRW for Intel RealSense D400, Version: 2.5.2.0Calibration parameters from the device:

resolutionLeftRight: 1280 800FocalLengthLeft: 640.671387 640.275391

PrincipalPointLeft: 636.945984 387.554199

DistortionLeft: -0.058403 0.066275 0.000052 -0.000805 -0.021272FocalLengthRight: 641.304993 640.990051

PrincipalPointRight: 637.570923 388.892883

DistortionRight: -0.058802 0.067591 0.000368 -0.000935 -0.021977RotationLeftRight: 0.999986 -0.003006 0.004452

0.002983 0.999983 0.005066

-0.004467 -0.005052 0.999977

TranslationLeftRight: -49.963303 -0.013997 0.335144HasRGB: 1

resolutionRGB: 1920 1080

FocalLengthColor: 1384.542603 1385.032715

PrincipalPointColor: 961.749573 533.023682

DistortionColor: 0.000000 0.000000 0.000000 0.000000 0.000000

RotationLeftColor: 0.999967 -0.006930 0.004343

0.006935 0.999975 -0.001158

-0.004335 0.001188 0.999990

TranslationLeftColor: 14.763059 0.139163 0.053030

Open3DをIntel RealSense D435で動かすためにTestRealSense.cppをlibrealsense2に対応するよう改造する

Open3Dは、2018年3月現在v0.1です。頻繁にアップデートがあると思われますので、この記事はすぐ風化する予定です。

Intel RealSense D435は、廃版になってしまったKinect1/2の代替として期待されている低価格のデプスセンサーです。同時に発売されたD415に対し、D435はグローバルシャッター方式であるという優位性があるため、移動ロボット界隈の人の人気を集めています。

D435もOpen3DもIntel製でとても親和性が高そうです。今回の記事では、RealSense D435をOpen3Dで使えるようにしたいと思います。

Intel製だから、そのまんま動くんじゃない?

Open3DのTestコードの中に、TestRealSense.cppというのがあり、最初はこれがすんなり動いてくれるものだとばかり思っていたのですが、2つ問題がありました。1つは、Linuxに対応していないこと。2つ目は、librealsense1にしか対応していないこと。

librealsense1というのは、Intel RealSense SR300より前の古いデプスセンサー用ドライバー/SDKです。関数インターフェースの互換性もありません。そのため、D415/D435は動いてくれません。

仕方ないので、また自分で写経してみることにしました。

librealsense2のインストール

librealsense2のソースコードはこちらです。便宜的にlibrealsense2と呼んでいますが、librealsenseが正式名称のようです。適切にインストールしてください。自分の環境は、Intel CPUを使ったUbuntu 16.04LTSのPCを使用し、librealsenseはソースからビルドしました。

GitHub - IntelRealSense/librealsense: Intel® RealSense™ SDK

TestRealSense2.cppのソースコード

Caution: まだバグがあります。

1. Optionの設定をしても、White Balanceが有効になっているように見えません。

2. Ctrl+Cでプログラムを止めると、プログラムを再実行したときにstreamからデータが拾えません。USBの抜き差しが必要になります。Viewerのウィンドウをxマークで落としてください。

TestRealSense2.cpp

// ---------------------------------------------------------------------------- // - Open3D: www.open3d.org - // ---------------------------------------------------------------------------- // The MIT License (MIT) // // Copyright (c) 2018 www.open3d.org // // Permission is hereby granted, free of charge, to any person obtaining a copy // of this software and associated documentation files (the "Software"), to deal // in the Software without restriction, including without limitation the rights // to use, copy, modify, merge, publish, distribute, sublicense, and/or sell // copies of the Software, and to permit persons to whom the Software is // furnished to do so, subject to the following conditions: // // The above copyright notice and this permission notice shall be included in // all copies or substantial portions of the Software. // // THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR // IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY, // FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE // AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER // LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING // FROM, OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS // IN THE SOFTWARE. // ---------------------------------------------------------------------------- #include <iostream> #include <thread> #include <librealsense2/rs.hpp> #include <Core/Core.h> #include <Visualization/Visualization.h> // Why IO.a, Core.a, Visualization.a don't have these objects? #include <Core/Utility/FileSystem.cpp> #include <Core/Camera/PinholeCameraTrajectory.cpp> #include <Core/Geometry/TriangleMesh.cpp> #include <Core/Geometry/TriangleMeshFactory.cpp> #include <Core/Geometry/PointCloud.cpp> #include <IO/ClassIO/PointCloudIO.cpp> #include <IO/ClassIO/IJsonConvertible.cpp> #include <IO/ClassIO/IJsonConvertibleIO.cpp> using namespace three; void print_option_range(const rs2::sensor& sensor, rs2_option option_type) { // from api_how_to.h std::cout << "Supported range from option " << option_type << ":" << std::endl; rs2::option_range range = sensor.get_option_range(option_type); float default_value = range.def; float maximum_supported_value = range.max; float minimum_supported_value = range.min; float difference_to_next_value = range.step; std::cout << " Min Value : " << minimum_supported_value << std::endl; std::cout << " Max Value : " << maximum_supported_value << std::endl; std::cout << " Default Value : " << default_value << std::endl; std::cout << " Step : " << difference_to_next_value << std::endl; } void change_option(const rs2::sensor& sensor, rs2_option option_type, float requested_value) { try { sensor.set_option(option_type, requested_value); } catch(const rs2::error& e) { std::cerr << "Failed to set option " << option_type << ". (" << e.what() << ")" << std::endl; } } int main(int argc, char **args) try { rs2::context ctx; rs2::device_list devices = ctx.query_devices(); if(devices.size() == 1){ PrintInfo("There is a connected RealSense device.\n"); } else { PrintInfo("There are %d connected RealSense devices.\n", devices.size()); } if(devices.size() == 0){ return 0; } int dev_id = 0; rs2::device dev = devices[dev_id]; std::string name = "Unknown Device"; if(dev.supports(RS2_CAMERA_INFO_NAME)){ // include/h/rs_sensor.h name = dev.get_info(RS2_CAMERA_INFO_NAME); } PrintInfo("Using device %d, an %s\n", dev_id, name.c_str()); std::string sn = "########"; if(dev.supports(RS2_CAMERA_INFO_SERIAL_NUMBER)){ sn = std::string("#") + dev.get_info(RS2_CAMERA_INFO_SERIAL_NUMBER); } PrintInfo(" Serial number: %s\n", sn.c_str()); std::string fn = "########"; if(dev.supports(RS2_CAMERA_INFO_FIRMWARE_VERSION)){ fn =dev.get_info(RS2_CAMERA_INFO_FIRMWARE_VERSION); } PrintInfo(" Firmware version: %s\n\n", fn.c_str()); int index = 0; int rgb_camera = 0; int stereo_module = 0; std::vector<rs2::sensor> sensors = dev.query_sensors(); // api_how_to.h for(rs2::sensor sensor : sensors){ if(sensor.supports(RS2_CAMERA_INFO_NAME)){ PrintInfo("%d: %s\n", index, sensor.get_info(RS2_CAMERA_INFO_NAME)); if(sensor.get_info(RS2_CAMERA_INFO_NAME) == std::string("Stereo Module")){ stereo_module = index; } if(sensor.get_info(RS2_CAMERA_INFO_NAME) == std::string("RGB Camera")){ rgb_camera = index; } index++; } } if(sensors[rgb_camera].supports(RS2_OPTION_ENABLE_AUTO_EXPOSURE)){ // rs_option.h print_option_range(sensors[rgb_camera], RS2_OPTION_ENABLE_AUTO_EXPOSURE); change_option(sensors[rgb_camera], RS2_OPTION_ENABLE_AUTO_EXPOSURE, 0.0); // api_how_to.h } if(sensors[rgb_camera].supports(RS2_OPTION_EXPOSURE)){ print_option_range(sensors[rgb_camera], RS2_OPTION_EXPOSURE); change_option(sensors[rgb_camera], RS2_OPTION_EXPOSURE, 625.0); } if(sensors[rgb_camera].supports(RS2_OPTION_GAIN)){ print_option_range(sensors[rgb_camera], RS2_OPTION_GAIN); change_option(sensors[rgb_camera], RS2_OPTION_GAIN, 128.0); } if(sensors[rgb_camera].supports(RS2_OPTION_ENABLE_AUTO_WHITE_BALANCE)){ print_option_range(sensors[rgb_camera], RS2_OPTION_ENABLE_AUTO_WHITE_BALANCE); change_option(sensors[rgb_camera], RS2_OPTION_ENABLE_AUTO_WHITE_BALANCE, 0.0); } // Above, it seems doesn't work... // Decrare Realsense pipeline, encapsulating the actual device and sensors rs2::pipeline pipe; // rs_pipeline.h rs2::config cfg; // examples/measure/rs-measure.cpp cfg.enable_stream(RS2_STREAM_DEPTH, RS2_FORMAT_Z16); cfg.enable_stream(RS2_STREAM_COLOR, RS2_FORMAT_RGB8); // Start streaming with recommended configuration auto profile = pipe.start(cfg); std::this_thread::sleep_for(std::chrono::milliseconds(50)); if(sensors[stereo_module].supports(RS2_OPTION_WHITE_BALANCE)){ change_option(sensors[stereo_module], RS2_OPTION_WHITE_BALANCE, 2100.0); } auto depth_stream = profile.get_stream(RS2_STREAM_DEPTH).as<rs2::video_stream_profile>(); auto depth_intr = depth_stream.get_intrinsics(); // Calibration data PrintInfo("%d %d %.6f %.6f %.6f %.6f\n", depth_intr.width, depth_intr.height, depth_intr.fx, depth_intr.fy, depth_intr.ppx, depth_intr.ppy); for(int i=0; i<5; i++){ PrintInfo("%.6f ", depth_intr.coeffs[i]); } PrintInfo("\n\n"); auto color_stream = profile.get_stream(RS2_STREAM_COLOR).as<rs2::video_stream_profile>(); auto color_intr = color_stream.get_intrinsics(); PrintInfo("%d %d %.6f %.6f %.6f %.6f\n", color_intr.width, color_intr.height, color_intr.fx, color_intr.fy, color_intr.ppx, color_intr.ppy); for(int i=0; i<5; i++){ PrintInfo("%.6f ", color_intr.coeffs[i]); } PrintInfo("\n\n"); // get_extrinsics from api_how_to.h try { auto extrinsics = depth_stream.get_extrinsics_to(color_stream); for(int i=0; i<9; i++){ PrintInfo("%.6f ", extrinsics.rotation[i]); } PrintInfo("\n"); for(int i=0; i<3; i++){ PrintInfo("%.6f ", extrinsics.translation[i]); } PrintInfo("\n"); } catch(const std::exception& e) { std::cerr << "Failed to get extrinsics for the given streams. " << e.what() << std::endl; } auto depth_image_ptr = std::make_shared<Image>(); depth_image_ptr->PrepareImage(depth_intr.width, depth_intr.height, 1, 2); auto color_image_ptr = std::make_shared<Image>(); color_image_ptr->PrepareImage(color_intr.width, color_intr.height, 3, 1); FPSTimer timer("Realsense stream"); Visualizer depth_vis, color_vis; if(depth_vis.CreateWindow("Depth", depth_intr.width, depth_intr.height, 15, 50) == false || depth_vis.AddGeometry(depth_image_ptr) == false || color_vis.CreateWindow("Color", color_intr.width, color_intr.height, 675, 50) == false || color_vis.AddGeometry(color_image_ptr) == false) { return 0; } while(depth_vis.PollEvents() && color_vis.PollEvents()){ timer.Signal(); rs2::frameset data = pipe.wait_for_frames(); // Wait for next set of frames from the camera rs2::frame depth = data.get_depth_frame(); // Find the depth data rs2::frame color = data.get_color_frame(); // Find the color data memcpy(depth_image_ptr->data_.data(), depth.get_data(), depth_intr.width * depth_intr.height * 2); memcpy(color_image_ptr->data_.data(), color.get_data(), color_intr.width * color_intr.height * 3); depth_vis.UpdateGeometry(); color_vis.UpdateGeometry(); if(sensors[rgb_camera].supports(RS2_OPTION_WHITE_BALANCE)){ PrintInfo("\r %.2f\t", sensors[rgb_camera].get_option(RS2_OPTION_WHITE_BALANCE)); } } return 1; } catch(const rs2::error & e) { std::cerr << "RealSense error calling " << e.get_failed_function() << "(" << e.get_failed_args() << "):\n " << e.what() << std::endl; return EXIT_FAILURE; } catch(const std::exception& e) { std::cerr << e.what() << std::endl; return EXIT_FAILURE; }

CMakeを書くのが面倒(というより、書き方をよく分かってない)なので、大した量でもないのでオプションを平で書いてコンパイルします。cvlはユーザー名でworkspaceはソースを置いているディレクトリです。適当に置き換えてください。openFrameworksと共存させたい目的で、一部のライブラリ(libjsoncpp.a)を自前でインストールした物を使っています。必要なければ外してください。

g++ -std=c++14 -I/home/cvl/workspace/librealsense/include/ -I/home/cvl/workspace/Open3D/src/ -I/usr/include/eigen3/ TestRealSense.cpp /home/cvl/workspace/Open3D/build/lib/*.a `pkg-config --static --libs gl` `pkg-config --static --libs glew` -lglfw -lgomp -ljpeg /usr/local/lib/libjsoncpp.a -L/usr/local/lib -lrealsense2

2018/03/26 追記

if(Open3D_BUILD_LIBREALSENSE2) find_package(realsense2 REQUIRED) # native installed only add_executable(TestRealSense2 TestRealSense2.cpp) target_link_libraries(TestRealSense2 Visualization realsense2) set_target_properties(TestRealSense2 PROPERTIES FOLDER "Test" RUNTIME_OUTPUT_DIRECTORY "${CMAKE_RUNTIME_OUTPUT_DIRECTORY}/Test") endif(Open3D_BUILD_LIBREALSENSE2)

実行すると、

./a.out

There is a connected RealSense device.

Using device 0, an Intel RealSense 435

Serial number: #801212070***

Firmware version: 05.09.02.000: Stereo Module

1: RGB Camera

Supported range from option Enable Auto Exposure:

Min Value : 0

Max Value : 1

Default Value : 1

Step : 1

Supported range from option Exposure:

Min Value : 41

Max Value : 10000

Default Value : 166

Step : 1

Supported range from option Gain:

Min Value : 0

Max Value : 128

Default Value : 64

Step : 1

Supported range from option Enable Auto White Balance:

Min Value : 0

Max Value : 1

Default Value : 1

Step : 1

1280 720 643.699280 643.699280 640.845093 349.351471

0.000000 0.000000 0.000000 0.000000 0.0000001920 1080 1384.542603 1385.032715 961.749573 533.023682

0.000000 0.000000 0.000000 0.000000 0.0000000.999969 0.004246 -0.006592 -0.004221 0.999984 0.003856 0.006608 -0.003828 0.999971

0.014762 0.000241 -0.000011

4600.00 Realsense stream at 11.50 fps.

4600.00 Realsense stream at 16.31 fps.

4600.00 Realsense stream at 16.24 fps.

一応、ちゃんと動いているように見えます。フィードバック歓迎しますので(というかお願いしますから)、何か問題を見つけたら直して教えてください。

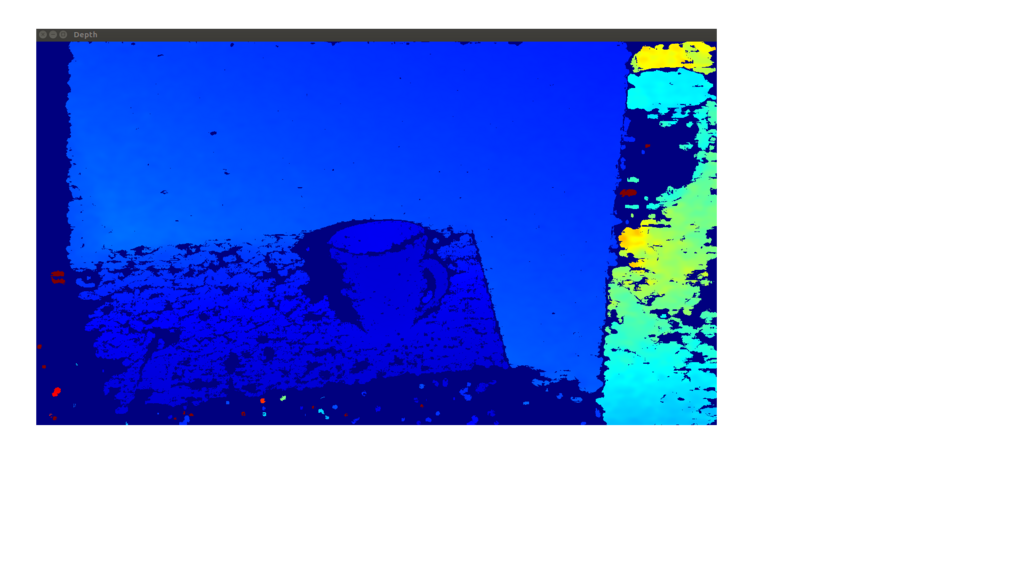

ほぼ、TestRealSense.cppをそのまま書き換えることには成功しましたが、もともとこのコードは素のデータを画面表示するだけで殆ど何もしてくれてない!Depthの値とか、Rectifyとかどうなってるのか知りたいのに!RealSenseをもっと勉強しないと、駄目ですね。

realsense2のc++コードの参考はここが良い

今日の本

東ロボの失敗?から副次的に洗い出された日本の教育の大問題の提起。

英語教育の前に日本語教育をちゃんとしないとだめですね!

- 作者: 新井紀子

- 出版社/メーカー: 東洋経済新報社

- 発売日: 2018/02/02

- メディア: 単行本

- この商品を含むブログ (7件) を見る

Open3DのPythonチュートリアルをC++に移植(その6) intaractive_visualization.py

Open3Dは、2018年3月現在v0.1です。頻繁にアップデートがあると思われますので、この記事はすぐ風化する予定です。

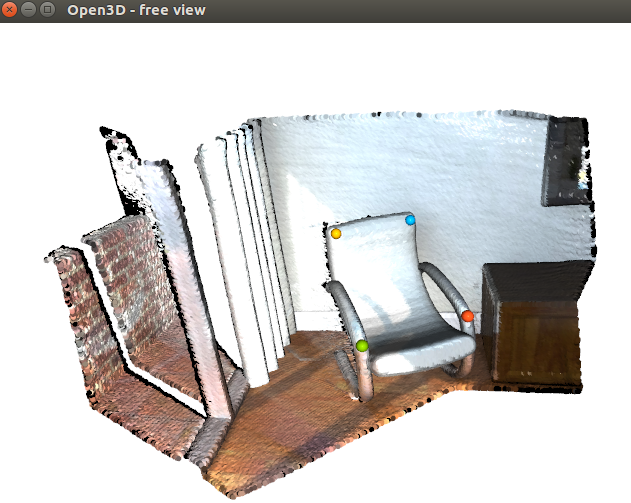

interactive_visualizationは、マウス操作によるクロップ(領域きりだし)と、ポイント選択による初期位置指定をつかった位置合わせを提供します。これも、c++のTestコードが用意されていますので、ここのソースは見る必要がありません。

python

Open3D/interactive_visualization.py at master · IntelVCL/Open3D · GitHub

c++

Open3D/ManuallyCropPointCloud.cpp at master · IntelVCL/Open3D · GitHub

Open3D/src/Tools/ManuallyAlignPointCloud at master · IntelVCL/Open3D · GitHub

// ---------------------------------------------------------------------------- // - Open3D: www.open3d.org - // ---------------------------------------------------------------------------- // The MIT License (MIT) // // Copyright (c) 2018 www.open3d.org // // Permission is hereby granted, free of charge, to any person obtaining a copy // of this software and associated documentation files (the "Software"), to deal // in the Software without restriction, including without limitation the rights // to use, copy, modify, merge, publish, distribute, sublicense, and/or sell // copies of the Software, and to permit persons to whom the Software is // furnished to do so, subject to the following conditions: // // The above copyright notice and this permission notice shall be included in // all copies or substantial portions of the Software. // // THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR // IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY, // FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE // AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER // LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING // FROM, OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS // IN THE SOFTWARE. // ---------------------------------------------------------------------------- #include <iostream> #include <memory> #include <thread> #include <Core/Core.h> #include <IO/IO.h> #include <Visualization/Visualization.h> #include <assert.h> using namespace three; void VisualizeRegistration(const three::PointCloud &source, const three::PointCloud &target, const Eigen::Matrix4d &Transformation) { std::shared_ptr<PointCloud> source_transformed_ptr(new PointCloud); std::shared_ptr<PointCloud> target_ptr(new PointCloud); *source_transformed_ptr = source; *target_ptr = target; source_transformed_ptr->Transform(Transformation); DrawGeometries({ source_transformed_ptr, target_ptr }, "Registration result"); } void DemoCropGeometry() { std::cout << "Demo for manual geometry cropping" << std::endl; std::cout << "1) Press 'Y' twice to align geometry with negative direction of y-axis" << std::endl; std::cout << "2) Press 'K' to lock screen and to switch to selection mode" << std::endl; std::cout << "3) Drag for rectangle selection," << std::endl; std::cout << " or use ctrl + left click for polygon selection" << std::endl; std::cout << "4) Press 'C' to get a selected geometry and to save it" << std::endl; std::cout << "5) Press 'F' to switch to freeview mode" << std::endl; // std::shared_ptr<PointCloud> auto pcd = CreatePointCloudFromFile("../../lib/TestData/ICP/cloud_bin_0.pcd"); DrawGeometriesWithEditing({pcd}); } std::vector<size_t>& PickPoints(std::shared_ptr<const Geometry> geom_ptr) { std::cout << std::endl; std::cout << "1) Please pick at least three correspondences using [shift + left click]" << std::endl; std::cout << " Press [shift + right click] to undo point picking" << std::endl; std::cout << "2) After picking points, press q for close the window" << std::endl; VisualizerWithEditing vis; vis.CreateWindow(); vis.AddGeometry(geom_ptr); vis.Run(); // user picks points vis.DestroyWindow(); std::cout << std::endl; return vis.GetPickedPoints(); } void DemoManualRegistration() { std::cout << "Demo for manual ICP" << std::endl; // std::shared_ptr<PointCloud> auto source = CreatePointCloudFromFile("../../lib/TestData/ICP/cloud_bin_0.pcd"); // std::shared_ptr<PointCloud> auto target = CreatePointCloudFromFile("../../lib/TestData/ICP/cloud_bin_2.pcd"); std::cout << "Visualization of two point clouds before manual alignment" << std::endl; //DrawRegistrationResult(source, target, Eigen::Matrix4d::Identity()); VisualizeRegistration(*source, *target, Eigen::Matrix4d::Identity()); // pick points from two point clouds and builds correspondences // std::vector<size_t>& auto picked_id_source = PickPoints(source); // std::vector<size_t>& auto picked_id_target = PickPoints(target); assert((picked_id_source.size() >=3) && (picked_id_target.size() >= 3)); assert(picked_id_source.size() == picked_id_target.size()); CorrespondenceSet corr; for(size_t i = 0; i < picked_id_source.size(); i++){ corr.push_back(Eigen::Vector2i(picked_id_source[i], picked_id_target[i])); } // estimate rough transformation using correspondences std::cout << "Compute a rough transformation using the correspondences given by user" << std::endl; TransformationEstimationPointToPoint p2p; // Eigen::Matrix4d auto trans_init = p2p.ComputeTransformation(*source, *target, corr); // point-to-point ICP for refinement std::cout << "Perform point-to-point ICP refinement" << std::endl; double threshold = 0.03; // 3cm distance threshold // RegistrationResult auto reg_p2p = RegistrationICP(*source, *target, threshold, trans_init, TransformationEstimationPointToPoint()); VisualizeRegistration(*source, *target, reg_p2p.transformation_); std::cout << std::endl; } int main(int argc, char *argv[]) { SetVerbosityLevel(VerbosityLevel::VerboseAlways); DemoCropGeometry(); DemoManualRegistration(); return 1; }

なかなかうまく合いません。Point-to-Point ICPが厳しいのだとは思いますが、ColoredICPかFPFHに任せてしまった方がよさそうですね。

Open3DのPythonチュートリアルをC++に移植(その5) customized_visualizer.py

Open3Dは、2018年3月現在v0.1です。頻繁にアップデートがあると思われますので、この記事はすぐ風化する予定です。

customized_visualizerは、キー入力、アニメーション、画面キャプチャなどのサンプルを提供しています。

これと類似のテストがすでにc++で用意されていますので、基本的にはこのソースは見る必要がありません。(精神衛生上、取り残しがあると気持ち悪いから、移植してみたまでです。一部手抜きをしています。)

元のpythonソースはこちら。

Open3D/customized_visualization.py at 50a31d8daaf3218b767ddbacc0db4e4f125f13d6 · IntelVCL/Open3D · GitHub

C++の参考ソースはこちらとこちら。

Open3D/TestVisualizer.cpp at 50a31d8daaf3218b767ddbacc0db4e4f125f13d6 · IntelVCL/Open3D · GitHub

Open3D/TestDepthCapture.cpp at 50a31d8daaf3218b767ddbacc0db4e4f125f13d6 · IntelVCL/Open3D · GitHub

TestCustomizedVisualizer.cpp

// ---------------------------------------------------------------------------- // - Open3D: www.open3d.org - // ---------------------------------------------------------------------------- // The MIT License (MIT) // // Copyright (c) 2018 www.open3d.org // // Permission is hereby granted, free of charge, to any person obtaining a copy // of this software and associated documentation files (the "Software"), to deal // in the Software without restriction, including without limitation the rights // to use, copy, modify, merge, publish, distribute, sublicense, and/or sell // copies of the Software, and to permit persons to whom the Software is // furnished to do so, subject to the following conditions: // // The above copyright notice and this permission notice shall be included in // all copies or substantial portions of the Software. // // THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR // IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY, // FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE // AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER // LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING // FROM, OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS // IN THE SOFTWARE. // ---------------------------------------------------------------------------- #include <iostream> #include <memory> #include <thread> #include <Core/Core.h> #include <IO/IO.h> #include <Visualization/Visualization.h> #include <string> #include <iomanip> #include <sstream> using namespace three; bool CustomDrawGeometry(const std::vector<std::shared_ptr<const PointCloud>> &pcd_ptrs) { const std::string &window_name = "Open3D"; int width = 640; int height = 480; int left = 50; int top = 50; // The following code achieves the same effect as: // bool DrawGeometries({pcd}) Visualizer visualizer; if(visualizer.CreateWindow(window_name, width, height, left, top) == false) { std::cout << "[DrawGeometries] Failed creating OpenGL window." << std::endl; return false; } for(const auto &pcd_ptr : pcd_ptrs) { if(visualizer.AddGeometry(pcd_ptr) == false) { std::cout << "[DrawGeometries] Failed addomg geometry." << std::endl; std::cout << "[DrawGeometries] Possibly due to bad geometry or wrong geometry type." << std::endl; return false; } } visualizer.Run(); visualizer.DestroyWindow(); return true; } bool CustomDrawGeometryWithRotation(const std::vector<std::shared_ptr<const PointCloud>> &pcd_ptrs) { int width = 640; int height = 480; std::vector<std::shared_ptr<const Geometry>> geom_ptrs; geom_ptrs.assign(pcd_ptrs.begin(), pcd_ptrs.end()); // return DrawGeometriesWithAnimationCallback( {geom_ptrs}, [&](Visualizer *vis) { vis->GetViewControl().Rotate(10, 0); std::this_thread::sleep_for(std::chrono::milliseconds(30)); return false; }, "Spin", width, height); } bool CustomDrawGeometryLoadOption(const std::vector<std::shared_ptr<const PointCloud>> &pcd_ptrs) { const std::string &window_name = "Open3D"; int width = 640; int height = 480; int left = 50; int top = 50; Visualizer visualizer; if(visualizer.CreateWindow(window_name, width, height, left, top) == false) { std::cout << "[DrawGeometries] Failed creating OpenGL window." << std::endl; return false; } for(const auto &pcd_ptr : pcd_ptrs) { if(visualizer.AddGeometry(pcd_ptr) == false) { std::cout << "[DrawGeometries] Failed addomg geometry." << std::endl; std::cout << "[DrawGeometries] Possibly due to bad geometry or wrong geomeetry type." << std::endl; return false; } } RenderOption ro; ReadIJsonConvertible("../../lib/TestData/renderoption.json", ro); visualizer.GetRenderOption() = ro; visualizer.Run(); visualizer.DestroyWindow(); return true; } class CustomVisualizerWithKeyCallback : public Visualizer { protected: void KeyPressCallback(GLFWwindow *window, int key, int scannode, int action, int mods) override { if(action == GLFW_RELEASE){ return; } if(key == GLFW_KEY_K){ Eigen::Vector3d background_color = Eigen::Vector3d::Zero(); this->GetRenderOption().background_color_ = background_color; } if(key == GLFW_KEY_R){ RenderOption ro; ReadIJsonConvertible("../../lib/TestData/renderoption.json", ro); this->GetRenderOption() = ro; } if(key == GLFW_KEY_COMMA){ std::string depth_filename = "depth.png"; bool do_render = true; double depth_scale = 1000.0; std::string camera_filename = "camera.json"; this->CaptureDepthImage(depth_filename, do_render, depth_scale); PinholeCameraTrajectory camera; camera.extrinsic_.resize(1); this->view_control_ptr_->ConvertToPinholeCameraParameters( camera.intrinsic_, camera.extrinsic_[0]); WriteIJsonConvertible(camera_filename, camera); //auto depth = this->CaptureDepthFloatBuffer(); //DrawGeometries({depth}, "Depth", depth->width_, depth->height_); // Segmentation falt // maybe, a best solution is to use cv::imshow() } if(key == GLFW_KEY_PERIOD){ std::string filename = "image.jpg"; bool do_render =true; this->CaptureScreenImage(filename, do_render); //auto image = this->CaptureScreenFloatBuffer(); //DrawGeometries({image}, "Image", image->width_, image->height_); } UpdateRender(); } }; bool CustomDrawGeometryWithKeyCallback(const std::vector<std::shared_ptr<const PointCloud>> &pcd_ptrs) { const std::string &window_name = "Open3D"; int width = 640; int height = 480; int left = 50; int top = 50; // The following code achieves the same effect as: // bool DrawGeometries({pcd}) CustomVisualizerWithKeyCallback visualizer; if(visualizer.CreateWindow(window_name, width, height, left, top) == false) { std::cout << "[DrawGeometries] Failed creating OpenGL window." << std::endl; return false; } for(const auto &pcd_ptr : pcd_ptrs) { if(visualizer.AddGeometry(pcd_ptr) == false) { std::cout << "[DrawGeometries] Failed addomg geometry." << std::endl; std::cout << "[DrawGeometries] Possibly due to bad geometry or wrong geometry type." << std::endl; return false; } } visualizer.Run(); visualizer.DestroyWindow(); return true; } bool CustomDrawGeometryWithCameraTrajectory(const std::vector<std::shared_ptr<const PointCloud>> &pcd_ptrs) { PinholeCameraTrajectory trajectory; ReadPinholeCameraTrajectory("../../lib/TestData/camera_trajectory.json", trajectory); std::vector<std::shared_ptr<const Geometry>> geom_ptrs; geom_ptrs.assign(pcd_ptrs.begin(), pcd_ptrs.end()); // return DrawGeometriesWithAnimationCallback({geom_ptrs}, [&](Visualizer *vis) { static int index = -1; static PinholeCameraTrajectory trajectory; if(index == -1){ // overhead ReadPinholeCameraTrajectory("../../lib/TestData/camera_trajectory.json", trajectory); } if(index >= 0){ std::cout << "Capture image " << std::setfill('0') << std::setw(5) << std::to_string(index) << std::endl; std::string dpath, cpath; std::stringstream ss1, ss2; ss1 << "TempData/depth" << std::setfill('0') << std::setw(5) << std::to_string(index) << ".png"; ss1 >> dpath; ss2 << "TempData/image" << std::setfill('0') << std::setw(5) << std::to_string(index) << ".jpg"; ss2 >> cpath; // auto depth = vis->CaptureDepthFloatBuffer(); // auto image = vis->CaptureScreenFloatBuffer(); // WriteImage(dpath, *CreateImageFromFloatImage<uint16_t>(*depth)); // int quality = 90; // WriteImage(cpath, *CreateImageFromFloatImage<uint16_t>(*image), quality); // above is not work due to mendoukusai. vis->CaptureDepthImage(dpath, false); vis->CaptureScreenImage(cpath, false); } index++; if(index < trajectory.extrinsic_.size()){ // ViewControlWithCustomAnimation & auto ctr = vis->GetViewControl(); ctr.ConvertFromPinholeCameraParameters(trajectory.intrinsic_, trajectory.extrinsic_[index]); } else { vis->RegisterAnimationCallback(nullptr); } return true; }, "Capture Animation", trajectory.intrinsic_.width_, trajectory.intrinsic_.height_); } int main(int argc, char *argv[]) { SetVerbosityLevel(VerbosityLevel::VerboseAlways); std::shared_ptr<PointCloud> pcd; pcd = CreatePointCloudFromFile("../../lib/TestData/fragment.ply"); std::cout << "1. Customized visualization to mimic DrawGeometry" << std::endl; CustomDrawGeometry({pcd}); std::cout << "2. Customized visualization with a rotating view" << std::endl; CustomDrawGeometryWithRotation({pcd}); std::cout << "3. Customized visualization showing normal rendering" << std::endl; CustomDrawGeometryLoadOption({pcd}); std::cout << "4. Customized visualization with key press callbacks" << std::endl; std::cout << " Press 'K' to change background color to black" << std::endl; std::cout << " Press 'R' to load a customized render option, showing normals" << std::endl; std::cout << " Press ',' to capture the depth buffer and show it" << std::endl; std::cout << " Press '.' to capture the screen and show it" << std::endl; CustomDrawGeometryWithKeyCallback({pcd}); std::cout << "5. Customized visualization playing a camera trajectory" << std::endl; CustomDrawGeometryWithCameraTrajectory({pcd}); return 1; }

1. DrawGeometriesの代わりにVisualizerを自前で作って単に表示

2. コールバックを使って、アニメーション表示

3. jsonファイルに保存された画面設定を読み込んで表示

4. キー入力イベントコールバックを使って、インタラクティブに表示の変更、と、画面キャプチャ

5. アニメーションをしながらコールバックして、アニメーションを画像として保存。ただし、実画面サイズとカメラ内部パラメータの幅、高さが一致していないと上手く動かない。

Open3DのPythonチュートリアルをC++に移植(その4) rgbd_integration.py

Open3Dは、2018年3月現在v0.1です。頻繁にアップデートがあると思われますので、この記事はすぐ風化する予定です。

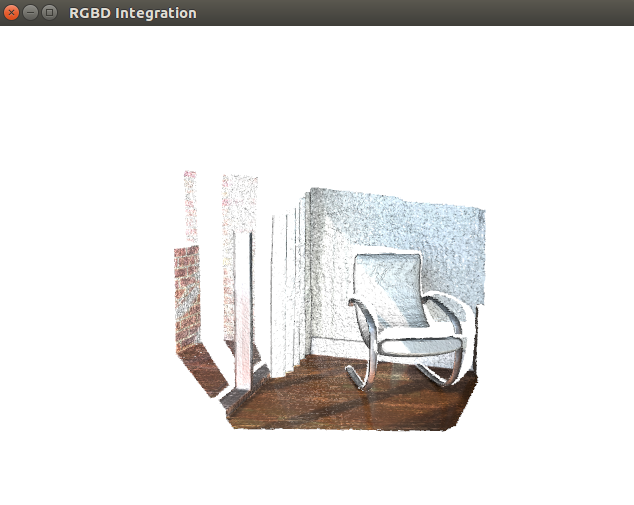

rgbd_integrationは、マージングなどの名前で知られる手法と同じで、位置合わせされた複数組の3次元点群から、無駄な点や面のない綺麗な1つのメッシュデータを生成します。

pythonチュートリアルのオリジナルはこちらです。

Open3D/rgbd_integration.py at master · IntelVCL/Open3D · GitHub

TestRGBDIntegration.cpp

// ---------------------------------------------------------------------------- // - Open3D: www.open3d.org - // ---------------------------------------------------------------------------- // The MIT License (MIT) // // Copyright (c) 2018 www.open3d.org // // Permission is hereby granted, free of charge, to any person obtaining a copy // of this software and associated documentation files (the "Software"), to deal // in the Software without restriction, including without limitation the rights // to use, copy, modify, merge, publish, distribute, sublicense, and/or sell // copies of the Software, and to permit persons to whom the Software is // furnished to do so, subject to the following conditions: // // The above copyright notice and this permission notice shall be included in // all copies or substantial portions of the Software. // // THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR // IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY, // FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE // AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER // LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING // FROM, OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS // IN THE SOFTWARE. // ---------------------------------------------------------------------------- #include <iostream> #include <memory> #include <Eigen/Dense> #include <Core/Core.h> #include <IO/IO.h> #include <Visualization/Visualization.h> #include <Core/Utility/Timer.h> #include <fstream> #include <sstream> #include <iomanip> using namespace three; typedef int Metadata[3]; class CameraPose { public: CameraPose(Metadata meta, Eigen::Matrix4d mat) { for(int i=0; i<3; i++) metadata[i] = meta[i]; pose = mat; }; friend std::ostream& operator << (std::ostream& os, const CameraPose& p); Metadata metadata; Eigen::Matrix4d pose; }; std::ostream& operator << (std::ostream& os, const CameraPose& p) { os << "Metadata : "; for(int i=0; i<3; i++){ os << p.metadata[i] << " "; } os << std::endl; os << "Pose : " << std::endl; os << p.pose << std::endl; } std::shared_ptr<std::vector<CameraPose>> ReadTrajectory(std::string filename) { std::shared_ptr<std::vector<CameraPose>> traj = std::make_shared<std::vector<CameraPose>>(); traj->clear(); std::ifstream fin; fin.open(filename, std::ios::in); if(!fin.fail()){ Metadata meta; Eigen::Matrix4d pose = Eigen::Matrix4d::Identity(); fin >> meta[0] >> meta[1] >> meta[2]; while(fin) { for(int i=0; i<4; i++){ fin >> pose(i, 0) >> pose(i,1) >> pose(i, 2) >> pose(i, 3); } traj->push_back(CameraPose(meta, pose)); fin >> meta[0] >> meta[1] >> meta[2]; } fin.close(); } else { std::cout << "Can't open " << filename << std::endl; } return traj; } void WriteTrajectory(std::vector<CameraPose>& traj, std::string filename) { std::ofstream fout; fout.open(filename, std::ios::out); for(unsigned int i=0; i<traj.size(); i++){ fout << traj[i].metadata[0] << " " << traj[i].metadata[1] << " " << traj[i].metadata[2] << std::endl; fout << traj[i].pose << std::endl; } fout.close(); } int main(int argc, char *argv[]) { SetVerbosityLevel(VerbosityLevel::VerboseAlways); bool visualization = true; // false; #ifdef _OPENMP PrintDebug("OpenMP is supported. Using %d threads.", omp_get_num_threads()); #endif std::shared_ptr<std::vector<CameraPose>> camera_poses = ReadTrajectory("../../lib/TestData/RGBD/odometry.log"); double voxel_length = 4.0 / 512.0; double sdf_trunc = 0.04; bool with_color = true; auto volume = std::shared_ptr<ScalableTSDFVolume>( new ScalableTSDFVolume(voxel_length,sdf_trunc, with_color)); for(unsigned int i=0; i<camera_poses->size(); i++){ std::cout << "Integrate " << i << "-th image into the volume." << std::endl; std::string cpath, dpath; std::stringstream ss1, ss2; ss1 << "../../lib/TestData/RGBD/color/" << std::setfill('0') << std::setw(5) << i << ".jpg"; ss1 >> cpath; ss2 << "../../lib/TestData/RGBD/depth/" << std::setfill('0') << std::setw(5) << i << ".png"; ss2 >> dpath; // std::shared_ptr<Image> auto color = CreateImageFromFile(cpath); // std::shared_ptr<Image> auto depth = CreateImageFromFile(dpath); double depth_scale = 1000; double depth_trunc = 4.0; bool convert_rgb_to_intensity = false; // std::shared_ptr<RGBDImage> auto rgbd = CreateRGBDImageFromColorAndDepth(*color, *depth, depth_scale, depth_trunc, convert_rgb_to_intensity); volume->Integrate(*rgbd, PinholeCameraIntrinsic(PinholeCameraIntrinsicParameters::PrimeSenseDefault) (*camera_poses)[i].pose.inverse()); // PinholeCameraIntrinsic::PrimeSenseDefault, old style } std::cout << "Extract a triangle mesh from the volume and visualize it." << std::endl; // std::shared_ptr<TriangleMesh> auto mesh = volume->ExtractTriangleMesh(); mesh->ComputeVertexNormals(); if(visualization){ DrawGeometries({mesh}, "RGBD Integration"); } return 0; }

> TestRGBDIntegration

Add geometry and update bounding box to [(0.6055, 0.8320, 0.6680) - (3.0742, 2.4249, 2.4512)]

拡大してみると、ちゃんとマーチングキューブ法でメッシュが貼られていることが分かります。

自前で用意したjsoncppライブラリを使いたい場合

jsoncppのcmakeをするときに-fPICオプションを使用する必要があります。

cmake -DCMAKE_CXX_FLAGS=-fPIC -DCMAKE_C_FLAGS=-fPIC ../

Today's must buy item.

- 作者: 友納正裕

- 出版社/メーカー: オーム社

- 発売日: 2018/03/03

- メディア: 単行本(ソフトカバー)

- この商品を含むブログ (2件) を見る

Open3DのPythonチュートリアルをC++に移植(その3) multiway_registration.py

Open3Dは、2018年3月現在v0.1です。頻繁にアップデートがあると思われますので、この記事はすぐ風化する予定です。

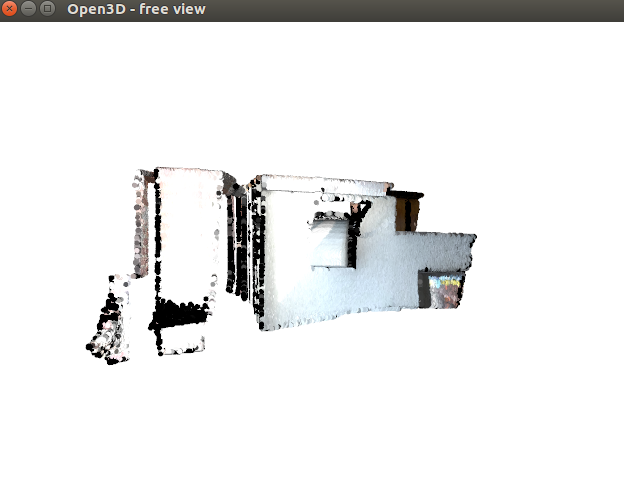

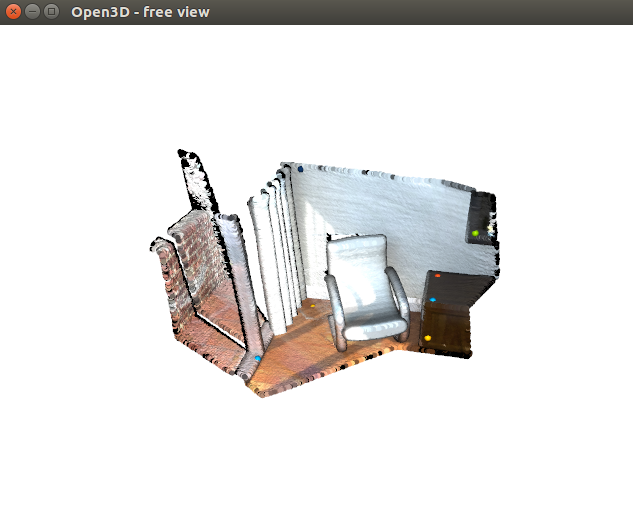

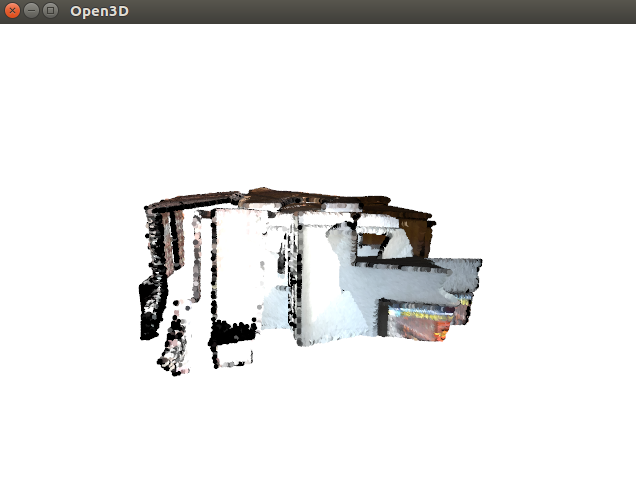

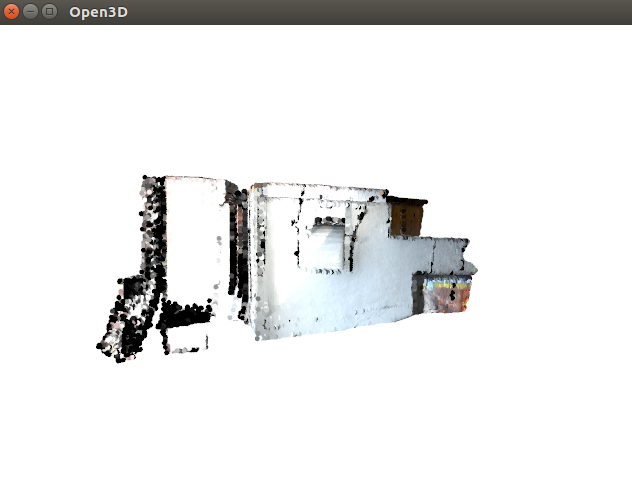

multiway_registrationは、複数の3次元点群のシーケンスから、総当たりでPoseGraphを作って全体最適化を行って、3次元形状復元をします。

pythonチュートリアルはこちら。

Open3D/multiway_registration.py at master · IntelVCL/Open3D · GitHub

その際、連番になっているところはodometryが得られるものとしてICPで相対関係を得て、連番じゃないところはLoopClosureの可能性があるとフラグを立ててPoseGraphに格納します。その後、全体最適化を行います。こんなのが、わずか数行で書けちゃうのはすごいですね。

TestMultiwayRegistration.cpp

// ---------------------------------------------------------------------------- // - Open3D: www.open3d.org - // ---------------------------------------------------------------------------- // The MIT License (MIT) // // Copyright (c) 2018 www.open3d.org // // Permission is hereby granted, free of charge, to any person obtaining a copy // of this software and associated documentation files (the "Software"), to deal // in the Software without restriction, including without limitation the rights // to use, copy, modify, merge, publish, distribute, sublicense, and/or sell // copies of the Software, and to permit persons to whom the Software is // furnished to do so, subject to the following conditions: // // The above copyright notice and this permission notice shall be included in // all copies or substantial portions of the Software. // // THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR // IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY, // FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE // AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER // LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING // FROM, OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS // IN THE SOFTWARE. // ---------------------------------------------------------------------------- #include <iostream> #include <memory> #include <Eigen/Dense> #include <Core/Core.h> #include <IO/IO.h> #include <Visualization/Visualization.h> #include <Core/Utility/Timer.h> #include <Core/Registration/PoseGraph.h> #include <Core/Registration/GlobalOptimization.h> using namespace three; int main(int argc, char *argv[]) { SetVerbosityLevel(VerbosityLevel::VerboseAlways); bool visualization = true; // false; #ifdef _OPENMP PrintDebug("OpenMP is supported. Using %d threads.", omp_get_num_threads()); #endif std::vector<std::shared_ptr<PointCloud> > pcds; for(int i=0; i<3; i++){ std::string filename = std::string("../../lib/TestData/ICP/cloud_bin_") + std::to_string(i) + std::string(".pcd"); std::shared_ptr<PointCloud> pcd = CreatePointCloudFromFile(filename); double voxel_size = 0.02; std::shared_ptr<PointCloud> downpcd = VoxelDownSample(*pcd, voxel_size); pcds.push_back(downpcd); } if(visualization){ std::vector<std::shared_ptr<const Geometry> > geoms; // cast? geoms.assign(pcds.begin(), pcds.end()); DrawGeometries({geoms}); geoms.clear(); } PoseGraph pose_graph; Eigen::Matrix4d odometry = Eigen::Matrix4d::Identity(); pose_graph.nodes_.push_back(PoseGraphNode(odometry)); int n_pcds = pcds.size(); Eigen::Matrix4d transformation_icp = Eigen::Matrix4d::Identity(); Eigen::Matrix6d information_icp = Eigen::Matrix6d::Identity(); for(int source_id = 0; source_id < n_pcds; source_id++){ for(int target_id = source_id + 1; target_id < n_pcds; target_id++){ std::shared_ptr<PointCloud> source = pcds[source_id]; std::shared_ptr<PointCloud> target = pcds[target_id]; std::cout << "Apply point-to-plane ICP" << std::endl; // RegistrationResult auto icp_coarse = RegistrationICP(*source, *target, 0.3, Eigen::Matrix4d::Identity(), TransformationEstimationPointToPlane()); // RegistrationResult auto icp_fine = RegistrationICP(*source, *target, 0.03, icp_coarse.transformation_, TransformationEstimationPointToPlane()); transformation_icp = icp_fine.transformation_; information_icp = GetInformationMatrixFromPointClouds( *source, *target, 0.03f, icp_fine.transformation_); std::cout << information_icp << std::endl; // draw_registration_result(source, target, np.identity(4)) // VisualizeRegistration(*source, *target, Eigen::Matrix4d::Identity()); std::cout << "Build PoseGraph" << std::endl; bool uncertain; if(target_id == source_id + 1){ // odometry case odometry *= transformation_icp; pose_graph.nodes_.push_back( PoseGraphNode(odometry.inverse())); uncertain = false; pose_graph.edges_.push_back( PoseGraphEdge(source_id, target_id, transformation_icp, information_icp, uncertain)); } else { // loop closure case uncertain = true; pose_graph.edges_.push_back( PoseGraphEdge(source_id, target_id, transformation_icp, information_icp, uncertain)); } } } std::cout << "Optimizing PoseGraph ..." << std::endl; GlobalOptimizationConvergenceCriteria criteria; double max_correspondence_distance = 0.03; double edge_prune_threshold = 0.25; int reference_node = 0; GlobalOptimizationOption option(max_correspondence_distance, edge_prune_threshold, reference_node); GlobalOptimizationLevenbergMarquardt optimization_method; GlobalOptimization(pose_graph, optimization_method, criteria, option); std::cout << "Transform points and display" << std::endl; for(int point_id=0; point_id<n_pcds; point_id++){ std::cout << pose_graph.nodes_[point_id].pose_ << std::endl; pcds[point_id]->Transform(pose_graph.nodes_[point_id].pose_); } if(visualization){ std::vector<std::shared_ptr<const Geometry> > geoms; // cast? geoms.assign(pcds.begin(), pcds.end()); DrawGeometries({geoms}); geoms.clear(); } return 0; }

> ./TestMultiwayRegistration

(Transform points and display)

1 -3.77438e-18 -4.33681e-19 1.38778e-17

3.16113e-18 1 0 6.93889e-18

0 0 1 0

0 0 0 1

0.840534 -0.14724 0.521367 0.350979

0.00666122 0.965095 0.261816 -0.396388

-0.541718 -0.216592 0.812176 1.72979

0 0 0 1

0.963186 -0.0751313 0.258124 0.38868

0.00126401 0.96141 0.275118 -0.4987

-0.268833 -0.264663 0.926111 1.29867

0 0 0 1

ここの書き方は怪しいので注意

DrawGeometryの引数のところ、std::vector< std::shared_ptr < const Geometry > >型を渡すことになっていて、普通にPointCloud型を渡しても良さそうなのに、上手く渡す方法が分からなかったため苦肉の策でこう書いているだけです。無駄が多いので注意。

if(visualization){ std::vector<std::shared_ptr<const Geometry> > geoms; // cast? geoms.assign(pcds.begin(), pcds.end()); DrawGeometries({geoms}); geoms.clear(); }

Open3DのPythonチュートリアルをC++に移植(その2) global_registration.py

Open3Dは、2018年3月現在v0.1です。頻繁にアップデートがあると思われますので、この記事はすぐ風化する予定です。

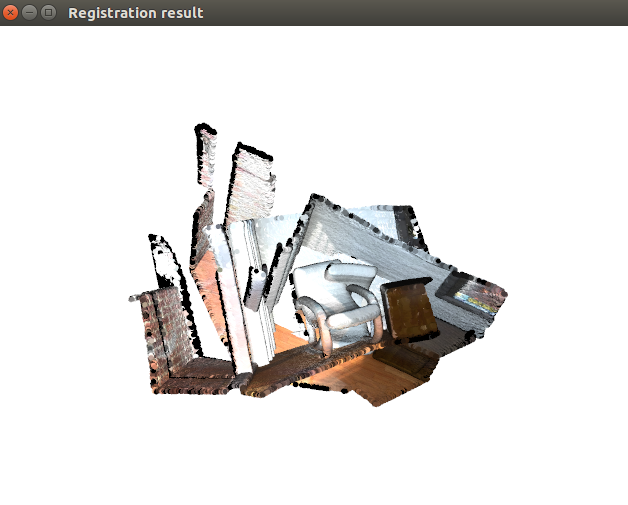

global_registrationは、初期位置合わせの要らない位置合わせ手法を提供します。

pythonチュートリアルはこちら。

Open3D/global_registration.py at master · IntelVCL/Open3D · GitHub

2つの3次元点群のそれぞれでFPFH特徴量を求め、特徴量を用いて粗々の初期位置合わせを行います。その後、ICPにて精密に相対位置を得ます。

TestGlobarlRegistration.cpp

// ---------------------------------------------------------------------------- // - Open3D: www.open3d.org - // ---------------------------------------------------------------------------- // The MIT License (MIT) // // Copyright (c) 2018 www.open3d.org // // Permission is hereby granted, free of charge, to any person obtaining a copy // of this software and associated documentation files (the "Software"), to deal // in the Software without restriction, including without limitation the rights // to use, copy, modify, merge, publish, distribute, sublicense, and/or sell // copies of the Software, and to permit persons to whom the Software is // furnished to do so, subject to the following conditions: // // The above copyright notice and this permission notice shall be included in // all copies or substantial portions of the Software. // // THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR // IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY, // FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE // AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER // LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING // FROM, OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS // IN THE SOFTWARE. // ---------------------------------------------------------------------------- #include <iostream> #include <memory> #include <Eigen/Dense> #include <Core/Core.h> #include <IO/IO.h> #include <Visualization/Visualization.h> #include <Core/Utility/Timer.h> using namespace three; void VisualizeRegistration(const three::PointCloud &source, const three::PointCloud &target, const Eigen::Matrix4d &Transformation) { std::shared_ptr<PointCloud> source_transformed_ptr(new PointCloud); std::shared_ptr<PointCloud> target_ptr(new PointCloud); *source_transformed_ptr = source; *target_ptr = target; source_transformed_ptr->Transform(Transformation); DrawGeometries({ source_transformed_ptr, target_ptr }, "Registration result"); } int main(int argc, char *argv[]) { SetVerbosityLevel(VerbosityLevel::VerboseAlways); if (argc != 3) { PrintDebug("Usage : %s [path_to_first_point_cloud] [path_to_second_point_cloud]\n", argv[0]); return 1; } bool visualization = true; // false; #ifdef _OPENMP PrintDebug("OpenMP is supported. Using %d threads.", omp_get_num_threads()); #endif std::cout << "1. Load two point clouds and disturb initial pose." << std::endl; std::shared_ptr<PointCloud> source, target; source = three::CreatePointCloudFromFile(argv[1]); target = three::CreatePointCloudFromFile(argv[2]); Eigen::Matrix4d trans_init; // = Eigen::Matrix4d::Identity(); trans_init << 0.0, 0.0, 1.0, 0.0, 1.0, 0.0, 0.0, 0.0, 0.0, 1.0, 0.0, 0.0, 0.0, 0.0, 0.0, 1.0; source->Transform(trans_init); if(visualization){ VisualizeRegistration(*source, *target, Eigen::Matrix4d::Identity()); } std::cout << "2. Downsample with a voxel size 0.05." << std::endl; std::shared_ptr<PointCloud> source_down, target_down; double voxel_size = 0.05; source_down = VoxelDownSample(*source, voxel_size); target_down = VoxelDownSample(*target, voxel_size); std::cout << "3. Estimate normal with search radius 0.1." << std::endl; double radius = 0.1; int max_nn = 30; EstimateNormals(*source_down, KDTreeSearchParamHybrid(radius, max_nn)); EstimateNormals(*target_down, KDTreeSearchParamHybrid(radius, max_nn)); std::cout << "4. Compute FPFH feature with search radius 0.25" << std::endl; std::shared_ptr<Feature> source_fpfh, target_fpfh; radius = 0.25; max_nn = 100; source_fpfh = ComputeFPFHFeature( *source_down, three::KDTreeSearchParamHybrid(radius, max_nn)); target_fpfh = ComputeFPFHFeature( *target_down, three::KDTreeSearchParamHybrid(radius, max_nn)); std::cout << "5. RANSAC registration on downsampled point clouds." << std::endl; std::cout << " Since the downsampling voxel size is 0.05, we use a liberal" << std::endl; std::cout << " distance threshold 0.075." << std::endl; std::vector<std::reference_wrapper<const CorrespondenceChecker>> correspondence_checker; // std::reference_wrapper<const CorrespondenceChecker> auto correspondence_checker_edge_length = CorrespondenceCheckerBasedOnEdgeLength(0.9); // std::reference_wrapper<const CorrespondenceChecker> auto correspondence_checker_distance = CorrespondenceCheckerBasedOnDistance(0.075); correspondence_checker.push_back(correspondence_checker_edge_length); correspondence_checker.push_back(correspondence_checker_distance); // correspondence_checker.push_back(correspondence_checker_normal); // RegistrationResult auto result_ransac = RegistrationRANSACBasedOnFeatureMatching( *source_down, *target_down, *source_fpfh, *target_fpfh, 0.075, TransformationEstimationPointToPoint(false), 4, correspondence_checker, RANSACConvergenceCriteria(4000000, 500)); std::cout << result_ransac.transformation_ << std::endl; if(visualization){ VisualizeRegistration(*source, *target, result_ransac.transformation_); } std::cout << "6. Point-to-plane ICP registration is applied on original point" << std::endl; std::cout << " clouds to refine the alignment. This time we use a strict" << std::endl; std::cout << " distance threshold 0.02." << std::endl; // RegistrationResult auto result_icp = RegistrationICP(*source, *target, 0.02, result_ransac.transformation_, TransformationEstimationPointToPlane()); std::cout << result_icp.transformation_ << std::endl; if(visualization){ VisualizeRegistration(*source, *target, result_icp.transformation_); } return 0; }

./TestGlobalRegistration ../../lib/TestData/ICP/cloud_bin_0.pcd ../../lib/TestData/ICP/cloud_bin_1.pcd

(5. Ransac Registration)

0.85476 0.00934008 -0.518939 0.586452

-0.159339 0.956279 -0.24524 0.895341

0.49396 0.292308 0.818877 -1.47391

0 0 0 1

(6. Point-to-plane ICP registration)

0.840472 0.00663037 -0.541814 0.644871

-0.147343 0.965043 -0.216752 0.809509

0.521437 0.262007 0.812069 -1.48472

0 0 0 1

FastGlobalRegistration will added to Open3d of next version.