Open3DのPythonチュートリアルをC++に移植(その4) rgbd_integration.py

Open3Dは、2018年3月現在v0.1です。頻繁にアップデートがあると思われますので、この記事はすぐ風化する予定です。

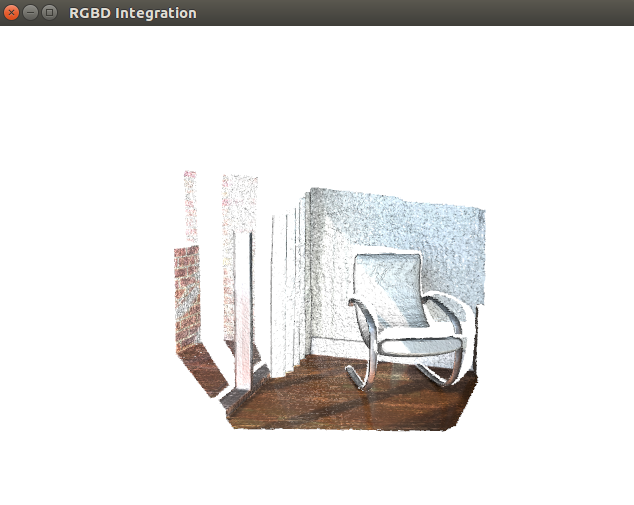

rgbd_integrationは、マージングなどの名前で知られる手法と同じで、位置合わせされた複数組の3次元点群から、無駄な点や面のない綺麗な1つのメッシュデータを生成します。

pythonチュートリアルのオリジナルはこちらです。

Open3D/rgbd_integration.py at master · IntelVCL/Open3D · GitHub

TestRGBDIntegration.cpp

// ---------------------------------------------------------------------------- // - Open3D: www.open3d.org - // ---------------------------------------------------------------------------- // The MIT License (MIT) // // Copyright (c) 2018 www.open3d.org // // Permission is hereby granted, free of charge, to any person obtaining a copy // of this software and associated documentation files (the "Software"), to deal // in the Software without restriction, including without limitation the rights // to use, copy, modify, merge, publish, distribute, sublicense, and/or sell // copies of the Software, and to permit persons to whom the Software is // furnished to do so, subject to the following conditions: // // The above copyright notice and this permission notice shall be included in // all copies or substantial portions of the Software. // // THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR // IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY, // FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE // AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER // LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING // FROM, OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS // IN THE SOFTWARE. // ---------------------------------------------------------------------------- #include <iostream> #include <memory> #include <Eigen/Dense> #include <Core/Core.h> #include <IO/IO.h> #include <Visualization/Visualization.h> #include <Core/Utility/Timer.h> #include <fstream> #include <sstream> #include <iomanip> using namespace three; typedef int Metadata[3]; class CameraPose { public: CameraPose(Metadata meta, Eigen::Matrix4d mat) { for(int i=0; i<3; i++) metadata[i] = meta[i]; pose = mat; }; friend std::ostream& operator << (std::ostream& os, const CameraPose& p); Metadata metadata; Eigen::Matrix4d pose; }; std::ostream& operator << (std::ostream& os, const CameraPose& p) { os << "Metadata : "; for(int i=0; i<3; i++){ os << p.metadata[i] << " "; } os << std::endl; os << "Pose : " << std::endl; os << p.pose << std::endl; } std::shared_ptr<std::vector<CameraPose>> ReadTrajectory(std::string filename) { std::shared_ptr<std::vector<CameraPose>> traj = std::make_shared<std::vector<CameraPose>>(); traj->clear(); std::ifstream fin; fin.open(filename, std::ios::in); if(!fin.fail()){ Metadata meta; Eigen::Matrix4d pose = Eigen::Matrix4d::Identity(); fin >> meta[0] >> meta[1] >> meta[2]; while(fin) { for(int i=0; i<4; i++){ fin >> pose(i, 0) >> pose(i,1) >> pose(i, 2) >> pose(i, 3); } traj->push_back(CameraPose(meta, pose)); fin >> meta[0] >> meta[1] >> meta[2]; } fin.close(); } else { std::cout << "Can't open " << filename << std::endl; } return traj; } void WriteTrajectory(std::vector<CameraPose>& traj, std::string filename) { std::ofstream fout; fout.open(filename, std::ios::out); for(unsigned int i=0; i<traj.size(); i++){ fout << traj[i].metadata[0] << " " << traj[i].metadata[1] << " " << traj[i].metadata[2] << std::endl; fout << traj[i].pose << std::endl; } fout.close(); } int main(int argc, char *argv[]) { SetVerbosityLevel(VerbosityLevel::VerboseAlways); bool visualization = true; // false; #ifdef _OPENMP PrintDebug("OpenMP is supported. Using %d threads.", omp_get_num_threads()); #endif std::shared_ptr<std::vector<CameraPose>> camera_poses = ReadTrajectory("../../lib/TestData/RGBD/odometry.log"); double voxel_length = 4.0 / 512.0; double sdf_trunc = 0.04; bool with_color = true; auto volume = std::shared_ptr<ScalableTSDFVolume>( new ScalableTSDFVolume(voxel_length,sdf_trunc, with_color)); for(unsigned int i=0; i<camera_poses->size(); i++){ std::cout << "Integrate " << i << "-th image into the volume." << std::endl; std::string cpath, dpath; std::stringstream ss1, ss2; ss1 << "../../lib/TestData/RGBD/color/" << std::setfill('0') << std::setw(5) << i << ".jpg"; ss1 >> cpath; ss2 << "../../lib/TestData/RGBD/depth/" << std::setfill('0') << std::setw(5) << i << ".png"; ss2 >> dpath; // std::shared_ptr<Image> auto color = CreateImageFromFile(cpath); // std::shared_ptr<Image> auto depth = CreateImageFromFile(dpath); double depth_scale = 1000; double depth_trunc = 4.0; bool convert_rgb_to_intensity = false; // std::shared_ptr<RGBDImage> auto rgbd = CreateRGBDImageFromColorAndDepth(*color, *depth, depth_scale, depth_trunc, convert_rgb_to_intensity); volume->Integrate(*rgbd, PinholeCameraIntrinsic(PinholeCameraIntrinsicParameters::PrimeSenseDefault) (*camera_poses)[i].pose.inverse()); // PinholeCameraIntrinsic::PrimeSenseDefault, old style } std::cout << "Extract a triangle mesh from the volume and visualize it." << std::endl; // std::shared_ptr<TriangleMesh> auto mesh = volume->ExtractTriangleMesh(); mesh->ComputeVertexNormals(); if(visualization){ DrawGeometries({mesh}, "RGBD Integration"); } return 0; }

> TestRGBDIntegration

Add geometry and update bounding box to [(0.6055, 0.8320, 0.6680) - (3.0742, 2.4249, 2.4512)]

拡大してみると、ちゃんとマーチングキューブ法でメッシュが貼られていることが分かります。

自前で用意したjsoncppライブラリを使いたい場合

jsoncppのcmakeをするときに-fPICオプションを使用する必要があります。

cmake -DCMAKE_CXX_FLAGS=-fPIC -DCMAKE_C_FLAGS=-fPIC ../

Today's must buy item.

- 作者: 友納正裕

- 出版社/メーカー: オーム社

- 発売日: 2018/03/03

- メディア: 単行本(ソフトカバー)

- この商品を含むブログ (2件) を見る