Open3DのReconstruction systemをC++に移植(その3-1 integrate_scene)

integrate_scene.h

#pragma once // Open3D: www.open3d.org // The MIT License (MIT) // See license file or visit www.open3d.org for details #include <Core/Core.h> #include <IO/IO.h> #include <Visualization/Visualization.h> void integrate_scene_main(std::string path_dataset, std::string path_intrinsic);

integrate_scene.cpp

#include "integrate_scene.h" #include "common_py.h" #include <Eigen/Dense> extern std::string template_global_posegraph_optimized; extern std::string template_fragment_posegraph_optimized; extern std::string template_global_mesh; extern three::PinholeCameraIntrinsic read_pinhole_camera_intrinsic(const std::string &filename); void scalable_integrate_rgb_frames(std::string path_dataset, three::PinholeCameraIntrinsic intrinsic, bool draw_result = false) { std::shared_ptr<std::vector<std::string>> color_files; std::shared_ptr<std::vector<std::string>> depth_files; get_rgbd_file_lists(color_files, depth_files, path_dataset); int n_files = static_cast<int>(color_files->size()); int n_frames_per_fragment = 100; int n_fragments = static_cast<int>(std::ceil(float(n_files) / n_frames_per_fragment)); double voxel_length = 3.0 / 512.0; double sdf_trunc = 0.04; bool with_color = true; three::ScalableTSDFVolume volume = three::ScalableTSDFVolume(voxel_length, sdf_trunc, with_color); three::PoseGraph pose_graph_fragment; three::ReadIJsonConvertibleFromJSON(path_dataset + template_global_posegraph_optimized, pose_graph_fragment); for (int fragment_id = 0; fragment_id < pose_graph_fragment.nodes_.size(); fragment_id++) { three::PoseGraph pose_graph_rgbd; std::string filename(path_dataset + template_fragment_posegraph_optimized); sprintf((char*)filename.data(), (path_dataset + template_fragment_posegraph_optimized).c_str(), fragment_id); three::ReadIJsonConvertibleFromJSON(filename, pose_graph_rgbd); for (int frame_id = 0; frame_id < pose_graph_rgbd.nodes_.size(); frame_id++) { int frame_id_abs = fragment_id * n_frames_per_fragment + frame_id; std::cout << "Fragment " << fragment_id << " / " << n_fragments - 1 << " :: integrate rgbd frame " << frame_id_abs << " (" << frame_id + 1 << " of " << pose_graph_rgbd.nodes_.size() << ")." << std::endl; std::shared_ptr<three::Image> color = std::make_shared<three::Image>(); std::shared_ptr<three::Image> depth = std::make_shared<three::Image>(); three::ReadImage((*color_files)[frame_id_abs], *color); three::ReadImage((*depth_files)[frame_id_abs], *depth); double depth_trunc = 3.0; bool convert_rgb_to_intensity = false; std::shared_ptr<three::RGBDImage> rgbd = std::make_shared<three::RGBDImage>(); rgbd = three::CreateRGBDImageFromColorAndDepth(*color, *depth, 1000.0, depth_trunc, convert_rgb_to_intensity); Eigen::Matrix4d pose = pose_graph_fragment.nodes_[fragment_id].pose_ * pose_graph_rgbd.nodes_[frame_id].pose_; volume.Integrate(*rgbd, intrinsic, pose.inverse()); } } std::shared_ptr<three::TriangleMesh> mesh = volume.ExtractTriangleMesh(); mesh->ComputeVertexNormals(); if (draw_result) { three::DrawGeometries({ mesh }); } std::string mesh_name = path_dataset + template_global_mesh; three::WriteTriangleMeshToPLY(mesh_name, *mesh, false, true); } void integrate_scene_main(std::string path_dataset, std::string path_intrinsic) { std::cout << "integrate the whole RGBD sequence using estimated camera pose" << std::endl; std::cout << "path to the dataset " << path_dataset << std::endl; std::cout << "path to the RGBD camera intrinsic " << path_intrinsic << std::endl; three::PinholeCameraIntrinsic intrinsic; if (!path_intrinsic.empty()) { intrinsic = read_pinhole_camera_intrinsic(path_intrinsic); } else { intrinsic = three::PinholeCameraIntrinsic( three::PinholeCameraIntrinsicParameters::PrimeSenseDefault); } scalable_integrate_rgb_frames(path_dataset, intrinsic); }

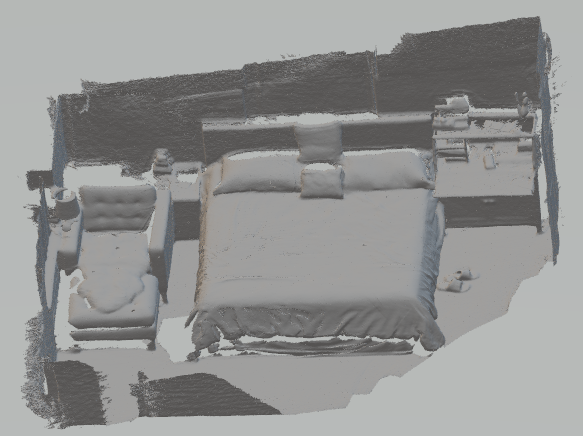

実行結果

Python版の出力サイズと微妙に異なるので、何か間違えているのかも。