Open3DのReconstruction systemをC++に移植(その3-1 integrate_scene)

integrate_scene.h

#pragma once // Open3D: www.open3d.org // The MIT License (MIT) // See license file or visit www.open3d.org for details #include <Core/Core.h> #include <IO/IO.h> #include <Visualization/Visualization.h> void integrate_scene_main(std::string path_dataset, std::string path_intrinsic);

integrate_scene.cpp

#include "integrate_scene.h" #include "common_py.h" #include <Eigen/Dense> extern std::string template_global_posegraph_optimized; extern std::string template_fragment_posegraph_optimized; extern std::string template_global_mesh; extern three::PinholeCameraIntrinsic read_pinhole_camera_intrinsic(const std::string &filename); void scalable_integrate_rgb_frames(std::string path_dataset, three::PinholeCameraIntrinsic intrinsic, bool draw_result = false) { std::shared_ptr<std::vector<std::string>> color_files; std::shared_ptr<std::vector<std::string>> depth_files; get_rgbd_file_lists(color_files, depth_files, path_dataset); int n_files = static_cast<int>(color_files->size()); int n_frames_per_fragment = 100; int n_fragments = static_cast<int>(std::ceil(float(n_files) / n_frames_per_fragment)); double voxel_length = 3.0 / 512.0; double sdf_trunc = 0.04; bool with_color = true; three::ScalableTSDFVolume volume = three::ScalableTSDFVolume(voxel_length, sdf_trunc, with_color); three::PoseGraph pose_graph_fragment; three::ReadIJsonConvertibleFromJSON(path_dataset + template_global_posegraph_optimized, pose_graph_fragment); for (int fragment_id = 0; fragment_id < pose_graph_fragment.nodes_.size(); fragment_id++) { three::PoseGraph pose_graph_rgbd; std::string filename(path_dataset + template_fragment_posegraph_optimized); sprintf((char*)filename.data(), (path_dataset + template_fragment_posegraph_optimized).c_str(), fragment_id); three::ReadIJsonConvertibleFromJSON(filename, pose_graph_rgbd); for (int frame_id = 0; frame_id < pose_graph_rgbd.nodes_.size(); frame_id++) { int frame_id_abs = fragment_id * n_frames_per_fragment + frame_id; std::cout << "Fragment " << fragment_id << " / " << n_fragments - 1 << " :: integrate rgbd frame " << frame_id_abs << " (" << frame_id + 1 << " of " << pose_graph_rgbd.nodes_.size() << ")." << std::endl; std::shared_ptr<three::Image> color = std::make_shared<three::Image>(); std::shared_ptr<three::Image> depth = std::make_shared<three::Image>(); three::ReadImage((*color_files)[frame_id_abs], *color); three::ReadImage((*depth_files)[frame_id_abs], *depth); double depth_trunc = 3.0; bool convert_rgb_to_intensity = false; std::shared_ptr<three::RGBDImage> rgbd = std::make_shared<three::RGBDImage>(); rgbd = three::CreateRGBDImageFromColorAndDepth(*color, *depth, 1000.0, depth_trunc, convert_rgb_to_intensity); Eigen::Matrix4d pose = pose_graph_fragment.nodes_[fragment_id].pose_ * pose_graph_rgbd.nodes_[frame_id].pose_; volume.Integrate(*rgbd, intrinsic, pose.inverse()); } } std::shared_ptr<three::TriangleMesh> mesh = volume.ExtractTriangleMesh(); mesh->ComputeVertexNormals(); if (draw_result) { three::DrawGeometries({ mesh }); } std::string mesh_name = path_dataset + template_global_mesh; three::WriteTriangleMeshToPLY(mesh_name, *mesh, false, true); } void integrate_scene_main(std::string path_dataset, std::string path_intrinsic) { std::cout << "integrate the whole RGBD sequence using estimated camera pose" << std::endl; std::cout << "path to the dataset " << path_dataset << std::endl; std::cout << "path to the RGBD camera intrinsic " << path_intrinsic << std::endl; three::PinholeCameraIntrinsic intrinsic; if (!path_intrinsic.empty()) { intrinsic = read_pinhole_camera_intrinsic(path_intrinsic); } else { intrinsic = three::PinholeCameraIntrinsic( three::PinholeCameraIntrinsicParameters::PrimeSenseDefault); } scalable_integrate_rgb_frames(path_dataset, intrinsic); }

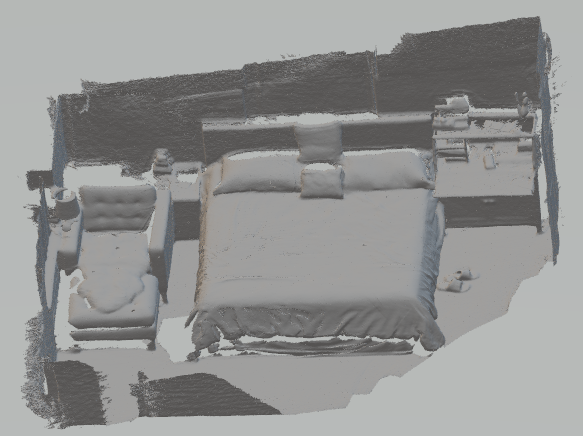

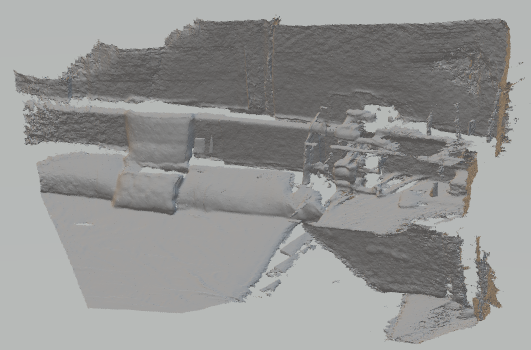

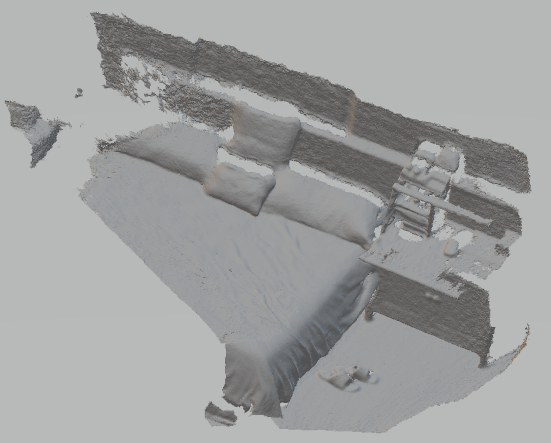

実行結果

Python版の出力サイズと微妙に異なるので、何か間違えているのかも。

Open3DのReconstruction systemをC++に移植(その2-1 register_fragment)

register_fragments.h

#pragma once // Open3D: www.open3d.org // The MIT License (MIT) // See license file or visit www.open3d.org for details #include <Core/Core.h> #include <IO/IO.h> #include <Visualization/Visualization.h> void register_fragments_main(std::string path_dataset_);

register_fragments.cpp

#include "register_fragments.h" #include "common_py.h" #include "optimize_posegraph.h" #include <Core/Registration/PoseGraph.h> #include <Core/Registration/FastGlobalRegistration.h> #include <Core/Registration/ColoredICP.h> #include <Eigen/Dense> ///////////////////////// // some global parameters for the global registration ///////////////////////// extern int n_frames_per_fragment; extern int n_keyframes_per_n_frame; ////////////////////////// // file related ////////////////////////// extern std::string folder_fragment; extern std::string template_fragment_posegraph; extern std::string template_fragment_posegraph_optimized; extern std::string template_fragment_mesh; extern std::string folder_scene; extern std::string template_global_posegraph; extern std::string template_global_posegraph_optimized; extern std::string template_global_mesh; std::tuple<std::shared_ptr<three::PointCloud>, std::shared_ptr<three::Feature>> preprocess_point_cloud(three::PointCloud& pcd) { std::shared_ptr<three::PointCloud> pcd_down = std::make_shared<three::PointCloud>(); pcd_down = three::VoxelDownSample(pcd, 0.05); double radius = 0.1; int max_nn = 30; three::EstimateNormals(*pcd_down, three::KDTreeSearchParamHybrid(radius, max_nn)); radius = 0.25; max_nn = 100; std::shared_ptr<three::Feature> pcd_fpfh = std::make_shared<three::Feature>(); pcd_fpfh = three::ComputeFPFHFeature(*pcd_down, three::KDTreeSearchParamHybrid(radius, max_nn)); return std::tuple<std::shared_ptr<three::PointCloud>, std::shared_ptr<three::Feature>>(pcd_down, pcd_fpfh); } std::tuple<bool, three::RegistrationResult> register_point_cloud_fpfh(three::PointCloud& source, three::PointCloud& target, three::Feature& source_fpfh, three::Feature& target_fpfh) { double maximum_correspondence_distance = 0.07; three::RegistrationResult result = three::FastGlobalRegistration( source, target, source_fpfh, target_fpfh, three::FastGlobalRegistrationOption(1.399999999999911, false, true, maximum_correspondence_distance)); if (result.transformation_.trace() == 4.0) { return std::tuple<bool, three::RegistrationResult>(false, Eigen::Matrix4d::Identity()); } return std::tuple<bool, three::RegistrationResult>(true, result); } void draw_registration_result(three::PointCloud& source, three::PointCloud& target, Eigen::Matrix4d transformation) { std::shared_ptr<three::PointCloud> source_temp = std::make_shared<three::PointCloud>(source); std::shared_ptr<three::PointCloud> target_temp = std::make_shared<three::PointCloud>(target); source_temp->PaintUniformColor(Eigen::Vector3d(1., 0.706, 0.)); target_temp->PaintUniformColor(Eigen::Vector3d(0., 0.651, 0.929)); source_temp->Transform(transformation); three::DrawGeometries({ source_temp, target_temp }); } std::tuple<bool, Eigen::Matrix4d> compute_initial_registartion(int s, int t, three::PointCloud& source_down, three::PointCloud& target_down, three::Feature& source_fpfh, three::Feature& target_fpfh, std::string path_dataset, bool draw_result = false) { Eigen::Matrix4d transformation; if (t == s + 1) { // odomety case std::cout << "Using RGBD odometry" << std::endl; std::string filename(path_dataset + template_fragment_posegraph_optimized); sprintf((char*)filename.data(), (path_dataset + template_fragment_posegraph_optimized).c_str(), s); three::PoseGraph pose_graph_frag; three::ReadIJsonConvertibleFromJSON(filename, pose_graph_frag); int n_nodes = static_cast<int>(pose_graph_frag.nodes_.size()); transformation = pose_graph_frag.nodes_[n_nodes - 1].pose_.inverse(); std::cout << pose_graph_frag.nodes_[0].pose_ << std::endl; std::cout << transformation << std::endl; } else { // loop closure case std::cout << "register_point_cloud_fpfh" << std::endl; auto ret = register_point_cloud_fpfh( source_down, target_down, source_fpfh, target_fpfh); bool success_ransac = std::get<0>(ret); three::RegistrationResult result_ransac = std::get<1>(ret); if (!success_ransac) { std::cout << "No reasonable solution. Skip this pair" << std::endl; return std::tuple<bool, Eigen::Matrix4d>(false, Eigen::Matrix4d::Identity()); } else { transformation = result_ransac.transformation_; } std::cout << transformation << std::endl; } if (draw_result) { draw_registration_result(source_down, target_down, transformation); } return std::tuple<bool, Eigen::Matrix4d>(true, transformation); } // colored pointcloud registration // This is implementation of following paper // J. Park, Q.-Y. Zhou, V. Koltun, // Colored Point Cloud Registration Revisited, ICCV 2017 std::tuple<Eigen::Matrix4d, Eigen::Matrix6d> register_colored_point_cloud_icp(three::PointCloud& source, three::PointCloud& target, Eigen::Matrix4d init_transformation = Eigen::Matrix4d::Identity(), std::vector<double> voxel_radius = std::vector<double>({ 0.05, 0.025, 0.0125 }), std::vector<int> max_iter = std::vector<int>({ 50, 30, 14 }), bool draw_result = false) { Eigen::Matrix4d current_transformation = init_transformation; three::RegistrationResult result_icp; for (int scale = 0; scale < max_iter.size(); scale++) { // multi-scale approach int iter = max_iter[scale]; double radius = voxel_radius[scale]; std::cout << "radius " << radius << std::endl; std::shared_ptr<three::PointCloud> source_down = three::VoxelDownSample(source, radius); std::shared_ptr<three::PointCloud> target_down = three::VoxelDownSample(target, radius); double radius_n = radius * 2; int max_nn = 30; three::EstimateNormals(*source_down, three::KDTreeSearchParamHybrid(radius_n, max_nn)); if (0) { // print(np.assarray(source_down.normals)) for (int i = 0; i < source_down->normals_.size(); i++) { std::cout << source_down->normals_[i] << " "; } std::cout << std::endl; } three::EstimateNormals(*target_down, three::KDTreeSearchParamHybrid(radius_n, max_nn)); double relative_fitness = 1e-6; double relative_rmse = 1e-6; int max_iteration = iter; result_icp = three::RegistrationColoredICP(*source_down, *target_down, radius, current_transformation, three::ICPConvergenceCriteria(relative_fitness, relative_rmse, max_iteration)); current_transformation = result_icp.transformation_; } Eigen::Matrix6d information_matrix = three::GetInformationMatrixFromPointClouds( source, target, 0.07, result_icp.transformation_); if (draw_result) { draw_registration_result_original_color(source, target, result_icp.transformation_); } return std::tuple<Eigen::Matrix4d, Eigen::Matrix6d>(result_icp.transformation_, information_matrix); } std::tuple<bool, Eigen::Matrix4d, Eigen::Matrix6d> local_refinement(int s, int t, three::PointCloud& source, three::PointCloud& target, Eigen::Matrix4d transformation_init, bool draw_result = false) { Eigen::Matrix4d transformation; Eigen::Matrix6d information; if (t == s + 1) { // odometry case std::cout << "register_point_cloud_icp" << std::endl; std::vector<double> voxel_radius({ 0.0125 }); std::vector<int> max_iter({ 30 }); auto ret = register_colored_point_cloud_icp( source, target, transformation_init, voxel_radius, max_iter); transformation = std::get<0>(ret); information = std::get<1>(ret); } else { // loop closure case std::cout << "register_colored_point_cloud" << std::endl; auto ret = register_colored_point_cloud_icp( source, target, transformation_init); transformation = std::get<0>(ret); information = std::get<1>(ret); } bool success_local = false; if ((information(5, 5) / std::min(source.points_.size(), target.points_.size())) > 0.3) { success_local = true; } if (draw_result) { draw_registration_result_original_color( source, target, transformation); } return std::tuple<bool, Eigen::Matrix4d, Eigen::Matrix6d>(success_local, transformation, information); } std::tuple<Eigen::Matrix4d, three::PoseGraph> update_odometry_posegraph(int s, int t, Eigen::Matrix4d transformation, Eigen::Matrix6d information, Eigen::Matrix4d odometry, three::PoseGraph& pose_graph) { std::cout << "Update PoseGraph" << std::endl; if (t == s + 1) { // odometry case odometry = transformation * odometry; Eigen::Matrix4d odometry_inv = odometry.inverse(); pose_graph.nodes_.push_back(three::PoseGraphNode(odometry_inv)); bool uncertain = false; pose_graph.edges_.push_back( three::PoseGraphEdge(s, t, transformation, information, uncertain)); } else { // loop closure case bool uncertain = true; pose_graph.edges_.push_back( three::PoseGraphEdge(s, t, transformation, information, uncertain)); } return std::tuple<Eigen::Matrix4d, three::PoseGraph>(odometry, pose_graph); } void register_point_cloud(std::string path_dataset, std::vector<std::string>& ply_file_names, bool draw_result = false) { three::PoseGraph pose_graph = three::PoseGraph(); Eigen::Matrix4d odometry = Eigen::Matrix4d::Identity(); pose_graph.nodes_.push_back(three::PoseGraphNode(odometry)); Eigen::Matrix6d info = Eigen::Matrix6d::Identity(); int n_files = static_cast<int>(ply_file_names.size()); for (int s = 0; s < n_files; s++) { for (int t = s + 1; t < n_files; t++) { std::shared_ptr<three::PointCloud> source = std::make_shared<three::PointCloud>(); std::shared_ptr<three::PointCloud> target = std::make_shared<three::PointCloud>(); std::cout << "reading " << ply_file_names[s] << " ..."; bool ret = three::ReadPointCloud(ply_file_names[s], *source); std::cout << "reading " << ply_file_names[s] << " ..."; ret = three::ReadPointCloud(ply_file_names[t], *target); auto ret_s = preprocess_point_cloud(*source); std::shared_ptr<three::PointCloud> source_down = std::get<0>(ret_s); std::shared_ptr<three::Feature> source_fpfh = std::get<1>(ret_s); auto ret_t = preprocess_point_cloud(*target); std::shared_ptr<three::PointCloud> target_down = std::get<0>(ret_t); std::shared_ptr<three::Feature> target_fpfh = std::get<1>(ret_t); auto ret_c = compute_initial_registartion( s, t, *source_down, *target_down, *source_fpfh, *target_fpfh, path_dataset, draw_result); bool success_global = std::get<0>(ret_c); Eigen::Matrix4d transformation_init = std::get<1>(ret_c); if (!success_global) { continue; } //std::cout << "success global" << s << " , " << t << std::endl; auto ret_l = local_refinement(s, t, *source, *target, transformation_init, draw_result); bool success_local = std::get<0>(ret_l); Eigen::Matrix4d transformation_icp = std::get<1>(ret_l); Eigen::Matrix6d information_icp = std::get<2>(ret_l); if (!success_local) { continue; } //std::cout << "success local" << s << " , " << t << std::endl; auto ret_u = update_odometry_posegraph(s, t, transformation_icp, information_icp, odometry, pose_graph); odometry = std::get<0>(ret_u); // pose_graph = std::get<1>(ret_u); // std::cout << pose_graph << std::endl; } } // three::WritePoseGraph(path_dataset + template_global_posegraph, pose_graph); three::WriteIJsonConvertibleToJSON(path_dataset + template_global_posegraph, pose_graph); } void register_fragments_main(std::string path_dataset) { three::SetVerbosityLevel(three::VerbosityLevel::VerboseDebug); std::shared_ptr<std::vector<std::string>> ply_file_names = get_file_list(path_dataset + folder_fragment, ".ply"); make_folder(path_dataset + folder_scene); register_point_cloud(path_dataset, *ply_file_names); optimize_posegraph_for_scene(path_dataset); }

Open3DのReconstruction systemをC++に移植(その1-3 make_fragment)

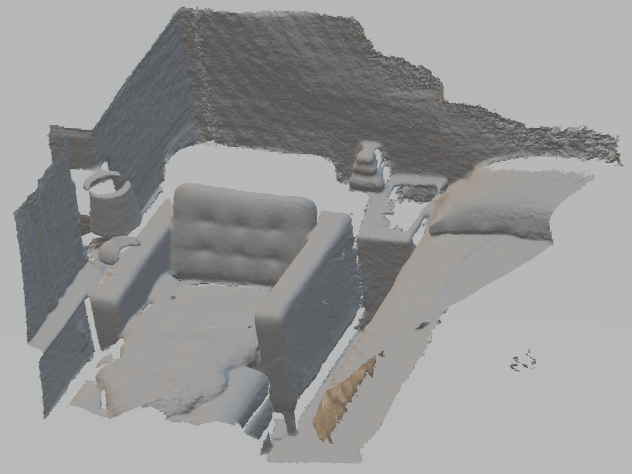

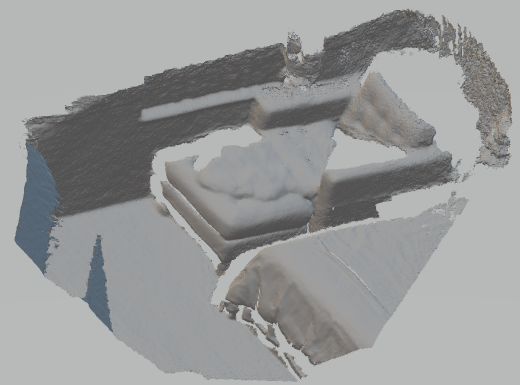

openFrameworksの呼び出し部分の例と実行結果。

ofApp.cpp

// Open3D: www.open3d.org // The MIT License (MIT) // See license file or visit www.open3d.org for details #include "ofApp.h" #include "make_fragments.h" #pragma comment(lib, "Open3D.lib") //#pragma comment(lib, "Core.lib") //#pragma comment(lib, "IO.lib") //#pragma comment(lib, "Visualization.lib") #pragma comment(lib, "jsoncpp.lib") #pragma comment(lib, "jpeg.lib") #pragma comment(lib, "tinyfiledialogs.lib") #pragma comment(lib, "png.lib") #pragma comment(lib, "opencv_core400.lib") #pragma comment(lib, "opencv_features2d400.lib") #pragma comment(lib, "opencv_highgui400.lib") #pragma comment(lib, "opencv_calib3d400.lib") //-------------------------------------------------------------- void ofApp::setup() { make_fragments_main("D:/workspace/016", ""); register_fragments_main("D:/workspace/016"); integrate_scene_main("D:/workspace/016", ""); } //-------------------------------------------------------------- void ofApp::update() { } //-------------------------------------------------------------- void ofApp::draw() { } //-------------------------------------------------------------- void ofApp::keyPressed(int key) { } //-------------------------------------------------------------- void ofApp::keyReleased(int key) { } //-------------------------------------------------------------- void ofApp::mouseMoved(int x, int y) { } //-------------------------------------------------------------- void ofApp::mouseDragged(int x, int y, int button) { } //-------------------------------------------------------------- void ofApp::mousePressed(int x, int y, int button) { } //-------------------------------------------------------------- void ofApp::mouseReleased(int x, int y, int button) { } //-------------------------------------------------------------- void ofApp::mouseEntered(int x, int y) { } //-------------------------------------------------------------- void ofApp::mouseExited(int x, int y) { } //-------------------------------------------------------------- void ofApp::windowResized(int w, int h) { } //-------------------------------------------------------------- void ofApp::gotMessage(ofMessage msg) { } //-------------------------------------------------------------- void ofApp::dragEvent(ofDragInfo dragInfo) { }

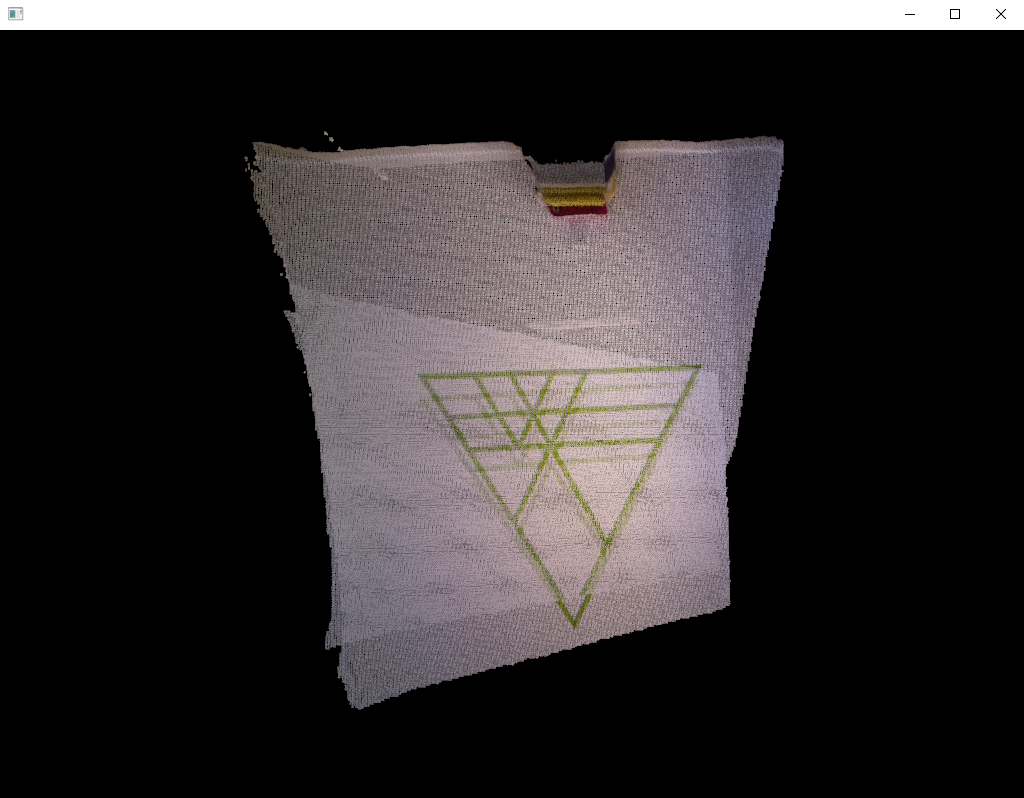

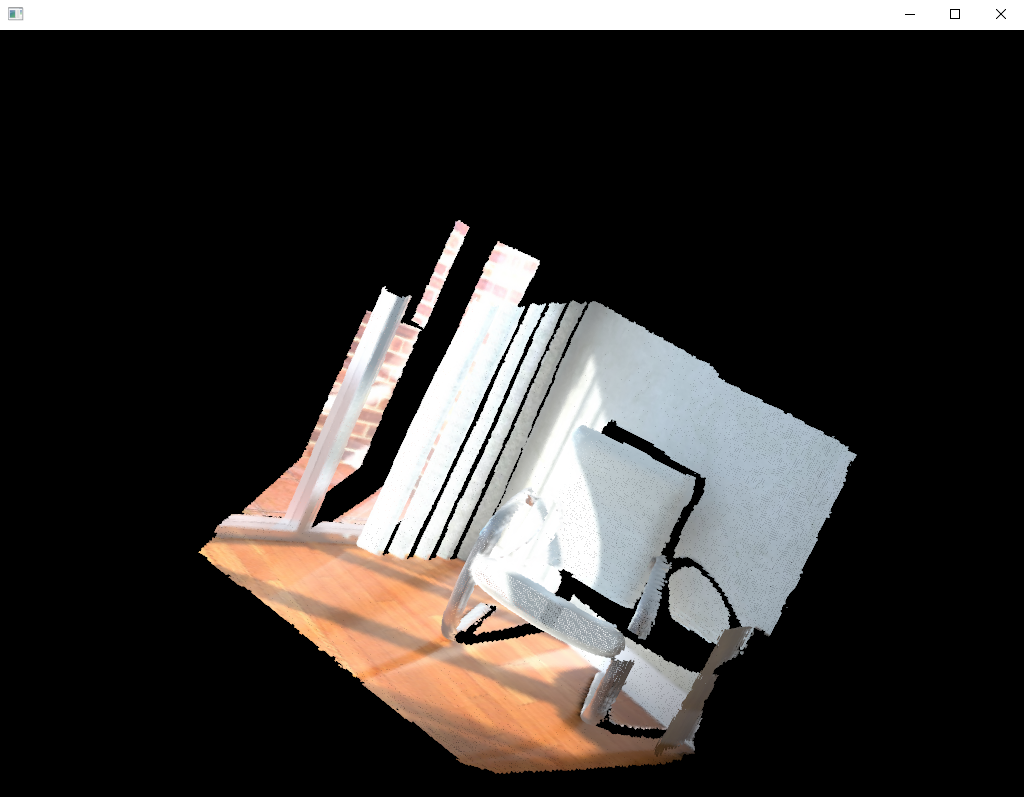

実行結果

一つのセグメントの処理が一巡すると下のようなメッセージが表示されて/fragmentsフォルダの下に次の3つのファイルが生成されます。

[GlobalOptimizationLM] Optimizing PoseGraph having 100 nodes and 118 edges.

Line process weight : 44793.956121

[Initial ] residual : 2.438500e+04, lambda : 2.543928e+02

[Iteration 00] residual : 5.961908e+03, valid edges : 118, time : 0.005 sec.

[Iteration 01] residual : 3.527648e+03, valid edges : 118, time : 0.005 sec.

[Iteration 02] residual : 3.525901e+03, valid edges : 118, time : 0.005 sec.

[Iteration 03] residual : 3.525896e+03, valid edges : 118, time : 0.005 sec.

Current_residual - new_residual < 1.000000e-06 * current_residual

[GlobalOptimizationLM] total time : 0.025 sec.

[GlobalOptimizationLM] Optimizing PoseGraph having 100 nodes and 118 edges.

Line process weight : 44793.956121

[Initial ] residual : 3.525896e+03, lambda : 2.608960e+02

Current_residual - new_residual < 1.000000e-06 * current_residual

[GlobalOptimizationLM] total time : 0.003 sec.

CompensateReferencePoseGraphNode : reference : 0

fragment_000.json (posegarph)

fragment_000.ply (mesh)

fragment_optimized_000.json (最適化したposegraph)

今日のそうめん

もっともっと大きなのもオススメ。

store.shopping.yahoo.co.jp

Open3DのReconstruction systemをC++に移植(その1-2 make_fragment)

make_fragmentのc++移植の続き

opencv_pose_estimation.h

#pragma once // Open3D: www.open3d.org // The MIT License (MIT) // See license file or visit www.open3d.org for details #include <Core/Core.h> #include <IO/IO.h> #include <Visualization/Visualization.h> #include <opencv/cv.h> std::tuple<bool, Eigen::Matrix4d> pose_estimation(three::RGBDImage& source_rgbd_image, three::RGBDImage& target_rgbd_image, three::PinholeCameraIntrinsic intrinsic, bool debug_draw_correspondences); std::tuple<bool, Eigen::Matrix4d, std::vector<int>&> estimate_3D_transform_RANSAC(std::vector<Eigen::Vector3d>& pts_xyz_s, std::vector<Eigen::Vector3d>& pts_xyz_t); std::tuple<Eigen::Matrix3d, Eigen::Vector3d> estimate_3D_transform(std::vector<Eigen::Vector3d>& input_xyz_s, std::vector<Eigen::Vector3d>& input_xyz_t); Eigen::Vector3d get_xyz_from_pts(cv::Point2d pts_row, cv::Mat& depth, double px, double py, double focal); Eigen::Vector3d get_xyz_from_uv(double u, double v, double d, double px, double py, double focal);

opencv_pose_estimation.cpp

// following original code is tested with OpenCV 3.2.0 and Python2.7 #include "opencv_pose_estimation.h" #include <random> #include <Eigen/SVD> #include <Eigen/LU> #include <opencv/cv.h> #include <opencv2/features2d.hpp> #include <opencv2/highgui.hpp> #include <opencv2/calib3d.hpp> std::tuple<bool, Eigen::Matrix4d> pose_estimation(three::RGBDImage& source_rgbd_image, three::RGBDImage& target_rgbd_image, three::PinholeCameraIntrinsic pinhole_camera_intrinsic, bool debug_draw_correspondences) { bool success = false; Eigen::Matrix4d trans = Eigen::Matrix4d::Identity(); // transform double array to unit8 array cv::Mat color_cv_s_f(source_rgbd_image.color_.height_, source_rgbd_image.color_.width_, CV_32FC1); cv::Mat color_cv_t_f(target_rgbd_image.color_.height_, target_rgbd_image.color_.width_, CV_32FC1); memcpy((void*)(color_cv_s_f.data), (void*)(&source_rgbd_image.color_.data_[0]), source_rgbd_image.color_.width_ * source_rgbd_image.color_.height_ * source_rgbd_image.color_.num_of_channels_ * source_rgbd_image.color_.bytes_per_channel_); memcpy((void*)(color_cv_t_f.data), (void*)(&target_rgbd_image.color_.data_[0]), target_rgbd_image.color_.width_ * target_rgbd_image.color_.height_ * target_rgbd_image.color_.num_of_channels_ * target_rgbd_image.color_.bytes_per_channel_); cv::Mat color_cv_s; cv::Mat color_cv_t; color_cv_s_f.convertTo(color_cv_s, CV_8UC1, 255); color_cv_t_f.convertTo(color_cv_t, CV_8UC1, 255); int nfeatures = 100; float scaleFactor = 1.2f; int nlevels = 8; int edgeThreshold = 31; int firstLevel = 0; int WTA_K = 2; int scoreType = cv::ORB::HARRIS_SCORE; int patchSize = 31; cv::Ptr<cv::ORB> orb = cv::ORB::create(nfeatures, scaleFactor, nlevels, edgeThreshold, firstLevel, WTA_K, scoreType, patchSize); // to save time cv::Mat des_s, des_t; std::vector<cv::KeyPoint> kp_s, kp_t; orb->detect(color_cv_s, kp_s); orb->detect(color_cv_t, kp_t); orb->compute(color_cv_s, kp_s, des_s); orb->compute(color_cv_t, kp_t, des_t); if ((kp_s.size() == 0) || (kp_t.size() == 0)) { return std::tuple<bool, Eigen::Matrix4d>(success, trans); } cv::Ptr<cv::DescriptorMatcher> matcher = cv::DescriptorMatcher::create("BruteForce-Hamming"); std::vector<cv::DMatch> dmatch; dmatch.clear(); std::vector<cv::DMatch> dmatch12, dmatch21; matcher->match(des_s, des_t, dmatch12); matcher->match(des_t, des_s, dmatch21); // CrossCheck = True for (size_t i = 0; i < dmatch12.size(); ++i) { cv::DMatch m12 = dmatch12[i]; cv::DMatch m21 = dmatch21[m12.trainIdx]; if (m21.trainIdx == m12.queryIdx) dmatch.push_back(m12); } int count = static_cast<int>(dmatch.size()); std::vector<cv::Point2d> pts_s; std::vector<cv::Point2d> pts_t; pts_s.clear(); pts_t.clear(); for (int i = 0; i < dmatch.size(); i++) { pts_t.push_back(kp_t[dmatch[i].trainIdx].pt); pts_s.push_back(kp_s[dmatch[i].queryIdx].pt); } // inlier points after initial BF matching if (debug_draw_correspondences) { cv::Mat result; drawMatches(color_cv_s, kp_s, color_cv_t, kp_t, dmatch, result); cv::imshow("Initial BF matching", result); cv::waitKey(1); // draw_correspondences(); } double focal_input = (pinhole_camera_intrinsic.intrinsic_matrix_(0, 0) + pinhole_camera_intrinsic.intrinsic_matrix_(1, 1)) / 2.0; double pp_x = pinhole_camera_intrinsic.intrinsic_matrix_(0, 2); double pp_y = pinhole_camera_intrinsic.intrinsic_matrix_(1, 2); // Essential matrix is made for masking inliers std::vector<cv::Point2i> pts_s_int; std::vector<cv::Point2i> pts_t_int; for (int i = 0; i < pts_s.size(); i++) { pts_s_int.push_back(cv::Point2i(pts_s[i].x + 0.5, pts_s[i].y + 0.5)); } for (int i = 0; i < pts_t.size(); i++) { pts_t_int.push_back(cv::Point2i(pts_t[i].x + 0.5, pts_t[i].y + 0.5)); } cv::Mat E, mask; double focal = focal_input; cv::Point2d pp(pp_x, pp_y); int method = cv::RANSAC; double prob = 0.999; double threshold = 1.0; E = cv::findEssentialMat(pts_s_int, pts_t_int, focal, pp, method, prob, threshold, mask); if (mask.empty()) { return std::tuple<bool, Eigen::Matrix4d>(success, trans); } // inlier points after 5pt algorithm if (debug_draw_correspondences) { } // make 3D correspondences cv::Mat depth_s(source_rgbd_image.depth_.height_, source_rgbd_image.depth_.width_, CV_32FC1); cv::Mat depth_t(source_rgbd_image.depth_.height_, source_rgbd_image.depth_.width_, CV_32FC1); memcpy((void*)(depth_s.data), (void*)(&source_rgbd_image.depth_.data_[0]), source_rgbd_image.depth_.width_ * source_rgbd_image.depth_.height_ * source_rgbd_image.depth_.num_of_channels_ * source_rgbd_image.depth_.bytes_per_channel_); memcpy((void*)(depth_t.data), (void*)(&target_rgbd_image.depth_.data_[0]), target_rgbd_image.depth_.width_ * target_rgbd_image.depth_.height_ * target_rgbd_image.depth_.num_of_channels_ * target_rgbd_image.depth_.bytes_per_channel_); std::vector<Eigen::Vector3d> pts_xyz_s; std::vector<Eigen::Vector3d> pts_xyz_t; pts_xyz_s.clear(); pts_xyz_t.clear(); int cnt = 0; for (int i = 0; i < pts_s.size(); i++) { if (mask.at<int>(i)) { pts_xyz_s.push_back(get_xyz_from_pts(pts_s[i], depth_s, pp_x, pp_y, focal_input)); pts_xyz_t.push_back(get_xyz_from_pts(pts_t[i], depth_t, pp_x, pp_y, focal_input)); cnt = cnt + 1; } } auto ret = estimate_3D_transform_RANSAC(pts_xyz_s, pts_xyz_t); success = std::get<0>(ret); trans = std::get<1>(ret); std::vector<int> inlier_id_vec = std::get<2>(ret); if (debug_draw_correspondences) { } return std::tuple<bool, Eigen::Matrix4d>(success, trans); } std::tuple<bool, Eigen::Matrix4d, std::vector<int>&> estimate_3D_transform_RANSAC(std::vector<Eigen::Vector3d>& pts_xyz_s, std::vector<Eigen::Vector3d>& pts_xyz_t) { int max_iter = 1000; double max_distance = 0.05; int n_sample = 5; int n_points = static_cast<int>(pts_xyz_s.size()); Eigen::Matrix4d Transform_good = Eigen::Matrix4d::Identity(); int max_inlier = n_sample; std::vector<int> inlier_vec_good; inlier_vec_good.clear(); bool success = false; if (n_points < n_sample) { return std::tuple<bool, Eigen::Matrix4d, std::vector<int>&>(success, Transform_good, inlier_vec_good); } for (int i = 0; i < max_iter; i++) { std::vector<Eigen::Vector3d> sample_xyz_s; std::vector<Eigen::Vector3d> sample_xyz_t; // sampling std::random_device rnd; std::mt19937 mt(rnd()); std::uniform_int_distribution<> randn(0, n_points); for (int j = 0; j < n_sample; j++) { int rand_idx = randn(mt); sample_xyz_s.push_back(pts_xyz_s[rand_idx]); sample_xyz_t.push_back(pts_xyz_t[rand_idx]); } auto ret = estimate_3D_transform(sample_xyz_s, sample_xyz_t); Eigen::Matrix3d R_approx = std::get<0>(ret); Eigen::Vector3d t_approx = std::get<1>(ret); // evaluation std::vector<Eigen::Vector3d> diff_mat; for (int j = 0; j < n_points; j++) { diff_mat.push_back(pts_xyz_t[j] - (R_approx * pts_xyz_s[j] + t_approx)); } std::vector<double> diff; for (int j = 0; j < n_points; j++) { diff.push_back(diff_mat[j].norm()); } int n_inlier = 0; for (int j = 0; j < diff.size(); j++) { double diff_iter = diff[j]; if (diff_iter < max_distance) { n_inlier += 1; } } // note: diag(R_approx) > 0 prevents ankward transformation between // RGBD pair of relatively small amount of baseline. if ((n_inlier > max_inlier) && (R_approx.determinant() != 0.) && (R_approx(0, 0) > 0) && (R_approx(1, 1) > 0) && (R_approx(2, 2) > 0)) { for (int j = 0; j < 3; j++) { for (int k = 0; k < 3; k++) { Transform_good(j, k) = R_approx(j, k); } Transform_good(j, 3) = t_approx(j); } // std::cout << "Transform_good = \n" << Transform_good << std::endl; max_inlier = n_inlier; inlier_vec_good.clear(); for (int id_iter = 0; id_iter < n_points; id_iter++) { double diff_iter = diff[id_iter]; if (diff_iter < max_distance) { inlier_vec_good.push_back(id_iter); } } success = true; } } return std::tuple<bool, Eigen::Matrix4d, std::vector<int>&>(success, Transform_good, inlier_vec_good); } // signular value decomposition approach // based on the description in the sec 3.1.2 in // http::/graphics.stanford.edu/~smr/ICP/comparison/eggert_comparison_mva97.pdf std::tuple<Eigen::Matrix3d, Eigen::Vector3d> estimate_3D_transform(std::vector<Eigen::Vector3d>& input_xyz_s, std::vector<Eigen::Vector3d>& input_xyz_t) { // compute H int n_points = static_cast<int>(input_xyz_s.size()); Eigen::Vector3d mean_s(0., 0., 0.); for (int i = 0; i < input_xyz_s.size(); i++) { mean_s += input_xyz_s[i]; } mean_s /= n_points; Eigen::Vector3d mean_t(0., 0., 0.); for (int i = 0; i < input_xyz_t.size(); i++) { mean_t += input_xyz_t[i]; } mean_t /= n_points; Eigen::MatrixXd xyz_diff_s(3, input_xyz_s.size()); for (int i = 0; i < input_xyz_s.size(); i++) { for (int j = 0; j < 3; j++) { xyz_diff_s(j, i) = input_xyz_s[i](j) - mean_s(j); } } Eigen::MatrixXd xyz_diff_t(3, input_xyz_t.size()); for (int i = 0; i < input_xyz_t.size(); i++) { for (int j = 0; j < 3; j++) { xyz_diff_t(j, i) = input_xyz_t[i](j) - mean_t(j); } } Eigen::MatrixXd H; H.resize(3, 3); H = xyz_diff_s * xyz_diff_t.transpose(); // solve system Eigen::JacobiSVD<Eigen::MatrixXd> svd(H, Eigen::ComputeFullU | Eigen::ComputeFullV); Eigen::Matrix3d R_approx = svd.matrixV().transpose() * svd.matrixU().transpose(); if (R_approx.determinant() < 0.0) { double det = (svd.matrixU() * svd.matrixV()).determinant(); Eigen::Matrix3d D = Eigen::Matrix3d::Identity(); D(2, 2) = det; R_approx = svd.matrixU() * (D * svd.matrixV()); } Eigen::Vector3d t_approx = mean_t - (R_approx * mean_s); return std::tuple<Eigen::Matrix3d, Eigen::Vector3d>(R_approx, t_approx); } Eigen::Vector3d get_xyz_from_pts(cv::Point2d pts_row, cv::Mat& depth, double px, double py, double focal) { double u = pts_row.x; double v = pts_row.y; int u0 = int(u); int v0 = int(v); int height = depth.rows; int width = depth.cols; // bilinear depth interpolation if ((u0 > 0) && (u0 < width - 1) && (v0 > 0) && (v0 < height - 1)) { double up = pts_row.x - u0; double vp = pts_row.y - v0; double d0 = depth.at<double>(v0, u0); double d1 = depth.at<double>(v0, u0 + 1); double d2 = depth.at<double>(v0 + 1, u0); double d3 = depth.at<double>(v0 + 1, u0); double d = (1 - vp) * (d1 * up + d0 * (1 - up)) + vp * (d3 * up + d2 * (1 - up)); return get_xyz_from_uv(u, v, d, px, py, focal); } return Eigen::Vector3d(0., 0., 0.); } Eigen::Vector3d get_xyz_from_uv(double u, double v, double d, double px, double py, double focal) { double x, y; if (focal != 0.) { x = (u - px) / focal + d; y = (v - py) / focal + d; } else { x = 0.; y = 0.; } return Eigen::Vector3d(x, y, d).transpose(); }

optimize_posegraph.h

#pragma once // Open3D: www.open3d.org // The MIT License (MIT) // See license file or visit www.open3d.org for details #include <Core/Core.h> #include <IO/IO.h> #include <Visualization/Visualization.h> void optimize_posegraph_for_fragment(std::string path_dataset, int fragment_id); void optimize_posegraph_for_scene(std::string path_dataset);

optimize_posegraph.cpp

#include "optimize_posegraph.h" #include <Core/Registration/PoseGraph.h> #include <Core/Registration/GlobalOptimizationMethod.h> #include <Core/Registration/GlobalOptimizationConvergenceCriteria.h> #include <Core/Registration/GlobalOptimization.h> #include <IO/ClassIO/PoseGraphIO.h> extern std::string template_fragment_posegraph; extern std::string template_fragment_posegraph_optimized; extern std::string template_global_posegraph; extern std::string template_global_posegraph_optimized; void run_posegraph_optimization(std::string pose_graph_name, std::string pose_graph_optimized_name, double max_correspondence_distance, double preference_loop_closure) { // to display messages from global optimization three::SetVerbosityLevel(three::VerbosityLevel::VerboseDebug); const three::GlobalOptimizationMethod & method = three::GlobalOptimizationLevenbergMarquardt(); const three::GlobalOptimizationConvergenceCriteria &criteria = three::GlobalOptimizationConvergenceCriteria(); const three::GlobalOptimizationOption &option = three::GlobalOptimizationOption(max_correspondence_distance, 0.25, preference_loop_closure, 0); three::PoseGraph pose_graph; //three::ReadPoseGraph(pose_graph_name, pose_graph); // Read PoseGraph failed: unknown file extension. three::ReadIJsonConvertibleFromJSON(pose_graph_name, pose_graph); three::GlobalOptimization(pose_graph, method, criteria, option); //three::WritePoseGraph(pose_graph_optimized_name, pose_graph); // Write PoseGraph failed: unknown file extension. three::WriteIJsonConvertibleToJSON(pose_graph_optimized_name, pose_graph); three::SetVerbosityLevel(three::VerbosityLevel::VerboseError); } void optimize_posegraph_for_fragment(std::string path_dataset, int fragment_id) { std::string pose_graph_name(path_dataset + template_fragment_posegraph); sprintf((char*)pose_graph_name.data(), (path_dataset + template_fragment_posegraph).c_str(), fragment_id); std::string pose_graph_optimized_name(path_dataset + template_fragment_posegraph_optimized); sprintf((char*)pose_graph_optimized_name.data(), (path_dataset + template_fragment_posegraph_optimized).c_str(), fragment_id); run_posegraph_optimization(pose_graph_name, pose_graph_optimized_name, 0.07, 0.1); } void optimize_posegraph_for_scene(std::string path_dataset) { std::string pose_graph_name(path_dataset + template_global_posegraph); std::string pose_graph_optimized_name(path_dataset + template_global_posegraph_optimized); double max_correspondence_distance = 0.07; double preference_loop_closure = 2.0; run_posegraph_optimization(pose_graph_name, pose_graph_optimized_name, max_correspondence_distance, preference_loop_closure); }

注意点

- ファイル入出力だけにopenFrameworksの関数を使っています。ofDirなど。代替のライブラリがあれば、そちらに書き換えてください。

- ReadPoseGraph, WritePoseGraphなどファイルの入出力のユーティリティ関数において、拡張子の照合が上手くいかなくて正しく動作しません。代わりに、拡張子を特定してWriteJsonConvertibleToJSONなどを直接呼んでいます。

- デバッグ用の表示関数を省略しています。つまり、ちゃんとデバッグできていない可能性があります。

- Eigenを使い慣れてないせいで無駄にstd::vectorと組み合わせたり、汚い書き方していますがご容赦を。もしくはきれいに書き換えてください。

今日のそうめん

そうめんは古くなるほどおいしくなるそうなので、もっと大きなやつもオススメ。

store.shopping.yahoo.co.jp

Open3DのReconstruction systemをC++に移植(その1-1 make_fragment)

Open3DのReconstruction Systetmは,距離画像とカラー画像の動画シーケンスから立体モデルを復元するためのPythonチュートリアルです。

詳細はこちらにあります。

Reconstruction system — Open3D 0.1 dev documentation

テストデータは、上記で紹介されているこれを使います。(約500MB)

016.zip - Google ドライブ

これをc++(openFrameworks)に移植していきます。開発環境はWindows10とopenFrameworks0.10.0です。

あらかじめ断っておくと、Open3Dはもともとc++で実装されていてpythonはインターフェースしているだけなので、Reconstruction systemをc++で書いたところでたいして速くなりません。

また、まだ全部のコンポーネントの移植が済んでいないのでデバッグが完ぺきではありません。

Reconstruction systemは、Make fragments、Register fragments、Integrate sceneの3つのコンポーネントにより構成されています。

make_fragments

make_framgentsは,ひと続きの動画シーケンスから時間的に連続するフレームとループクロージャーの検出をしてPoseGraphを作って、そこから小さめのメッシュの復元をします。

詳細はこちら。

Make fragments — Open3D 0.1 dev documentation

pythonソースは、次の5個から構成されています。

make_fragments.py

common.py

opencv.py

opencv_pose_estimation.py

optimize_posegraph.py

make_fragments.h

#pragma once // Open3D: www.open3d.org // The MIT License (MIT) // See license file or visit www.open3d.org for details #include <Core/Core.h> #include <IO/IO.h> #include <Visualization/Visualization.h> std::tuple<bool, Eigen::Matrix4d, Eigen::Matrix6d> register_one_rgbd_pair(int s, int t, std::vector<std::string>& color_files, std::vector<std::string>& depth_files, three::PinholeCameraIntrinsic intrinsic, bool with_opencv); void make_posegraph_for_fragment(std::string path_dataset, int sid, int eid, std::vector<std::string> color_files, std::vector<std::string> depth_files, int fragment_id, int n_fragments, three::PinholeCameraIntrinsic intrinsic, bool with_opencv); std::shared_ptr<three::TriangleMesh> integrate_rgb_frames_for_fragment(std::vector<std::string>& color_files, std::vector<std::string>& depth_files, int fragment_id, int n_fragments, std::string pose_graph_name, three::PinholeCameraIntrinsic intrinsic); void make_mesh_for_fragment(std::string path_dataset, std::vector<std::string>& color_files, std::vector<std::string>& depth_files, int fragment_id, int n_fragments, three::PinholeCameraIntrinsic intrinsic); void process_fragments(std::string path_dataset, std::string path_intrinsic, bool with_opencv); int make_fragments_main(std::string path_dataset_, std::string path_intrisic_);

make_fragments.cpp

#include "make_fragments.h" #include "common_py.h" #include "opencv_py.h" #include "opencv_pose_estimation.h" #include "optimize_posegraph.h" #include <Core/Registration/PoseGraph.h> #include <Eigen/Dense> #include <iostream> #include <thread> ///////////////////////// // some global parameters for the global registration ///////////////////////// extern int n_frames_per_fragment; extern int n_keyframes_per_n_frame; ////////////////////////// // file related ////////////////////////// extern std::string folder_fragment; extern std::string template_fragment_posegraph; extern std::string template_fragment_posegraph_optimized; extern std::string template_fragment_mesh; extern std::string folder_scene; extern std::string template_global_posegraph; extern std::string template_global_posegraph_optimized; extern std::string template_global_mesh; std::tuple<bool, Eigen::Matrix4d, Eigen::Matrix6d> register_one_rgbd_pair(int s, int t, std::vector<std::string>& color_files, std::vector<std::string>& depth_files, three::PinholeCameraIntrinsic intrinsic, bool with_opencv) { // read_images auto color_s = std::make_shared<three::Image>(); auto depth_s = std::make_shared<three::Image>(); auto color_t = std::make_shared<three::Image>(); auto depth_t = std::make_shared<three::Image>(); three::ReadImage(color_files[s], *color_s); three::ReadImage(depth_files[s], *depth_s); three::ReadImage(color_files[t], *color_t); three::ReadImage(depth_files[t], *depth_t); auto source_rgbd_image = std::make_shared<three::RGBDImage>(); auto target_rgbd_image = std::make_shared<three::RGBDImage>(); source_rgbd_image = three::CreateRGBDImageFromColorAndDepth(*color_s, *depth_s, 1000.0, 3.0, true); target_rgbd_image = three::CreateRGBDImageFromColorAndDepth(*color_t, *depth_t, 1000.0, 3.0, true); if (abs(s - t) != 1) { if (with_opencv) { auto ret = pose_estimation( *source_rgbd_image, *target_rgbd_image, intrinsic, true/*false*/); bool success_5pt = std::get<0>(ret); Eigen::Matrix4d odo_init = std::get<1>(ret); if (success_5pt) { auto ret = three::ComputeRGBDOdometry( *source_rgbd_image, *target_rgbd_image, intrinsic, odo_init, three::RGBDOdometryJacobianFromHybridTerm(), three::OdometryOption()); return ret; } } return std::tuple<bool, Eigen::Matrix4d, Eigen::Matrix6d>(false, Eigen::Matrix4d::Identity(), Eigen::Matrix6d::Identity()); } else { Eigen::Matrix4d odo_init = Eigen::Matrix4d::Identity(); return three::ComputeRGBDOdometry( *source_rgbd_image, *target_rgbd_image, intrinsic, odo_init, three::RGBDOdometryJacobianFromHybridTerm(), three::OdometryOption()); } return std::tuple<bool, Eigen::Matrix4d, Eigen::Matrix6d>(false, Eigen::Matrix4d::Identity(), Eigen::Matrix6d::Identity()); // dummy } void make_posegraph_for_fragment(std::string path_dataset, int sid, int eid, std::vector<std::string> color_files, std::vector<std::string> depth_files, int fragment_id, int n_fragments, three::PinholeCameraIntrinsic intrinsic, bool with_opencv) { three::SetVerbosityLevel(three::VerbosityLevel::VerboseError); three::PoseGraph pose_graph; Eigen::Matrix4d trans_odometry = Eigen::Matrix4d::Identity(); pose_graph.nodes_.push_back(three::PoseGraphNode(trans_odometry)); for (int s = sid; s < eid; s++) { for (int t = s + 1; t < eid; t++) { // odometry if (t == s + 1) { std::cout << "Fragment " << fragment_id << " / " << n_fragments - 1 << " :: RGBD matching between frame : " << s << " and " << t << std::endl; auto ret = register_one_rgbd_pair( s, t, color_files, depth_files, intrinsic, with_opencv); bool& success = std::get<0>(ret); Eigen::Matrix4d& trans = std::get<1>(ret); Eigen::Matrix6d& info = std::get<2>(ret); trans_odometry = trans * trans_odometry; Eigen::Matrix4d trans_odometry_inv = trans_odometry.inverse(); pose_graph.nodes_.push_back(three::PoseGraphNode(trans_odometry_inv)); pose_graph.edges_.push_back( three::PoseGraphEdge(s - sid, t - sid, trans, info, false)); } // keyframe loop closure if ((s % n_keyframes_per_n_frame == 0) && (t % n_keyframes_per_n_frame == 0)) { std::cout << "Fragment " << fragment_id << " / " << n_fragments - 1 << " :: RGBD matching between frame : " << s << " and " << t << std::endl; auto ret = register_one_rgbd_pair( s, t, color_files, depth_files, intrinsic, with_opencv); bool success = std::get<0>(ret); Eigen::Matrix4d trans = std::get<1>(ret); Eigen::Matrix6d info = std::get<2>(ret); if (success) { pose_graph.edges_.push_back( three::PoseGraphEdge(s - sid, t - sid, trans, info, true)); } } } } std::string filename(path_dataset + template_fragment_posegraph); sprintf((char*)filename.data(), (path_dataset + template_fragment_posegraph).c_str(), fragment_id); WriteIJsonConvertibleToJSON(filename, pose_graph); //int ret = three::WritePoseGraph(filename, pose_graph); } std::shared_ptr<three::TriangleMesh> integrate_rgb_frames_for_fragment(std::vector<std::string>& color_files, std::vector<std::string>& depth_files, int fragment_id, int n_fragments, std::string pose_graph_name, three::PinholeCameraIntrinsic intrinsic) { three::PoseGraph pose_graph; three::ReadIJsonConvertibleFromJSON(pose_graph_name, pose_graph); three::ScalableTSDFVolume volume = three::ScalableTSDFVolume(3.0 / 512.0, 0.04, true); for (int i = 0; i < pose_graph.nodes_.size(); i++) { int i_abs = fragment_id * n_frames_per_fragment + i; std::cout << "Fragment " << fragment_id << " / " << n_fragments - 1 << " : integrate rgbd frame " << i_abs << " (" << i + 1 << " of " << pose_graph.nodes_.size() << ")." << std::endl; std::shared_ptr<three::Image> color = std::make_shared<three::Image>(); std::shared_ptr<three::Image> depth = std::make_shared<three::Image>(); three::ReadImage(color_files[i_abs], *color); three::ReadImage(depth_files[i_abs], *depth); std::shared_ptr<three::RGBDImage> rgbd = three::CreateRGBDImageFromColorAndDepth(*color, *depth, 1000.0, 3.0, false); Eigen::Matrix4d pose = pose_graph.nodes_[i].pose_; volume.Integrate(*rgbd, intrinsic, pose.inverse()); } std::shared_ptr<three::TriangleMesh> mesh = volume.ExtractTriangleMesh(); mesh->ComputeVertexNormals(); return mesh; } void make_mesh_for_fragment(std::string path_dataset, std::vector<std::string>& color_files, std::vector<std::string>& depth_files, int fragment_id, int n_fragments, three::PinholeCameraIntrinsic intrinsic) { std::string filename(path_dataset + template_fragment_posegraph_optimized); sprintf((char*)filename.data(), (path_dataset + template_fragment_posegraph_optimized).c_str(), fragment_id); std::shared_ptr<three::TriangleMesh> mesh = integrate_rgb_frames_for_fragment( color_files, depth_files, fragment_id, n_fragments, filename, intrinsic ); std::string mesh_name(path_dataset + template_fragment_mesh); sprintf((char*)mesh_name.data(), (path_dataset + template_fragment_mesh).c_str(), fragment_id); //three::WriteTriangleMesh(mesh_name, *mesh, false, true); three::WriteTriangleMeshToPLY(mesh_name, *mesh, false, true); } three::PinholeCameraIntrinsic read_pinhole_camera_intrinsic(const std::string &filename) { three::PinholeCameraIntrinsic intrinsic; ReadIJsonConvertible(filename, intrinsic); return intrinsic; } void process_fragments(std::string path_dataset, std::string path_intrinsic, bool with_opencv) { three::PinholeCameraIntrinsic intrinsic; if (!path_intrinsic.empty()) { intrinsic = read_pinhole_camera_intrinsic(path_intrinsic); } else { intrinsic = three::PinholeCameraIntrinsic( three::PinholeCameraIntrinsicParameters::PrimeSenseDefault); } make_folder(path_dataset + folder_fragment); std::shared_ptr<std::vector<std::string>> color_files; std::shared_ptr<std::vector<std::string>> depth_files; get_rgbd_file_lists(color_files, depth_files, path_dataset); int n_files = static_cast<int>(color_files->size()); int n_fragments = static_cast<int>(ceil(float(n_files) / n_frames_per_fragment)); for (int fragment_id = 0; fragment_id < n_fragments; fragment_id++) { int sid = fragment_id * n_frames_per_fragment; int eid = std::min(sid + n_frames_per_fragment, n_files); make_posegraph_for_fragment(path_dataset, sid, eid, *color_files, *depth_files, fragment_id, n_fragments, intrinsic, with_opencv); optimize_posegraph_for_fragment(path_dataset, fragment_id); make_mesh_for_fragment(path_dataset, *color_files, *depth_files, fragment_id, n_fragments, intrinsic); } } int make_fragments_main(std::string path_dataset_, std::string path_intrinsic_) { std::cout << "making fragments from RGBD sequence." << std::endl; std::string path_dataset = path_dataset_; std::cout << "path to the dataset " << path_dataset << std::endl; std::string path_intrinsic = path_intrinsic_; std::cout << "path to the RGBD camera intrinsic " << path_intrinsic << std::endl; // check opencv package bool with_opencv = initialize_opencv(true); process_fragments(path_dataset, path_intrinsic, with_opencv); return 0; }

common_py.h

#pragma once // Open3D: www.open3d.org // The MIT License (MIT) // See license file or visit www.open3d.org for details #include <Core/Core.h> #include <IO/IO.h> #include <Visualization/Visualization.h> std::shared_ptr<std::vector<std::string>> get_file_list(std::string path, std::string extension); void get_rgbd_file_lists(std::shared_ptr<std::vector<std::string>>& color_files, std::shared_ptr<std::vector<std::string>>& depth_files, std::string path_dataset); void make_folder(std::string path_folder); //////////////////////// // visualization related //////////////////////// void draw_pcd(std::shared_ptr<three::PointCloud>& pcd); void draw_registration(three::PointCloud& source, three::PointCloud& target, Eigen::Matrix4d& transformation); void draw_registration_result_original_color(three::PointCloud& source, three::PointCloud& target, Eigen::Matrix4d transformation);

common_py.cpp

#include "common_py.h" #include <ofFileUtils.h> using namespace three; ///////////////////////// // some global parameters for the global registration ///////////////////////// int n_frames_per_fragment = 100; int n_keyframes_per_n_frame = 5; ////////////////////////// // file related ////////////////////////// std::string folder_fragment = "/fragments/"; std::string template_fragment_posegraph = folder_fragment + "fragment_%03d.json"; std::string template_fragment_posegraph_optimized = folder_fragment + "fragment_optimized_%03d.json"; std::string template_fragment_mesh = folder_fragment + "fragment_%03d.ply"; std::string folder_scene = "/scene/"; std::string template_global_posegraph = folder_scene + "global_registration.json"; std::string template_global_posegraph_optimized = folder_scene + "global_registration_optimized.json"; std::string template_global_mesh = folder_scene + "integrated.ply"; std::shared_ptr<std::vector<std::string>> get_file_list(std::string path, std::string extension) { auto file_list = std::make_shared<std::vector<std::string>>(); //std::cout << path << " " << extension << std::endl; if (extension == "None") { ofDirectory dir(path); for (int i = 0; i < dir.getFiles().size(); i++) { std::string f = dir.getFile(i).getFileName(); ofFile path_f; path_f.open(path + f, ofFile::Mode::ReadOnly); if (path_f.exists()) { if (path_f.isFile()) { file_list->push_back(path_f.getAbsolutePath()); } } } } else { ofDirectory dir(path); for (int i = 0; i < dir.getFiles().size(); i++) { std::string f = dir.getFile(i).getFileName(); ofFile path_f; path_f.open(path + f, ofFile::Mode::ReadOnly); if (path_f.exists()) { if (path_f.isFile()) { if ('.' + path_f.getExtension() == extension) { file_list->push_back(path_f.getAbsolutePath()); } } } } } std::sort(file_list->begin(), file_list->end()); return file_list; } void get_rgbd_file_lists(std::shared_ptr<std::vector<std::string>>& color_files, std::shared_ptr<std::vector<std::string>>& depth_files, std::string path_dataset) { std::string path_color = path_dataset + "/image/"; std::string path_depth = path_dataset + "/depth/"; color_files = get_file_list(path_color, ".jpg"); auto color_files_png = get_file_list(path_color, ".png"); std::copy(color_files_png->begin(), color_files_png->end(), std::back_inserter(*color_files)); depth_files = get_file_list(path_depth, ".png"); } void make_folder(std::string path_folder) { ofDirectory dir(path_folder); if (!dir.exists()) { dir.create(); } } //////////////////////// // visualization related //////////////////////// void draw_pcd(std::shared_ptr<three::PointCloud>& pcd) { Eigen::Matrix4d flip_transform; flip_transform << 1., 0., 0., 0., 0., -1., 0., 0., 0., 0., -1., 0., 0., 0., 0., 1.; auto pcd_temp = std::make_shared<three::PointCloud>(*pcd); pcd_temp->Transform(flip_transform); three::DrawGeometries({ pcd_temp }); } void draw_registration(three::PointCloud& source, three::PointCloud& target, Eigen::Matrix4d& transformation) { Eigen::Matrix4d flip_transform; flip_transform << 1., 0., 0., 0., 0., -1., 0., 0., 0., 0., -1., 0., 0., 0., 0., 1.; auto source_temp = std::make_shared<three::PointCloud>(source); auto target_temp = std::make_shared<three::PointCloud>(target); source_temp->PaintUniformColor(Eigen::Vector3d(1.f, 0.706f, 0.f)); target_temp->PaintUniformColor(Eigen::Vector3d(0.f, 0.651f, 0.929f)); source_temp->Transform(transformation); source_temp->Transform(flip_transform); target_temp->Transform(flip_transform); three::DrawGeometries({ source_temp, target_temp }); } void draw_registration_result_original_color(three::PointCloud& source, three::PointCloud& target, Eigen::Matrix4d transformation) { Eigen::Matrix4d flip_transform; flip_transform << 1., 0., 0., 0., 0., -1., 0., 0., 0., 0., -1., 0., 0., 0., 0., 1.; auto source_temp = std::make_shared<three::PointCloud>(source); auto target_temp = std::make_shared<three::PointCloud>(target); source_temp->Transform(transformation); source_temp->Transform(flip_transform); target_temp->Transform(flip_transform); three::DrawGeometries({ source_temp, target_temp }); }

opencv_py.h

#pragma once // Open3D: www.open3d.org // The MIT License (MIT) // See license file or visit www.open3d.org for details bool initialize_opencv(bool use);

opencv_py.cpp

#include "opencv_py.h" #include <iostream> // opencv bool initialize_opencv(bool use = true) { bool opencv_installed = use; if (!opencv_installed) { std::cout << "OpenCV is not detected. Using Identity as an initial" << std::endl; } else { std::cout << "OpenCV is detected. using ORB + 5pt algorithm" << std::endl; } return opencv_installed; }

長くなったので続きは次のページ。

今日のそうめん

この業務用そうめんが安くてとても美味しい。

store.shopping.yahoo.co.jp

c++版Open3DのVisualizationは、openFrameworks0.10.0で自前で作ると便利。

Open3Dは、3次元点群処理が簡単に出来て便利です。ですが、いくつか弱点があって

・Visualizationが便利なようで自由が利かず不便

・フォーマットが良く分からなくなることがある。ex. PointCloudとTriangleMeshの違いは?

・他のライブラリとのリンクが不便、だった(過去形)。

openFrameworks0.9.8以前では、Open3Dのリンクはとても面倒くさかったのですが、openFramworks0.10.0からは普通にリンクできるようになりました。

インタラクティブな3D表示はopenFrameworksの得意技なので、もうこっちに任せてしまいましょう。

編集中

ofxThreeGeometries.h

#pragma once #include <iostream> #include <memory> #include <Eigen/Dense> #include <Core/Core.h> #include <IO/IO.h> #include <Visualization/Visualization.h> #include <ofNode.h> #include <ofMesh.h> class ofxThreeGeometries : public ofNode { public: ofVboMesh mesh; // ofMesh mesh; std::vector<ofMatrix4x4> mat; int mat_id; ofxThreeGeometries() { mat.clear(); mat_id = -1; } ~ofxThreeGeometries() { mat.clear(); mesh.clear(); } int set(const three::TriangleMesh &source) { switch (source.GetGeometryType()) { case three::Geometry::GeometryType::Unspecified: std::cout << "Unspecified" << std::endl; break; case three::Geometry::GeometryType::PointCloud: std::cout << "PointCloud" << std::endl; break; case three::Geometry::GeometryType::LineSet: std::cout << "LineSet" << std::endl; break; case three::Geometry::GeometryType::TriangleMesh: std::cout << "TriangleMesh" << std::endl; mesh.clear(); if (source.HasVertices()) { for (int i = 0; i < source.vertices_.size(); i++) { ofVec3f v(source.vertices_.at(i).x(), source.vertices_.at(i).y(), source.vertices_.at(i).z()); mesh.addVertex(v); if (source.HasVertexColors()) { ofColor c(source.vertex_colors_.at(i).x() * 255, source.vertex_colors_.at(i).y() * 255, source.vertex_colors_.at(i).z() * 255); mesh.addColor(c); } if (source.HasVertexNormals()) { ofVec3f n(source.vertex_normals_.at(i).x(), source.vertex_normals_.at(i).y(), source.vertex_normals_.at(i).z()); mesh.addNormal(n); } } } if (source.HasTriangles()) { for (int i = 0; i < source.triangles_.size(); i++) { mesh.addTriangle(source.triangles_.at(i).x(), source.triangles_.at(i).y(), source.triangles_.at(i).z()); if (source.HasTriangleNormals()) { // source.triangle_normals_.at(i).x(); } } } { Eigen::Vector3d c = 0.5 * (source.GetMinBound() + source.GetMaxBound()); this->setPosition(c.x(), c.y(), c.z()); this->setOrientation(ofQuaternion()); } break; case three::Geometry::GeometryType::Image: std::cout << "Image" << std::endl; break; default: break; } return 0; } int set(const three::PointCloud &source) { switch (source.GetGeometryType()) { case three::Geometry::GeometryType::Unspecified: std::cout << "Unspecified" << std::endl; break; case three::Geometry::GeometryType::PointCloud: //std::cout << "PointCloud" << std::endl; mesh.clear(); if (source.HasPoints()) { for (int i = 0; i < source.points_.size(); i++) { ofVec3f v(source.points_.at(i).x(), source.points_.at(i).y(), source.points_.at(i).z()); mesh.addVertex(v); if (source.HasColors()) { ofColor c(source.colors_.at(i).x() * 255, source.colors_.at(i).y() * 255, source.colors_.at(i).z() * 255); mesh.addColor(c); } if (source.HasNormals()) { ofVec3f n(source.normals_.at(i).x(), source.colors_.at(i).y(), source.colors_.at(i).z()); mesh.addNormal(n); } } } { Eigen::Vector3d c = 0.5 * (source.GetMinBound() + source.GetMaxBound()); this->setPosition(c.x(), c.y(), c.z()); } break; case three::Geometry::GeometryType::LineSet: std::cout << "LineSet" << std::endl; break; case three::Geometry::GeometryType::TriangleMesh: std::cout << "TriangleMesh" << std::endl; break; case three::Geometry::GeometryType::Image: std::cout << "Image" << std::endl; break; default: break; } } void addTransformation(const Eigen::Matrix4d &Transformation) { // Transposed ofMatrix4x4 m(Transformation(0, 0), Transformation(1, 0), Transformation(2, 0), Transformation(3, 0), Transformation(0, 1), Transformation(1, 1), Transformation(2, 1), Transformation(3, 1), Transformation(0, 2), Transformation(1, 2), Transformation(2, 2), Transformation(3, 2), Transformation(0, 3), Transformation(1, 3), Transformation(2, 3), Transformation(3, 3)); mat.push_back(m); } void setTransformationId(int i) { if (i >= 0 && i < mat.size()) { mat_id = i; this->setPosition(mat[mat_id].getTranslation()); this->setOrientation(mat[mat_id].getRotate()); } } int getTransformationId() { return mat_id; } void draw(ofPolyRenderMode renderMode) { ofPushMatrix(); if (mat_id >= 0 && mat_id < mat.size()) { ofMultMatrix(mat[mat_id]); } mesh.draw(renderMode); ofPopMatrix(); } void draw() { draw(ofPolyRenderMode::OF_MESH_POINTS); } };

自分用読むべき論文メモ 2018年06月版

Optimal and Autonomous Control Using Reinforcement Learning: A Survey - IEEE Journals & Magazine

機械学習は、制御の自動化の方向へ進んでいくのは間違いない。

Kinematic and Dynamic Solvers | The Orocos Project

KDL IK。シンプルなC++で書かれたIKソルバー。

bitbucket.org

TRAC-IK。KDL-IKよりも性能が良いと謳うが、ROS必須。

[1712.07576] Learning to Act Properly: Predicting and Explaining Affordances from Images

アフォーダンス推定

Networked Virtual Environments: Design and Implementation (Siggraph Series)

- 作者: Sandeep Singhal,Michael Zyda

- 出版社/メーカー: Addison-Wesley

- 発売日: 1999/08/01

- メディア: ハードカバー

- クリック: 6回

- この商品を含むブログを見る

[1603.00448] Guided Cost Learning: Deep Inverse Optimal Control via Policy Optimization

Guided Cost Learning

https://deepmind.com/blog/neural-scene-representation-and-rendering/

NN-SFM

densepose.org

表現が不適切だけれど、FacebookのopenPose3D版

CHOMP - Optimized Robotics

https://personalrobotics.ri.cmu.edu/files/courses/papers/Kalakrishnan11-stomp.pdf

RRTよりスマートな経路計画ライブラリ

ros.youtalk.jp — ROS Industrial MoveIt!事始め

http://www.mech.tohoku-gakuin.ac.jp/rde/contents/sendai/mechatro/archive/RMSeminar_No19.pdf

今日の漫画

数学を題材にした漫画。こんな天才数学少年も探せばいるのかな。

- 作者: 三原和人

- 出版社/メーカー: 講談社

- 発売日: 2017/11/22

- メディア: コミック

- この商品を含むブログ (1件) を見る

- 作者: 三原和人

- 出版社/メーカー: 講談社

- 発売日: 2018/02/23

- メディア: コミック

- この商品を含むブログを見る

- 作者: 三原和人

- 出版社/メーカー: 講談社

- 発売日: 2018/05/23

- メディア: コミック

- この商品を含むブログを見る