xmlrpc for c and c++(xmlrpc-c)をWindows10, openFrameworks環境で使う

XML-RPCは古いインターフェースですが、Pythonの標準ライブラリでサーバーが簡単に作れるので、C++からPythonを呼び出したい時など、時と場合によっては便利に使えます。以前libxmlrpc++を紹介したことがありますが、今回のはxmlrpc for c and c++(xmlrpc-c)です。違いは、xmlrpc-cは非同期(Asynch≒non-blocking)に対応していること(ただしインターフェースは洗練されていない)です。

CMakeサポート版のフォーク xmlrpc-c

github.com

個人のgraugansさんが作られたもので、5か月ぐらい前が最終更新のタイムスタンプです。

HTTP用の外部ライブラリが必要で、

- Curl

- Libwww

- Wininet

から選んで使います。Curlが推奨されていますが、Windowsでは標準で使えるWininetでも特に問題は感じません。ここでは、Wininetを使うことにします。

CMakeLists.txtを編集

どのライブラリを選択してもfindCurlを必ず呼んでここで引っかかってしまうので、コメントアウトしてしまいます。乱暴なことをしていますので、何があっても責任取れません。自分で判断して使ってください。

#find_package(CURL ${tmp})

#ensc_set_bool(MUST_BUILD_CURL_CLIENT ${CURL_FOUND} "Set if Curl client transport shall be built")

あとは普通にcmakeでconfigureとgenerateしてプロジェクトを作ってください。

build

普通にビルドをすると半分ぐらいビルドできますが、半分ぐらいエラーで落ちます。適当にCMakeLists.txtを適当に編集したためにMUST_BUILD_CURL_CLIENTの定義が抜けてしまっているためです。次のように修正します。

transport_config.h

/* -*- c -*- */ #define MUST_BUILD_WININET_CLIENT 1 #define MUST_BUILD_LIBWWW_CLIENT 0 #define MUST_BUILD_CURL_CLIENT 0 //////

bin, include, libを集める

出力ファイルは何故かあちこちにバラバラに配置されています。

cライブラリは、build/src/ReleaseもしくはDebugに.dllと.libがあります。

c++ライブラリは、build/src/cpp/Release もしくはDebugに.dllと.libファイルがあります。

xmlrpc_util.dllとxmlrpc_util.libは、build/lib/libutil/ReleaseもしくはDebugにあります。

includeファイルは、include/xmlrpc-cにあります。

config.hは、build/include/xmlrpc-cにあります。

自分の都合の良いように、適当なフォルダの中にそれぞれ放り込んでください。

サンプルプログラム

たとえば非同期クライアントを動かしてみます。Curlを使っていませんので、Curl対応の関数を読んでいるところをwininet用関数に置き換えます。

https://sourceforge.net/p/xmlrpc-c/code/HEAD/tree/trunk/examples/cpp/asynch_client.cpp

//xmlrpc_c::clientXmlTransport_curl myTransport;

xmlrpc_c::clientXmlTransport_wininet myTransport;

Resultが構造体だったりArrayだったりするときのデータ受け取り方法

xmlrpc++はとてもスマートに直感的にかけたのですが、xmlrpc-cは残念ながらいまいちです。返り値が構造体の場合は、下記のようにデータを解釈させます。

構造体受信の例

jntという名前のdouble型arrayが入っている構造体の受信例です。

string const serverUrl("http://192.168.0.11:4321"); string const methodName("get_joint"); xmlrpc_c::clientSimple myClient; xmlrpc_c::value result; myClient.call(serverUrl, methodName, "", &result); xmlrpc_c::value_struct s(result.cValue()); xmlrpc_c::value_array a(s.cvalue()["jnt"]); for (int i = 0; i < 6; i++) { joint[i] = xmlrpc_c::value_double(a.cvalue()[i]); }

構造体送信の例

xmlrpc_c::clientXmlTransport_wininet myTransport; xmlrpc_c::client_xml myClient(&myTransport); string const methodName("move_joint"); xmlrpc_c::carray a; for (int i = 0; i < 6; i++) { a.push_back(xmlrpc_c::value_double(joint[i])); } xmlrpc_c::cstruct s; s.insert(std::make_pair<std::string, xmlrpc_c::value>("", xmlrpc_c::value_array(a))); xmlrpc_c::paramList sampleAddParms1; sampleAddParms1.add(xmlrpc_c::value_struct(s)); xmlrpc_c::rpcPtr rpc1P(methodName, sampleAddParms1); string const serverUrl("http://192.168.0.11:4321"); xmlrpc_c::carriageParm_curl0 myCarriageParm(serverUrl); rpc1P->start(&myClient, &myCarriageParm); myClient.finishAsync(xmlrpc_c::timeout(100));

今日のノートPC

アマゾン専売のMSIの軽量14インチノート。11万円ぐらいでGeForceMX150搭載。自宅用はもうこれで良いね。

[asin:B07D8PJBGS:detail]

[asin:B07FB27SNB:detail]

自分用読むべき論文メモ 2018年07月版

nuitrack. RealSenseD400シリーズと組み合わせてKinect2の人体認識の代替に使えそうな有料ライブラリ。30$/センサーなので十分安い。

qiita.com

画像処理を高速化できる(2~10倍ぐらい見込める)Halide言語について。

[1807.05520] Deep Clustering for Unsupervised Learning of Visual Features

あとで読む

shiropen.com

アフォーダンス推定、精度がすごい。だけどまだ半信半疑。

プレイヤーが自然に感じる乱数の作り方 - A Successful Failure

騙されないように、知識として知らねば。

blogs.yahoo.co.jp

MARGセンサーのMadgwickフィルター

qiita.com

もう一回見直す。

Dex-Net as a Service (DNaaS)

DEX-NETのWEBサービス版。openFrameworksからアクセスできるようにしてみようかな。

blog.openai.com

regrasping

github.com

seiya-kumada.blogspot.com

3D LevelSet Method。Triangular-faced, watertight 3D object mesh fileな感じのメッシュ再構成などに使う。

av.watch.impress.co.jp

http://www.jiki-sensor.com/topics/files_2017/AK31xx_2017.pdf

超低ノイズ電流センサー「AK31XX」。超興味があるが、これだけの資料じゃ、どう使えばいいのか分からない。

今日のコンピュータビジョン

各章、とてもよくまとまっていて読みやすい。

コンピュータビジョン: 広がる要素技術と応用 (未来へつなぐデジタルシリーズ)

- 作者: 米谷竜,斎藤英雄

- 出版社/メーカー: 共立出版

- 発売日: 2018/06/28

- メディア: 単行本

- この商品を含むブログを見る

メモ:OpenGLの描画結果のデプスバッファから距離情報を復元する方法

OpenGLの描画結果のデプス値を単位を直して画像データとして取得するサンプル。詳細は[1]を参照のこと。

1. glReadPixelsでデプスバッファのデータを読み込み

2. nearClipとfarClipでデプス値を正規化

3. 上下反転されているので入れ替え

ofPixels pix; pix.allocate(ofGetWidth(), ofGetHeight(), OF_IMAGE_COLOR); double depth_scale = 1000.0; std::vector<float> depth_image; depth_image.resize(ofGetWidth() * ofGetHeight()); glReadPixels(0, 0, ofGetWidth(), ofGetHeight(), GL_DEPTH_COMPONENT, GL_FLOAT, depth_image.data()); double z_near = cam.getNearClip(); double z_far = cam.getFarClip(); for (int i = 0; i < ofGetHeight(); i++) { for (int j = 0; j < ofGetWidth(); j++) { double z_depth = 2.0 * z_near * z_far / (z_far + z_near - (2.0 * (double)depth_image[(ofGetHeight() - i - 1) * ofGetWidth() + j] - 1.0) * (z_far - z_near)); uint16_t p = (uint16_t)std::min(std::round(depth_scale * z_depth), (double)INT16_MAX); pix.setColor(j, i, ofColor((float)p / 255, (float)p%255, 0)); } }

plyデータに復元

std::vector<float> depth_image; depth_image.resize(ofGetWidth() * ofGetHeight()); ofMesh depth_pointcloud, output_pointcloud; glFinish(); glReadPixels(0, 0, ofGetWidth(), ofGetHeight(), GL_DEPTH_COMPONENT, GL_FLOAT, depth_image.data()); double z_near = cam.getNearClip(); double z_far = cam.getFarClip(); ofMatrix4x4 mvp_matrix = cam.getProjectionMatrix(); int width = ofGetWidth(); int height = ofGetHeight(); double depth_scale = 1000.0; depth_pointcloud.clear(); for (int i = 0; i < height; i++) { for (int j = 0; j < width; j++) { double p = (double)depth_image[(height - i - 1) * width + j]; double z_depth = 2.0 * z_near * z_far / (z_far + z_near - (2.0 * p - 1.0) * (z_far - z_near)); uint16_t q = (uint16_t)std::min(std::round(depth_scale * z_depth), (double)INT16_MAX); ofVec3f u = Unproject(ofVec3f(j + 0.5, i + 0.5, p), mvp_matrix, width, height); if (u != ofVec3f()) { depth_pointcloud.addVertex(ofVec3f(u.x, -u.y, u.z)); } else { depth_pointcloud.addVertex(u); } } } // add triangle meshes for (int i = 1; i < height; i++) { for (int j = 1; j < width; j++) { ofVec3f a = depth_pointcloud.getVertex(i * width + j); ofVec3f b = depth_pointcloud.getVertex(i * width + (j - 1)); ofVec3f c = depth_pointcloud.getVertex((i - 1) * width + (j - 1)); ofVec3f d = depth_pointcloud.getVertex((i - 1) * width + j); if (a != ofVec3f() && b != ofVec3f() && c != ofVec3f()) { depth_pointcloud.addTriangle(i * width + j, (i - 1)*width + (j - 1), i * width + (j - 1)); } if (a != ofVec3f() && c != ofVec3f() && d != ofVec3f()) { depth_pointcloud.addTriangle(i * width + j, (i - 1)*width + j, (i - 1) * width + (j - 1)); } } } // remove verbose meshes std::map<int, int> idx; int cnt = 0; output_pointcloud.clear(); for (int i = 0; i < depth_pointcloud.getNumVertices(); i++) { if (depth_pointcloud.getVertex(i) == ofVec3f()) { idx[i] = -1; } else { idx[i] = cnt++; output_pointcloud.addVertex(depth_pointcloud.getVertex(i)); } } for (int i = depth_pointcloud.getNumVertices() - 1; i >= 0; i--) { if(idx[i] == -1) { depth_pointcloud.removeVertex(i); } } for (int i = 0; i < depth_pointcloud.getNumIndices(); i++) { depth_pointcloud.setIndex(i, idx[depth_pointcloud.getIndex(i)]); } for (int i = 0; i < depth_pointcloud.getNumIndices(); i+=3) { ofIndexType index1 = depth_pointcloud.getIndex(i); ofIndexType index2 = depth_pointcloud.getIndex(i + 1); ofIndexType index3 = depth_pointcloud.getIndex(i + 2); ofVec3f p1 = depth_pointcloud.getVertex(index1); ofVec3f p2 = depth_pointcloud.getVertex(index2); ofVec3f p3 = depth_pointcloud.getVertex(index3); float len12 = (p2 - p1).length(); float len23 = (p3 - p2).length(); float len31 = (p1 - p3).length(); float len_thresh = 1.f; if ((index1 >= cnt) || (index2 >= cnt) || (index3 >= cnt)) { } else if ((len12 >= len_thresh) || (len23 >= len_thresh) || (len31 >= len_thresh)) { } else { output_pointcloud.addTriangle(index1, index2, index3); } } // save to file output_pointcloud.save(path);

ofVec3f Unproject(const ofVec3f &screen_point, const ofMatrix4x4 &mvp_matrix, const int width, const int height) { ofMatrix4x4 inv_mvp_matrix_t = ofMatrix4x4::getTransposedOf(mvp_matrix.getInverse()); ofVec4f point = inv_mvp_matrix_t * ofVec4f(screen_point.x / (double)width * 2.0 - 1.0, screen_point.y / (double)height * 2.0 - 1.0, screen_point.z * 2.0 - 1.0, 1.0); if (point.w == 0.0) { return ofVec3f(); } point /= point.w; return ofVec3f(point.x, point.y, point.z); }

[1] Open3D Visualization

github.com

今日の座布団

安い椅子での長時間の座り仕事で、お尻が痛くなります。そんな時にはこれ。ちょっと暑いけど。

東京西川 エアー クッション スクエア 5×40×40cm HDB5001016LG

- 出版社/メーカー: AIR(エアー)

- メディア: ホーム&キッチン

- この商品を含むブログを見る

Open3DのReconstruction systemをC++に移植(その3-1 integrate_scene)

integrate_scene.h

#pragma once // Open3D: www.open3d.org // The MIT License (MIT) // See license file or visit www.open3d.org for details #include <Core/Core.h> #include <IO/IO.h> #include <Visualization/Visualization.h> void integrate_scene_main(std::string path_dataset, std::string path_intrinsic);

integrate_scene.cpp

#include "integrate_scene.h" #include "common_py.h" #include <Eigen/Dense> extern std::string template_global_posegraph_optimized; extern std::string template_fragment_posegraph_optimized; extern std::string template_global_mesh; extern three::PinholeCameraIntrinsic read_pinhole_camera_intrinsic(const std::string &filename); void scalable_integrate_rgb_frames(std::string path_dataset, three::PinholeCameraIntrinsic intrinsic, bool draw_result = false) { std::shared_ptr<std::vector<std::string>> color_files; std::shared_ptr<std::vector<std::string>> depth_files; get_rgbd_file_lists(color_files, depth_files, path_dataset); int n_files = static_cast<int>(color_files->size()); int n_frames_per_fragment = 100; int n_fragments = static_cast<int>(std::ceil(float(n_files) / n_frames_per_fragment)); double voxel_length = 3.0 / 512.0; double sdf_trunc = 0.04; bool with_color = true; three::ScalableTSDFVolume volume = three::ScalableTSDFVolume(voxel_length, sdf_trunc, with_color); three::PoseGraph pose_graph_fragment; three::ReadIJsonConvertibleFromJSON(path_dataset + template_global_posegraph_optimized, pose_graph_fragment); for (int fragment_id = 0; fragment_id < pose_graph_fragment.nodes_.size(); fragment_id++) { three::PoseGraph pose_graph_rgbd; std::string filename(path_dataset + template_fragment_posegraph_optimized); sprintf((char*)filename.data(), (path_dataset + template_fragment_posegraph_optimized).c_str(), fragment_id); three::ReadIJsonConvertibleFromJSON(filename, pose_graph_rgbd); for (int frame_id = 0; frame_id < pose_graph_rgbd.nodes_.size(); frame_id++) { int frame_id_abs = fragment_id * n_frames_per_fragment + frame_id; std::cout << "Fragment " << fragment_id << " / " << n_fragments - 1 << " :: integrate rgbd frame " << frame_id_abs << " (" << frame_id + 1 << " of " << pose_graph_rgbd.nodes_.size() << ")." << std::endl; std::shared_ptr<three::Image> color = std::make_shared<three::Image>(); std::shared_ptr<three::Image> depth = std::make_shared<three::Image>(); three::ReadImage((*color_files)[frame_id_abs], *color); three::ReadImage((*depth_files)[frame_id_abs], *depth); double depth_trunc = 3.0; bool convert_rgb_to_intensity = false; std::shared_ptr<three::RGBDImage> rgbd = std::make_shared<three::RGBDImage>(); rgbd = three::CreateRGBDImageFromColorAndDepth(*color, *depth, 1000.0, depth_trunc, convert_rgb_to_intensity); Eigen::Matrix4d pose = pose_graph_fragment.nodes_[fragment_id].pose_ * pose_graph_rgbd.nodes_[frame_id].pose_; volume.Integrate(*rgbd, intrinsic, pose.inverse()); } } std::shared_ptr<three::TriangleMesh> mesh = volume.ExtractTriangleMesh(); mesh->ComputeVertexNormals(); if (draw_result) { three::DrawGeometries({ mesh }); } std::string mesh_name = path_dataset + template_global_mesh; three::WriteTriangleMeshToPLY(mesh_name, *mesh, false, true); } void integrate_scene_main(std::string path_dataset, std::string path_intrinsic) { std::cout << "integrate the whole RGBD sequence using estimated camera pose" << std::endl; std::cout << "path to the dataset " << path_dataset << std::endl; std::cout << "path to the RGBD camera intrinsic " << path_intrinsic << std::endl; three::PinholeCameraIntrinsic intrinsic; if (!path_intrinsic.empty()) { intrinsic = read_pinhole_camera_intrinsic(path_intrinsic); } else { intrinsic = three::PinholeCameraIntrinsic( three::PinholeCameraIntrinsicParameters::PrimeSenseDefault); } scalable_integrate_rgb_frames(path_dataset, intrinsic); }

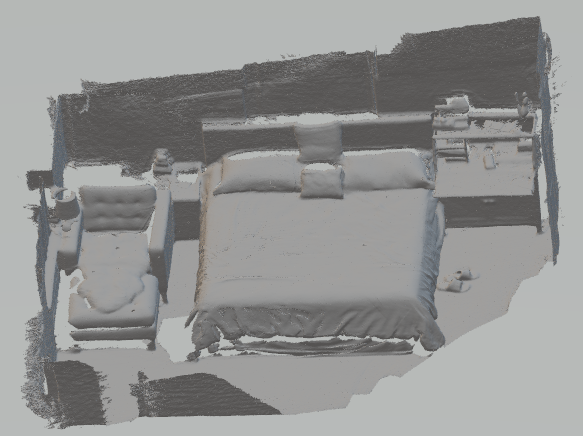

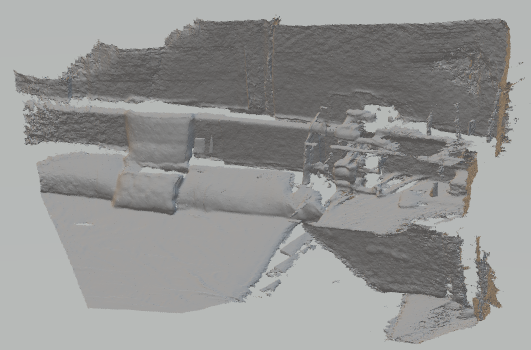

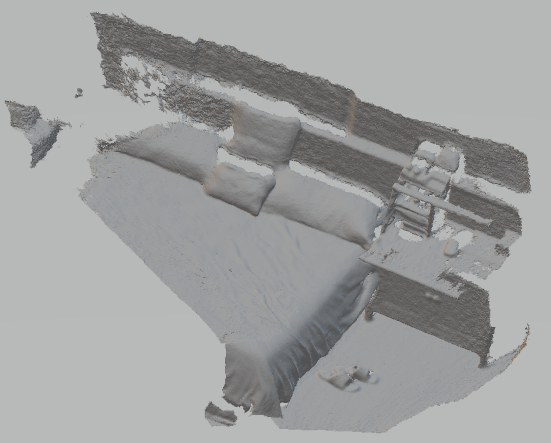

実行結果

Python版の出力サイズと微妙に異なるので、何か間違えているのかも。

Open3DのReconstruction systemをC++に移植(その2-1 register_fragment)

register_fragments.h

#pragma once // Open3D: www.open3d.org // The MIT License (MIT) // See license file or visit www.open3d.org for details #include <Core/Core.h> #include <IO/IO.h> #include <Visualization/Visualization.h> void register_fragments_main(std::string path_dataset_);

register_fragments.cpp

#include "register_fragments.h" #include "common_py.h" #include "optimize_posegraph.h" #include <Core/Registration/PoseGraph.h> #include <Core/Registration/FastGlobalRegistration.h> #include <Core/Registration/ColoredICP.h> #include <Eigen/Dense> ///////////////////////// // some global parameters for the global registration ///////////////////////// extern int n_frames_per_fragment; extern int n_keyframes_per_n_frame; ////////////////////////// // file related ////////////////////////// extern std::string folder_fragment; extern std::string template_fragment_posegraph; extern std::string template_fragment_posegraph_optimized; extern std::string template_fragment_mesh; extern std::string folder_scene; extern std::string template_global_posegraph; extern std::string template_global_posegraph_optimized; extern std::string template_global_mesh; std::tuple<std::shared_ptr<three::PointCloud>, std::shared_ptr<three::Feature>> preprocess_point_cloud(three::PointCloud& pcd) { std::shared_ptr<three::PointCloud> pcd_down = std::make_shared<three::PointCloud>(); pcd_down = three::VoxelDownSample(pcd, 0.05); double radius = 0.1; int max_nn = 30; three::EstimateNormals(*pcd_down, three::KDTreeSearchParamHybrid(radius, max_nn)); radius = 0.25; max_nn = 100; std::shared_ptr<three::Feature> pcd_fpfh = std::make_shared<three::Feature>(); pcd_fpfh = three::ComputeFPFHFeature(*pcd_down, three::KDTreeSearchParamHybrid(radius, max_nn)); return std::tuple<std::shared_ptr<three::PointCloud>, std::shared_ptr<three::Feature>>(pcd_down, pcd_fpfh); } std::tuple<bool, three::RegistrationResult> register_point_cloud_fpfh(three::PointCloud& source, three::PointCloud& target, three::Feature& source_fpfh, three::Feature& target_fpfh) { double maximum_correspondence_distance = 0.07; three::RegistrationResult result = three::FastGlobalRegistration( source, target, source_fpfh, target_fpfh, three::FastGlobalRegistrationOption(1.399999999999911, false, true, maximum_correspondence_distance)); if (result.transformation_.trace() == 4.0) { return std::tuple<bool, three::RegistrationResult>(false, Eigen::Matrix4d::Identity()); } return std::tuple<bool, three::RegistrationResult>(true, result); } void draw_registration_result(three::PointCloud& source, three::PointCloud& target, Eigen::Matrix4d transformation) { std::shared_ptr<three::PointCloud> source_temp = std::make_shared<three::PointCloud>(source); std::shared_ptr<three::PointCloud> target_temp = std::make_shared<three::PointCloud>(target); source_temp->PaintUniformColor(Eigen::Vector3d(1., 0.706, 0.)); target_temp->PaintUniformColor(Eigen::Vector3d(0., 0.651, 0.929)); source_temp->Transform(transformation); three::DrawGeometries({ source_temp, target_temp }); } std::tuple<bool, Eigen::Matrix4d> compute_initial_registartion(int s, int t, three::PointCloud& source_down, three::PointCloud& target_down, three::Feature& source_fpfh, three::Feature& target_fpfh, std::string path_dataset, bool draw_result = false) { Eigen::Matrix4d transformation; if (t == s + 1) { // odomety case std::cout << "Using RGBD odometry" << std::endl; std::string filename(path_dataset + template_fragment_posegraph_optimized); sprintf((char*)filename.data(), (path_dataset + template_fragment_posegraph_optimized).c_str(), s); three::PoseGraph pose_graph_frag; three::ReadIJsonConvertibleFromJSON(filename, pose_graph_frag); int n_nodes = static_cast<int>(pose_graph_frag.nodes_.size()); transformation = pose_graph_frag.nodes_[n_nodes - 1].pose_.inverse(); std::cout << pose_graph_frag.nodes_[0].pose_ << std::endl; std::cout << transformation << std::endl; } else { // loop closure case std::cout << "register_point_cloud_fpfh" << std::endl; auto ret = register_point_cloud_fpfh( source_down, target_down, source_fpfh, target_fpfh); bool success_ransac = std::get<0>(ret); three::RegistrationResult result_ransac = std::get<1>(ret); if (!success_ransac) { std::cout << "No reasonable solution. Skip this pair" << std::endl; return std::tuple<bool, Eigen::Matrix4d>(false, Eigen::Matrix4d::Identity()); } else { transformation = result_ransac.transformation_; } std::cout << transformation << std::endl; } if (draw_result) { draw_registration_result(source_down, target_down, transformation); } return std::tuple<bool, Eigen::Matrix4d>(true, transformation); } // colored pointcloud registration // This is implementation of following paper // J. Park, Q.-Y. Zhou, V. Koltun, // Colored Point Cloud Registration Revisited, ICCV 2017 std::tuple<Eigen::Matrix4d, Eigen::Matrix6d> register_colored_point_cloud_icp(three::PointCloud& source, three::PointCloud& target, Eigen::Matrix4d init_transformation = Eigen::Matrix4d::Identity(), std::vector<double> voxel_radius = std::vector<double>({ 0.05, 0.025, 0.0125 }), std::vector<int> max_iter = std::vector<int>({ 50, 30, 14 }), bool draw_result = false) { Eigen::Matrix4d current_transformation = init_transformation; three::RegistrationResult result_icp; for (int scale = 0; scale < max_iter.size(); scale++) { // multi-scale approach int iter = max_iter[scale]; double radius = voxel_radius[scale]; std::cout << "radius " << radius << std::endl; std::shared_ptr<three::PointCloud> source_down = three::VoxelDownSample(source, radius); std::shared_ptr<three::PointCloud> target_down = three::VoxelDownSample(target, radius); double radius_n = radius * 2; int max_nn = 30; three::EstimateNormals(*source_down, three::KDTreeSearchParamHybrid(radius_n, max_nn)); if (0) { // print(np.assarray(source_down.normals)) for (int i = 0; i < source_down->normals_.size(); i++) { std::cout << source_down->normals_[i] << " "; } std::cout << std::endl; } three::EstimateNormals(*target_down, three::KDTreeSearchParamHybrid(radius_n, max_nn)); double relative_fitness = 1e-6; double relative_rmse = 1e-6; int max_iteration = iter; result_icp = three::RegistrationColoredICP(*source_down, *target_down, radius, current_transformation, three::ICPConvergenceCriteria(relative_fitness, relative_rmse, max_iteration)); current_transformation = result_icp.transformation_; } Eigen::Matrix6d information_matrix = three::GetInformationMatrixFromPointClouds( source, target, 0.07, result_icp.transformation_); if (draw_result) { draw_registration_result_original_color(source, target, result_icp.transformation_); } return std::tuple<Eigen::Matrix4d, Eigen::Matrix6d>(result_icp.transformation_, information_matrix); } std::tuple<bool, Eigen::Matrix4d, Eigen::Matrix6d> local_refinement(int s, int t, three::PointCloud& source, three::PointCloud& target, Eigen::Matrix4d transformation_init, bool draw_result = false) { Eigen::Matrix4d transformation; Eigen::Matrix6d information; if (t == s + 1) { // odometry case std::cout << "register_point_cloud_icp" << std::endl; std::vector<double> voxel_radius({ 0.0125 }); std::vector<int> max_iter({ 30 }); auto ret = register_colored_point_cloud_icp( source, target, transformation_init, voxel_radius, max_iter); transformation = std::get<0>(ret); information = std::get<1>(ret); } else { // loop closure case std::cout << "register_colored_point_cloud" << std::endl; auto ret = register_colored_point_cloud_icp( source, target, transformation_init); transformation = std::get<0>(ret); information = std::get<1>(ret); } bool success_local = false; if ((information(5, 5) / std::min(source.points_.size(), target.points_.size())) > 0.3) { success_local = true; } if (draw_result) { draw_registration_result_original_color( source, target, transformation); } return std::tuple<bool, Eigen::Matrix4d, Eigen::Matrix6d>(success_local, transformation, information); } std::tuple<Eigen::Matrix4d, three::PoseGraph> update_odometry_posegraph(int s, int t, Eigen::Matrix4d transformation, Eigen::Matrix6d information, Eigen::Matrix4d odometry, three::PoseGraph& pose_graph) { std::cout << "Update PoseGraph" << std::endl; if (t == s + 1) { // odometry case odometry = transformation * odometry; Eigen::Matrix4d odometry_inv = odometry.inverse(); pose_graph.nodes_.push_back(three::PoseGraphNode(odometry_inv)); bool uncertain = false; pose_graph.edges_.push_back( three::PoseGraphEdge(s, t, transformation, information, uncertain)); } else { // loop closure case bool uncertain = true; pose_graph.edges_.push_back( three::PoseGraphEdge(s, t, transformation, information, uncertain)); } return std::tuple<Eigen::Matrix4d, three::PoseGraph>(odometry, pose_graph); } void register_point_cloud(std::string path_dataset, std::vector<std::string>& ply_file_names, bool draw_result = false) { three::PoseGraph pose_graph = three::PoseGraph(); Eigen::Matrix4d odometry = Eigen::Matrix4d::Identity(); pose_graph.nodes_.push_back(three::PoseGraphNode(odometry)); Eigen::Matrix6d info = Eigen::Matrix6d::Identity(); int n_files = static_cast<int>(ply_file_names.size()); for (int s = 0; s < n_files; s++) { for (int t = s + 1; t < n_files; t++) { std::shared_ptr<three::PointCloud> source = std::make_shared<three::PointCloud>(); std::shared_ptr<three::PointCloud> target = std::make_shared<three::PointCloud>(); std::cout << "reading " << ply_file_names[s] << " ..."; bool ret = three::ReadPointCloud(ply_file_names[s], *source); std::cout << "reading " << ply_file_names[s] << " ..."; ret = three::ReadPointCloud(ply_file_names[t], *target); auto ret_s = preprocess_point_cloud(*source); std::shared_ptr<three::PointCloud> source_down = std::get<0>(ret_s); std::shared_ptr<three::Feature> source_fpfh = std::get<1>(ret_s); auto ret_t = preprocess_point_cloud(*target); std::shared_ptr<three::PointCloud> target_down = std::get<0>(ret_t); std::shared_ptr<three::Feature> target_fpfh = std::get<1>(ret_t); auto ret_c = compute_initial_registartion( s, t, *source_down, *target_down, *source_fpfh, *target_fpfh, path_dataset, draw_result); bool success_global = std::get<0>(ret_c); Eigen::Matrix4d transformation_init = std::get<1>(ret_c); if (!success_global) { continue; } //std::cout << "success global" << s << " , " << t << std::endl; auto ret_l = local_refinement(s, t, *source, *target, transformation_init, draw_result); bool success_local = std::get<0>(ret_l); Eigen::Matrix4d transformation_icp = std::get<1>(ret_l); Eigen::Matrix6d information_icp = std::get<2>(ret_l); if (!success_local) { continue; } //std::cout << "success local" << s << " , " << t << std::endl; auto ret_u = update_odometry_posegraph(s, t, transformation_icp, information_icp, odometry, pose_graph); odometry = std::get<0>(ret_u); // pose_graph = std::get<1>(ret_u); // std::cout << pose_graph << std::endl; } } // three::WritePoseGraph(path_dataset + template_global_posegraph, pose_graph); three::WriteIJsonConvertibleToJSON(path_dataset + template_global_posegraph, pose_graph); } void register_fragments_main(std::string path_dataset) { three::SetVerbosityLevel(three::VerbosityLevel::VerboseDebug); std::shared_ptr<std::vector<std::string>> ply_file_names = get_file_list(path_dataset + folder_fragment, ".ply"); make_folder(path_dataset + folder_scene); register_point_cloud(path_dataset, *ply_file_names); optimize_posegraph_for_scene(path_dataset); }

Open3DのReconstruction systemをC++に移植(その1-3 make_fragment)

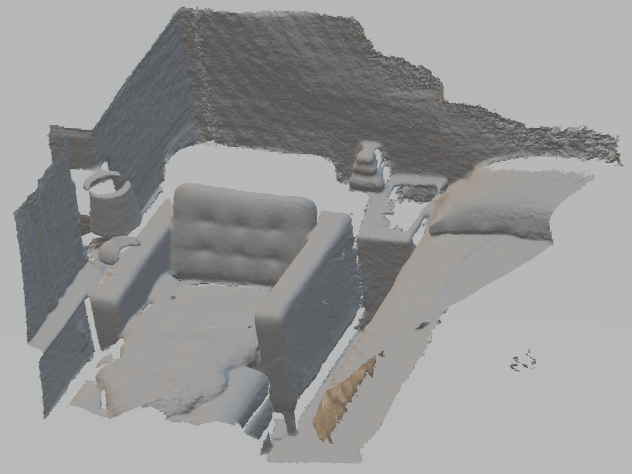

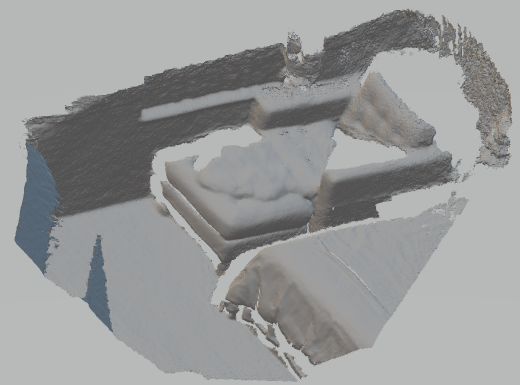

openFrameworksの呼び出し部分の例と実行結果。

ofApp.cpp

// Open3D: www.open3d.org // The MIT License (MIT) // See license file or visit www.open3d.org for details #include "ofApp.h" #include "make_fragments.h" #pragma comment(lib, "Open3D.lib") //#pragma comment(lib, "Core.lib") //#pragma comment(lib, "IO.lib") //#pragma comment(lib, "Visualization.lib") #pragma comment(lib, "jsoncpp.lib") #pragma comment(lib, "jpeg.lib") #pragma comment(lib, "tinyfiledialogs.lib") #pragma comment(lib, "png.lib") #pragma comment(lib, "opencv_core400.lib") #pragma comment(lib, "opencv_features2d400.lib") #pragma comment(lib, "opencv_highgui400.lib") #pragma comment(lib, "opencv_calib3d400.lib") //-------------------------------------------------------------- void ofApp::setup() { make_fragments_main("D:/workspace/016", ""); register_fragments_main("D:/workspace/016"); integrate_scene_main("D:/workspace/016", ""); } //-------------------------------------------------------------- void ofApp::update() { } //-------------------------------------------------------------- void ofApp::draw() { } //-------------------------------------------------------------- void ofApp::keyPressed(int key) { } //-------------------------------------------------------------- void ofApp::keyReleased(int key) { } //-------------------------------------------------------------- void ofApp::mouseMoved(int x, int y) { } //-------------------------------------------------------------- void ofApp::mouseDragged(int x, int y, int button) { } //-------------------------------------------------------------- void ofApp::mousePressed(int x, int y, int button) { } //-------------------------------------------------------------- void ofApp::mouseReleased(int x, int y, int button) { } //-------------------------------------------------------------- void ofApp::mouseEntered(int x, int y) { } //-------------------------------------------------------------- void ofApp::mouseExited(int x, int y) { } //-------------------------------------------------------------- void ofApp::windowResized(int w, int h) { } //-------------------------------------------------------------- void ofApp::gotMessage(ofMessage msg) { } //-------------------------------------------------------------- void ofApp::dragEvent(ofDragInfo dragInfo) { }

実行結果

一つのセグメントの処理が一巡すると下のようなメッセージが表示されて/fragmentsフォルダの下に次の3つのファイルが生成されます。

[GlobalOptimizationLM] Optimizing PoseGraph having 100 nodes and 118 edges.

Line process weight : 44793.956121

[Initial ] residual : 2.438500e+04, lambda : 2.543928e+02

[Iteration 00] residual : 5.961908e+03, valid edges : 118, time : 0.005 sec.

[Iteration 01] residual : 3.527648e+03, valid edges : 118, time : 0.005 sec.

[Iteration 02] residual : 3.525901e+03, valid edges : 118, time : 0.005 sec.

[Iteration 03] residual : 3.525896e+03, valid edges : 118, time : 0.005 sec.

Current_residual - new_residual < 1.000000e-06 * current_residual

[GlobalOptimizationLM] total time : 0.025 sec.

[GlobalOptimizationLM] Optimizing PoseGraph having 100 nodes and 118 edges.

Line process weight : 44793.956121

[Initial ] residual : 3.525896e+03, lambda : 2.608960e+02

Current_residual - new_residual < 1.000000e-06 * current_residual

[GlobalOptimizationLM] total time : 0.003 sec.

CompensateReferencePoseGraphNode : reference : 0

fragment_000.json (posegarph)

fragment_000.ply (mesh)

fragment_optimized_000.json (最適化したposegraph)

今日のそうめん

もっともっと大きなのもオススメ。

store.shopping.yahoo.co.jp

Open3DのReconstruction systemをC++に移植(その1-2 make_fragment)

make_fragmentのc++移植の続き

opencv_pose_estimation.h

#pragma once // Open3D: www.open3d.org // The MIT License (MIT) // See license file or visit www.open3d.org for details #include <Core/Core.h> #include <IO/IO.h> #include <Visualization/Visualization.h> #include <opencv/cv.h> std::tuple<bool, Eigen::Matrix4d> pose_estimation(three::RGBDImage& source_rgbd_image, three::RGBDImage& target_rgbd_image, three::PinholeCameraIntrinsic intrinsic, bool debug_draw_correspondences); std::tuple<bool, Eigen::Matrix4d, std::vector<int>&> estimate_3D_transform_RANSAC(std::vector<Eigen::Vector3d>& pts_xyz_s, std::vector<Eigen::Vector3d>& pts_xyz_t); std::tuple<Eigen::Matrix3d, Eigen::Vector3d> estimate_3D_transform(std::vector<Eigen::Vector3d>& input_xyz_s, std::vector<Eigen::Vector3d>& input_xyz_t); Eigen::Vector3d get_xyz_from_pts(cv::Point2d pts_row, cv::Mat& depth, double px, double py, double focal); Eigen::Vector3d get_xyz_from_uv(double u, double v, double d, double px, double py, double focal);

opencv_pose_estimation.cpp

// following original code is tested with OpenCV 3.2.0 and Python2.7 #include "opencv_pose_estimation.h" #include <random> #include <Eigen/SVD> #include <Eigen/LU> #include <opencv/cv.h> #include <opencv2/features2d.hpp> #include <opencv2/highgui.hpp> #include <opencv2/calib3d.hpp> std::tuple<bool, Eigen::Matrix4d> pose_estimation(three::RGBDImage& source_rgbd_image, three::RGBDImage& target_rgbd_image, three::PinholeCameraIntrinsic pinhole_camera_intrinsic, bool debug_draw_correspondences) { bool success = false; Eigen::Matrix4d trans = Eigen::Matrix4d::Identity(); // transform double array to unit8 array cv::Mat color_cv_s_f(source_rgbd_image.color_.height_, source_rgbd_image.color_.width_, CV_32FC1); cv::Mat color_cv_t_f(target_rgbd_image.color_.height_, target_rgbd_image.color_.width_, CV_32FC1); memcpy((void*)(color_cv_s_f.data), (void*)(&source_rgbd_image.color_.data_[0]), source_rgbd_image.color_.width_ * source_rgbd_image.color_.height_ * source_rgbd_image.color_.num_of_channels_ * source_rgbd_image.color_.bytes_per_channel_); memcpy((void*)(color_cv_t_f.data), (void*)(&target_rgbd_image.color_.data_[0]), target_rgbd_image.color_.width_ * target_rgbd_image.color_.height_ * target_rgbd_image.color_.num_of_channels_ * target_rgbd_image.color_.bytes_per_channel_); cv::Mat color_cv_s; cv::Mat color_cv_t; color_cv_s_f.convertTo(color_cv_s, CV_8UC1, 255); color_cv_t_f.convertTo(color_cv_t, CV_8UC1, 255); int nfeatures = 100; float scaleFactor = 1.2f; int nlevels = 8; int edgeThreshold = 31; int firstLevel = 0; int WTA_K = 2; int scoreType = cv::ORB::HARRIS_SCORE; int patchSize = 31; cv::Ptr<cv::ORB> orb = cv::ORB::create(nfeatures, scaleFactor, nlevels, edgeThreshold, firstLevel, WTA_K, scoreType, patchSize); // to save time cv::Mat des_s, des_t; std::vector<cv::KeyPoint> kp_s, kp_t; orb->detect(color_cv_s, kp_s); orb->detect(color_cv_t, kp_t); orb->compute(color_cv_s, kp_s, des_s); orb->compute(color_cv_t, kp_t, des_t); if ((kp_s.size() == 0) || (kp_t.size() == 0)) { return std::tuple<bool, Eigen::Matrix4d>(success, trans); } cv::Ptr<cv::DescriptorMatcher> matcher = cv::DescriptorMatcher::create("BruteForce-Hamming"); std::vector<cv::DMatch> dmatch; dmatch.clear(); std::vector<cv::DMatch> dmatch12, dmatch21; matcher->match(des_s, des_t, dmatch12); matcher->match(des_t, des_s, dmatch21); // CrossCheck = True for (size_t i = 0; i < dmatch12.size(); ++i) { cv::DMatch m12 = dmatch12[i]; cv::DMatch m21 = dmatch21[m12.trainIdx]; if (m21.trainIdx == m12.queryIdx) dmatch.push_back(m12); } int count = static_cast<int>(dmatch.size()); std::vector<cv::Point2d> pts_s; std::vector<cv::Point2d> pts_t; pts_s.clear(); pts_t.clear(); for (int i = 0; i < dmatch.size(); i++) { pts_t.push_back(kp_t[dmatch[i].trainIdx].pt); pts_s.push_back(kp_s[dmatch[i].queryIdx].pt); } // inlier points after initial BF matching if (debug_draw_correspondences) { cv::Mat result; drawMatches(color_cv_s, kp_s, color_cv_t, kp_t, dmatch, result); cv::imshow("Initial BF matching", result); cv::waitKey(1); // draw_correspondences(); } double focal_input = (pinhole_camera_intrinsic.intrinsic_matrix_(0, 0) + pinhole_camera_intrinsic.intrinsic_matrix_(1, 1)) / 2.0; double pp_x = pinhole_camera_intrinsic.intrinsic_matrix_(0, 2); double pp_y = pinhole_camera_intrinsic.intrinsic_matrix_(1, 2); // Essential matrix is made for masking inliers std::vector<cv::Point2i> pts_s_int; std::vector<cv::Point2i> pts_t_int; for (int i = 0; i < pts_s.size(); i++) { pts_s_int.push_back(cv::Point2i(pts_s[i].x + 0.5, pts_s[i].y + 0.5)); } for (int i = 0; i < pts_t.size(); i++) { pts_t_int.push_back(cv::Point2i(pts_t[i].x + 0.5, pts_t[i].y + 0.5)); } cv::Mat E, mask; double focal = focal_input; cv::Point2d pp(pp_x, pp_y); int method = cv::RANSAC; double prob = 0.999; double threshold = 1.0; E = cv::findEssentialMat(pts_s_int, pts_t_int, focal, pp, method, prob, threshold, mask); if (mask.empty()) { return std::tuple<bool, Eigen::Matrix4d>(success, trans); } // inlier points after 5pt algorithm if (debug_draw_correspondences) { } // make 3D correspondences cv::Mat depth_s(source_rgbd_image.depth_.height_, source_rgbd_image.depth_.width_, CV_32FC1); cv::Mat depth_t(source_rgbd_image.depth_.height_, source_rgbd_image.depth_.width_, CV_32FC1); memcpy((void*)(depth_s.data), (void*)(&source_rgbd_image.depth_.data_[0]), source_rgbd_image.depth_.width_ * source_rgbd_image.depth_.height_ * source_rgbd_image.depth_.num_of_channels_ * source_rgbd_image.depth_.bytes_per_channel_); memcpy((void*)(depth_t.data), (void*)(&target_rgbd_image.depth_.data_[0]), target_rgbd_image.depth_.width_ * target_rgbd_image.depth_.height_ * target_rgbd_image.depth_.num_of_channels_ * target_rgbd_image.depth_.bytes_per_channel_); std::vector<Eigen::Vector3d> pts_xyz_s; std::vector<Eigen::Vector3d> pts_xyz_t; pts_xyz_s.clear(); pts_xyz_t.clear(); int cnt = 0; for (int i = 0; i < pts_s.size(); i++) { if (mask.at<int>(i)) { pts_xyz_s.push_back(get_xyz_from_pts(pts_s[i], depth_s, pp_x, pp_y, focal_input)); pts_xyz_t.push_back(get_xyz_from_pts(pts_t[i], depth_t, pp_x, pp_y, focal_input)); cnt = cnt + 1; } } auto ret = estimate_3D_transform_RANSAC(pts_xyz_s, pts_xyz_t); success = std::get<0>(ret); trans = std::get<1>(ret); std::vector<int> inlier_id_vec = std::get<2>(ret); if (debug_draw_correspondences) { } return std::tuple<bool, Eigen::Matrix4d>(success, trans); } std::tuple<bool, Eigen::Matrix4d, std::vector<int>&> estimate_3D_transform_RANSAC(std::vector<Eigen::Vector3d>& pts_xyz_s, std::vector<Eigen::Vector3d>& pts_xyz_t) { int max_iter = 1000; double max_distance = 0.05; int n_sample = 5; int n_points = static_cast<int>(pts_xyz_s.size()); Eigen::Matrix4d Transform_good = Eigen::Matrix4d::Identity(); int max_inlier = n_sample; std::vector<int> inlier_vec_good; inlier_vec_good.clear(); bool success = false; if (n_points < n_sample) { return std::tuple<bool, Eigen::Matrix4d, std::vector<int>&>(success, Transform_good, inlier_vec_good); } for (int i = 0; i < max_iter; i++) { std::vector<Eigen::Vector3d> sample_xyz_s; std::vector<Eigen::Vector3d> sample_xyz_t; // sampling std::random_device rnd; std::mt19937 mt(rnd()); std::uniform_int_distribution<> randn(0, n_points); for (int j = 0; j < n_sample; j++) { int rand_idx = randn(mt); sample_xyz_s.push_back(pts_xyz_s[rand_idx]); sample_xyz_t.push_back(pts_xyz_t[rand_idx]); } auto ret = estimate_3D_transform(sample_xyz_s, sample_xyz_t); Eigen::Matrix3d R_approx = std::get<0>(ret); Eigen::Vector3d t_approx = std::get<1>(ret); // evaluation std::vector<Eigen::Vector3d> diff_mat; for (int j = 0; j < n_points; j++) { diff_mat.push_back(pts_xyz_t[j] - (R_approx * pts_xyz_s[j] + t_approx)); } std::vector<double> diff; for (int j = 0; j < n_points; j++) { diff.push_back(diff_mat[j].norm()); } int n_inlier = 0; for (int j = 0; j < diff.size(); j++) { double diff_iter = diff[j]; if (diff_iter < max_distance) { n_inlier += 1; } } // note: diag(R_approx) > 0 prevents ankward transformation between // RGBD pair of relatively small amount of baseline. if ((n_inlier > max_inlier) && (R_approx.determinant() != 0.) && (R_approx(0, 0) > 0) && (R_approx(1, 1) > 0) && (R_approx(2, 2) > 0)) { for (int j = 0; j < 3; j++) { for (int k = 0; k < 3; k++) { Transform_good(j, k) = R_approx(j, k); } Transform_good(j, 3) = t_approx(j); } // std::cout << "Transform_good = \n" << Transform_good << std::endl; max_inlier = n_inlier; inlier_vec_good.clear(); for (int id_iter = 0; id_iter < n_points; id_iter++) { double diff_iter = diff[id_iter]; if (diff_iter < max_distance) { inlier_vec_good.push_back(id_iter); } } success = true; } } return std::tuple<bool, Eigen::Matrix4d, std::vector<int>&>(success, Transform_good, inlier_vec_good); } // signular value decomposition approach // based on the description in the sec 3.1.2 in // http::/graphics.stanford.edu/~smr/ICP/comparison/eggert_comparison_mva97.pdf std::tuple<Eigen::Matrix3d, Eigen::Vector3d> estimate_3D_transform(std::vector<Eigen::Vector3d>& input_xyz_s, std::vector<Eigen::Vector3d>& input_xyz_t) { // compute H int n_points = static_cast<int>(input_xyz_s.size()); Eigen::Vector3d mean_s(0., 0., 0.); for (int i = 0; i < input_xyz_s.size(); i++) { mean_s += input_xyz_s[i]; } mean_s /= n_points; Eigen::Vector3d mean_t(0., 0., 0.); for (int i = 0; i < input_xyz_t.size(); i++) { mean_t += input_xyz_t[i]; } mean_t /= n_points; Eigen::MatrixXd xyz_diff_s(3, input_xyz_s.size()); for (int i = 0; i < input_xyz_s.size(); i++) { for (int j = 0; j < 3; j++) { xyz_diff_s(j, i) = input_xyz_s[i](j) - mean_s(j); } } Eigen::MatrixXd xyz_diff_t(3, input_xyz_t.size()); for (int i = 0; i < input_xyz_t.size(); i++) { for (int j = 0; j < 3; j++) { xyz_diff_t(j, i) = input_xyz_t[i](j) - mean_t(j); } } Eigen::MatrixXd H; H.resize(3, 3); H = xyz_diff_s * xyz_diff_t.transpose(); // solve system Eigen::JacobiSVD<Eigen::MatrixXd> svd(H, Eigen::ComputeFullU | Eigen::ComputeFullV); Eigen::Matrix3d R_approx = svd.matrixV().transpose() * svd.matrixU().transpose(); if (R_approx.determinant() < 0.0) { double det = (svd.matrixU() * svd.matrixV()).determinant(); Eigen::Matrix3d D = Eigen::Matrix3d::Identity(); D(2, 2) = det; R_approx = svd.matrixU() * (D * svd.matrixV()); } Eigen::Vector3d t_approx = mean_t - (R_approx * mean_s); return std::tuple<Eigen::Matrix3d, Eigen::Vector3d>(R_approx, t_approx); } Eigen::Vector3d get_xyz_from_pts(cv::Point2d pts_row, cv::Mat& depth, double px, double py, double focal) { double u = pts_row.x; double v = pts_row.y; int u0 = int(u); int v0 = int(v); int height = depth.rows; int width = depth.cols; // bilinear depth interpolation if ((u0 > 0) && (u0 < width - 1) && (v0 > 0) && (v0 < height - 1)) { double up = pts_row.x - u0; double vp = pts_row.y - v0; double d0 = depth.at<double>(v0, u0); double d1 = depth.at<double>(v0, u0 + 1); double d2 = depth.at<double>(v0 + 1, u0); double d3 = depth.at<double>(v0 + 1, u0); double d = (1 - vp) * (d1 * up + d0 * (1 - up)) + vp * (d3 * up + d2 * (1 - up)); return get_xyz_from_uv(u, v, d, px, py, focal); } return Eigen::Vector3d(0., 0., 0.); } Eigen::Vector3d get_xyz_from_uv(double u, double v, double d, double px, double py, double focal) { double x, y; if (focal != 0.) { x = (u - px) / focal + d; y = (v - py) / focal + d; } else { x = 0.; y = 0.; } return Eigen::Vector3d(x, y, d).transpose(); }

optimize_posegraph.h

#pragma once // Open3D: www.open3d.org // The MIT License (MIT) // See license file or visit www.open3d.org for details #include <Core/Core.h> #include <IO/IO.h> #include <Visualization/Visualization.h> void optimize_posegraph_for_fragment(std::string path_dataset, int fragment_id); void optimize_posegraph_for_scene(std::string path_dataset);

optimize_posegraph.cpp

#include "optimize_posegraph.h" #include <Core/Registration/PoseGraph.h> #include <Core/Registration/GlobalOptimizationMethod.h> #include <Core/Registration/GlobalOptimizationConvergenceCriteria.h> #include <Core/Registration/GlobalOptimization.h> #include <IO/ClassIO/PoseGraphIO.h> extern std::string template_fragment_posegraph; extern std::string template_fragment_posegraph_optimized; extern std::string template_global_posegraph; extern std::string template_global_posegraph_optimized; void run_posegraph_optimization(std::string pose_graph_name, std::string pose_graph_optimized_name, double max_correspondence_distance, double preference_loop_closure) { // to display messages from global optimization three::SetVerbosityLevel(three::VerbosityLevel::VerboseDebug); const three::GlobalOptimizationMethod & method = three::GlobalOptimizationLevenbergMarquardt(); const three::GlobalOptimizationConvergenceCriteria &criteria = three::GlobalOptimizationConvergenceCriteria(); const three::GlobalOptimizationOption &option = three::GlobalOptimizationOption(max_correspondence_distance, 0.25, preference_loop_closure, 0); three::PoseGraph pose_graph; //three::ReadPoseGraph(pose_graph_name, pose_graph); // Read PoseGraph failed: unknown file extension. three::ReadIJsonConvertibleFromJSON(pose_graph_name, pose_graph); three::GlobalOptimization(pose_graph, method, criteria, option); //three::WritePoseGraph(pose_graph_optimized_name, pose_graph); // Write PoseGraph failed: unknown file extension. three::WriteIJsonConvertibleToJSON(pose_graph_optimized_name, pose_graph); three::SetVerbosityLevel(three::VerbosityLevel::VerboseError); } void optimize_posegraph_for_fragment(std::string path_dataset, int fragment_id) { std::string pose_graph_name(path_dataset + template_fragment_posegraph); sprintf((char*)pose_graph_name.data(), (path_dataset + template_fragment_posegraph).c_str(), fragment_id); std::string pose_graph_optimized_name(path_dataset + template_fragment_posegraph_optimized); sprintf((char*)pose_graph_optimized_name.data(), (path_dataset + template_fragment_posegraph_optimized).c_str(), fragment_id); run_posegraph_optimization(pose_graph_name, pose_graph_optimized_name, 0.07, 0.1); } void optimize_posegraph_for_scene(std::string path_dataset) { std::string pose_graph_name(path_dataset + template_global_posegraph); std::string pose_graph_optimized_name(path_dataset + template_global_posegraph_optimized); double max_correspondence_distance = 0.07; double preference_loop_closure = 2.0; run_posegraph_optimization(pose_graph_name, pose_graph_optimized_name, max_correspondence_distance, preference_loop_closure); }

注意点

- ファイル入出力だけにopenFrameworksの関数を使っています。ofDirなど。代替のライブラリがあれば、そちらに書き換えてください。

- ReadPoseGraph, WritePoseGraphなどファイルの入出力のユーティリティ関数において、拡張子の照合が上手くいかなくて正しく動作しません。代わりに、拡張子を特定してWriteJsonConvertibleToJSONなどを直接呼んでいます。

- デバッグ用の表示関数を省略しています。つまり、ちゃんとデバッグできていない可能性があります。

- Eigenを使い慣れてないせいで無駄にstd::vectorと組み合わせたり、汚い書き方していますがご容赦を。もしくはきれいに書き換えてください。

今日のそうめん

そうめんは古くなるほどおいしくなるそうなので、もっと大きなやつもオススメ。

store.shopping.yahoo.co.jp