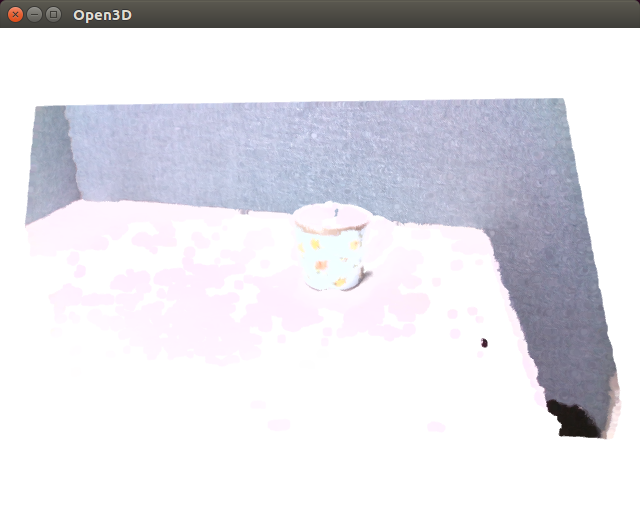

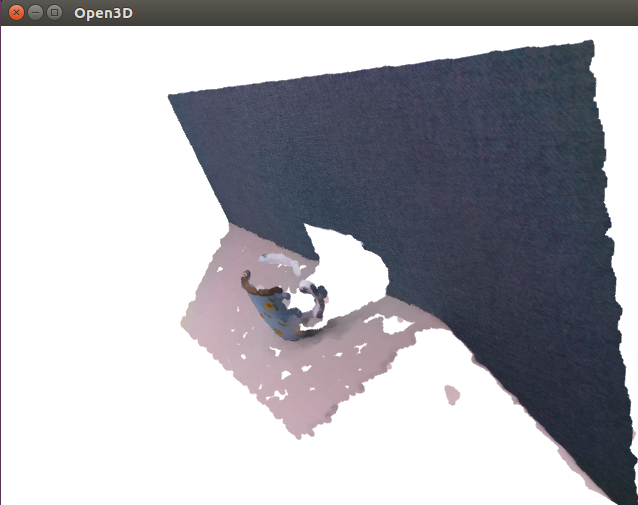

Open3DをIntel RealSense D435で動かすためにTestRealSense.cppをlibrealsense2に対応するよう改造する(その2)色付きPointCloudに変換

RealSense D435で取得したRGBColor画像とDepthをDepth解像度でlibrealsense側でalignして、Open3DのRGBD画像を作り、RGBD画像をさらにPointCloudに変換するサンプルです。

目視で確認したい場合は、DrawGeometriesのコメントアウトを外してください。ただし、1フレーム毎にループ処理が止まります。

// ---------------------------------------------------------------------------- // - Open3D: www.open3d.org - // ---------------------------------------------------------------------------- // The MIT License (MIT) // // Copyright (c) 2018 www.open3d.org // // Permission is hereby granted, free of charge, to any person obtaining a copy // of this software and associated documentation files (the "Software"), to deal // in the Software without restriction, including without limitation the rights // to use, copy, modify, merge, publish, distribute, sublicense, and/or sell // copies of the Software, and to permit persons to whom the Software is // furnished to do so, subject to the following conditions: // // The above copyright notice and this permission notice shall be included in // all copies or substantial portions of the Software. // // THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR // IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY, // FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE // AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER // LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING // FROM, OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS // IN THE SOFTWARE. // ---------------------------------------------------------------------------- #include <iostream> #include <thread> #include <librealsense2/rs.hpp> #include <Core/Core.h> #include <Visualization/Visualization.h> // Why IO.a, Core.a, Visualization.a don't have these objects? #include <Core/Utility/FileSystem.cpp> #include <Core/Camera/PinholeCameraTrajectory.cpp> #include <Core/Geometry/TriangleMesh.cpp> #include <Core/Geometry/TriangleMeshFactory.cpp> #include <Core/Geometry/PointCloud.cpp> #include <IO/ClassIO/PointCloudIO.cpp> #include <IO/ClassIO/IJsonConvertible.cpp> #include <IO/ClassIO/IJsonConvertibleIO.cpp> #include <Core/Geometry/LineSet.cpp> #include <Core/Geometry/DownSample.cpp> using namespace three; void print_option_range(const rs2::sensor& sensor, rs2_option option_type) { // from api_how_to.h std::cout << "Supported range fro option " << option_type << ":" << std::endl; rs2::option_range range = sensor.get_option_range(option_type); float default_value = range.def; float maximum_supported_value = range.max; float minimum_supported_value = range.min; float difference_to_next_value = range.step; std::cout << " Min Value : " << minimum_supported_value << std::endl; std::cout << " Max Value : " << maximum_supported_value << std::endl; std::cout << " Default Value : " << default_value << std::endl; std::cout << " Step : " << difference_to_next_value << std::endl; } void change_option(const rs2::sensor& sensor, rs2_option option_type, float requested_value) { try { sensor.set_option(option_type, requested_value); } catch(const rs2::error& e) { std::cerr << "Failed to set option " << option_type << ". (" << e.what() << ")" << std::endl; } } int main(int argc, char **args) try { rs2::context ctx; rs2::device_list devices = ctx.query_devices(); if(devices.size() == 1){ PrintInfo("There is a connected RealSense device.\n"); } else { PrintInfo("There are %d connected RealSense devices.\n", devices.size()); } if(devices.size() == 0){ return 0; } int dev_id = 0; rs2::device dev = devices[dev_id]; std::string name = "Unknown Device"; if(dev.supports(RS2_CAMERA_INFO_NAME)){ // include/h/rs_sensor.h name = dev.get_info(RS2_CAMERA_INFO_NAME); } PrintInfo("Using device %d, an %s\n", dev_id, name.c_str()); std::string sn = "########"; if(dev.supports(RS2_CAMERA_INFO_SERIAL_NUMBER)){ sn = std::string("#") + dev.get_info(RS2_CAMERA_INFO_SERIAL_NUMBER); } PrintInfo(" Serial number: %s\n", sn.c_str()); std::string fn = "########"; if(dev.supports(RS2_CAMERA_INFO_FIRMWARE_VERSION)){ fn =dev.get_info(RS2_CAMERA_INFO_FIRMWARE_VERSION); } PrintInfo(" Firmware version: %s\n\n", fn.c_str()); int index = 0; int rgb_camera = -1; int stereo_module = -1; std::vector<rs2::sensor> sensors = dev.query_sensors(); // api_how_to.h for(rs2::sensor sensor : sensors){ if(sensor.supports(RS2_CAMERA_INFO_NAME)){ PrintInfo("%d: %s\n", index, sensor.get_info(RS2_CAMERA_INFO_NAME)); if(sensor.get_info(RS2_CAMERA_INFO_NAME) == std::string("Stereo Module")){ stereo_module = index; } if(sensor.get_info(RS2_CAMERA_INFO_NAME) == std::string("RGB Camera")){ rgb_camera = index; } index++; } } if(sensors[rgb_camera].supports(RS2_OPTION_ENABLE_AUTO_EXPOSURE)){ // rs_option.h print_option_range(sensors[rgb_camera], RS2_OPTION_ENABLE_AUTO_EXPOSURE); change_option(sensors[rgb_camera], RS2_OPTION_ENABLE_AUTO_EXPOSURE, 0.0); // api_how_to.h } if(sensors[rgb_camera].supports(RS2_OPTION_EXPOSURE)){ print_option_range(sensors[rgb_camera], RS2_OPTION_EXPOSURE); change_option(sensors[rgb_camera], RS2_OPTION_EXPOSURE, 625.0); } if(sensors[rgb_camera].supports(RS2_OPTION_GAIN)){ print_option_range(sensors[rgb_camera], RS2_OPTION_GAIN); change_option(sensors[rgb_camera], RS2_OPTION_GAIN, 128.0); } if(sensors[rgb_camera].supports(RS2_OPTION_ENABLE_AUTO_WHITE_BALANCE)){ print_option_range(sensors[rgb_camera], RS2_OPTION_ENABLE_AUTO_WHITE_BALANCE); change_option(sensors[rgb_camera], RS2_OPTION_ENABLE_AUTO_WHITE_BALANCE, 0.0); } // Above, it seems doesn't work... // Decrare Realsense pipeline, encapsulating the actual device and sensors rs2::pipeline pipe; // rs_pipeline.h int depth_width = 1280; // depth_intr.width; int depth_height = 720; // depth_intr.height; int depth_fps = 30; // 30 or 60 due to resolution int color_width = 1280; // color_intr.width int color_height = 720; // color_intr.height int color_fps = 30; // 30 or 60 rs2::config cfg; // examples/measure/rs-measure.cpp cfg.enable_stream(RS2_STREAM_DEPTH, depth_width, depth_height, RS2_FORMAT_Z16, depth_fps); cfg.enable_stream(RS2_STREAM_COLOR, color_width, color_height, RS2_FORMAT_RGB8, color_fps); // Start streaming with recommended configuration auto profile = pipe.start(cfg); std::this_thread::sleep_for(std::chrono::milliseconds(50)); if(sensors[stereo_module].supports(RS2_OPTION_WHITE_BALANCE)){ change_option(sensors[stereo_module], RS2_OPTION_WHITE_BALANCE, 2100.0); } auto depth_stream = profile.get_stream(RS2_STREAM_DEPTH).as<rs2::video_stream_profile>(); auto depth_intr = depth_stream.get_intrinsics(); // Calibration data PrintInfo("%d %d %.6f %.6f %.6f %.6f\n", depth_intr.width, depth_intr.height, depth_intr.fx, depth_intr.fy, depth_intr.ppx, depth_intr.ppy); for(int i=0; i<5; i++){ PrintInfo("%.6f ", depth_intr.coeffs[i]); } PrintInfo("\n\n"); auto color_stream = profile.get_stream(RS2_STREAM_COLOR).as<rs2::video_stream_profile>(); auto color_intr = color_stream.get_intrinsics(); PrintInfo("%d %d %.6f %.6f %.6f %.6f\n", color_intr.width, color_intr.height, color_intr.fx, color_intr.fy, color_intr.ppx, color_intr.ppy); for(int i=0; i<5; i++){ PrintInfo("%.6f ", color_intr.coeffs[i]); } PrintInfo("\n\n"); // get_extrinsics from api_how_to.h try { auto extrinsics = depth_stream.get_extrinsics_to(color_stream); for(int i=0; i<9; i++){ PrintInfo("%.6f ", extrinsics.rotation[i]); } PrintInfo("\n"); for(int i=0; i<3; i++){ PrintInfo("%.6f ", extrinsics.translation[i]); } PrintInfo("\n"); } catch(const std::exception& e) { std::cerr << "Failed to get extrinsics for the given streams. " << e.what() << std::endl; } auto depth_image_ptr = std::make_shared<Image>(); depth_image_ptr->PrepareImage(depth_width, depth_height, 1, 2); auto color_image_ptr = std::make_shared<Image>(); color_image_ptr->PrepareImage(color_width, color_height, 3, 1); FPSTimer timer("Realsense stream"); Visualizer depth_vis, color_vis; if(depth_vis.CreateWindow("Depth", depth_width, depth_height, 15, 50) == false || depth_vis.AddGeometry(depth_image_ptr) == false || color_vis.CreateWindow("Color", color_width, color_height, 675, 50) == false || color_vis.AddGeometry(color_image_ptr) == false) { return 0; } while(depth_vis.PollEvents() && color_vis.PollEvents()){ timer.Signal(); rs2::frameset data = pipe.wait_for_frames(); // Wait for next set of frames from the camera // Retrieve Aligned Frame rs2::align align( rs2_stream::RS2_STREAM_COLOR ); rs2::frameset aligned_frameset = align.process( data ); if( !aligned_frameset.size() ){ return -1; } rs2::frame depth = aligned_frameset.get_depth_frame(); // Find the depth data rs2::frame color = aligned_frameset.get_color_frame(); // Find the color data memcpy(depth_image_ptr->data_.data(), depth.get_data(), depth_width * depth_height * 2); memcpy(color_image_ptr->data_.data(), color.get_data(), color_width * color_height * 3); depth_vis.UpdateGeometry(); color_vis.UpdateGeometry(); double depth_scale = 1000; double depth_trunc = 4.0; bool convert_rgb_to_intensity = false; auto rgbd = CreateRGBDImageFromColorAndDepth(*color_image_ptr, *depth_image_ptr, depth_scale, depth_trunc, convert_rgb_to_intensity); auto pcd = CreatePointCloudFromRGBDImage(*rgbd, PinholeCameraIntrinsic(color_intr.width, color_intr.height, color_intr.fx, color_intr.fy, color_intr.ppx, color_intr.ppy)); // DrawGeometries({pcd}); // uncomment, if you want to check the result if(sensors[rgb_camera].supports(RS2_OPTION_WHITE_BALANCE)){ PrintInfo("\r %.2f\t", sensors[rgb_camera].get_option(RS2_OPTION_WHITE_BALANCE)); } } return 1; } catch(const rs2::error & e) { std::cerr << "RealSense error calling " << e.get_failed_function() << "(" << e.get_failed_args() << "):\n " << e.what() << std::endl; return EXIT_FAILURE; } catch(const std::exception& e) { std::cerr << e.what() << std::endl; // pipe.stop(); // how can I get the pointer of the pipe? return EXIT_FAILURE; }

updated 2018/03/19

auto pcd = CreatePointCloudFromRGBDImage(*rgbd,

PinholeCameraIntrinsic(color_intr.width, color_intr.height,

color_intr.fx, color_intr.fy,

color_intr.ppx, color_intr.ppy));

I'm sorry, I missed. not depth_intr.